Achieving 200 Million Concurrent Connections with HiveMQ

Executive Summary: Why Scale Matters

HiveMQ is an MQTT Platform that has established itself as a proven solution for reliable, scalable, mission-critical IoT, with the capability to handle tens of millions of connected devices and concurrent connections. HiveMQ powers large-scale IoT projects like Audi’s vehicle connectivity across Europe, user experience data analysis for one of the world’s largest telecom operators, and connected products like CPAP machines and warehouse robots.

More customers are coming to us with this need for scale while also demanding high reliability and performance. To support this need, our team benchmarked the HiveMQ platform at 200 million connections to demonstrate its ability to handle extreme scale.

Whether your current IoT deployment is already large or will grow rapidly over the next few years, you need to think about scale, and the learnings from our benchmark can help.

The 200 Million Benchmark

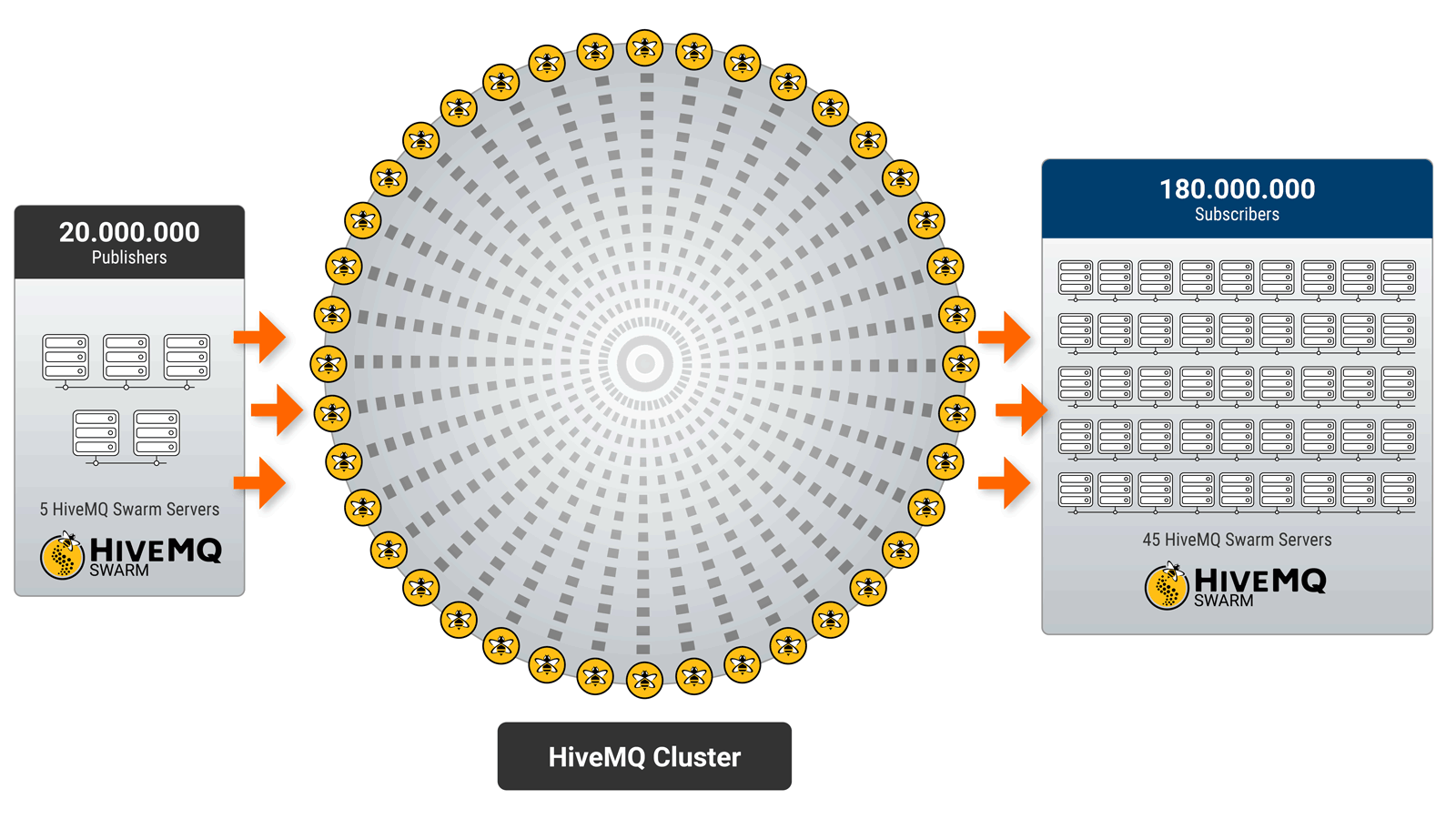

Our team created an installation based on projected real-world requirements like computing infrastructure and storage on a hyperscale cloud (AWS), using a pub/sub architecture with an asymmetrical ratio and a clustered system for resilience.

This benchmark lets us observe, document, and share with the community how the HiveMQ broker meets scaling expectations using the MQTT protocol.

Highlights:

Gradual and even ramp up to 200 million connections in 37 minutes

20 million publishers connecting to 180 million subscribers over 200 million unique MQTT topics

Peak message throughput of 1 million PUBLISH messages per second

Real-world scenario using commercially available public infrastructure with publicly available products from HiveMQ (Broker and Swarm)

Workload was contained to a single installation to test the true performance of the broker cluster

The results of our benchmark test on the HiveMQ broker demonstrate its ability to meet the demands of the growing IoT ecosystem. Read on for the technical details of how the benchmark was achieved.

The Groundwork

We deployed a 40-node HiveMQ cluster in the AWS public cloud to run this benchmark. We built the cluster with a commercially available HiveMQ release, version 4.11.

To establish the 200 million connections, we used our load generator HiveMQ Swarm.

HiveMQ Swarm is an IoT testing and simulation tool that generates load via MQTT clients. We simulated 20 million publishers pushing data into the HiveMQ brokers and out to 180 million subscribers.

Performance Results

We start with the headline statistics from the test, then walk through the test phases, setup, and infrastructure.

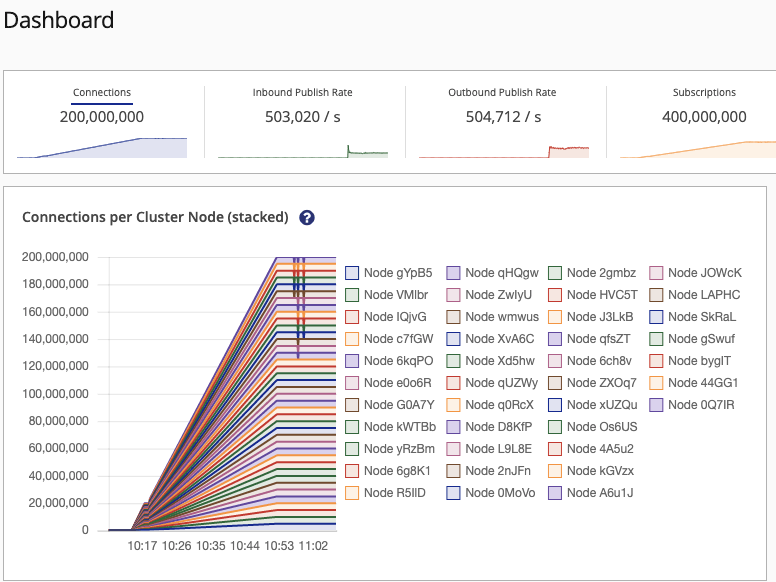

The installation (details in a later section) performed as expected. It took about 37 minutes for all 200 million clients to connect to the HiveMQ cluster. The clients subscribed to 200 million unique topics, and the cluster handled more than 1 million messages per second.

Test Phases

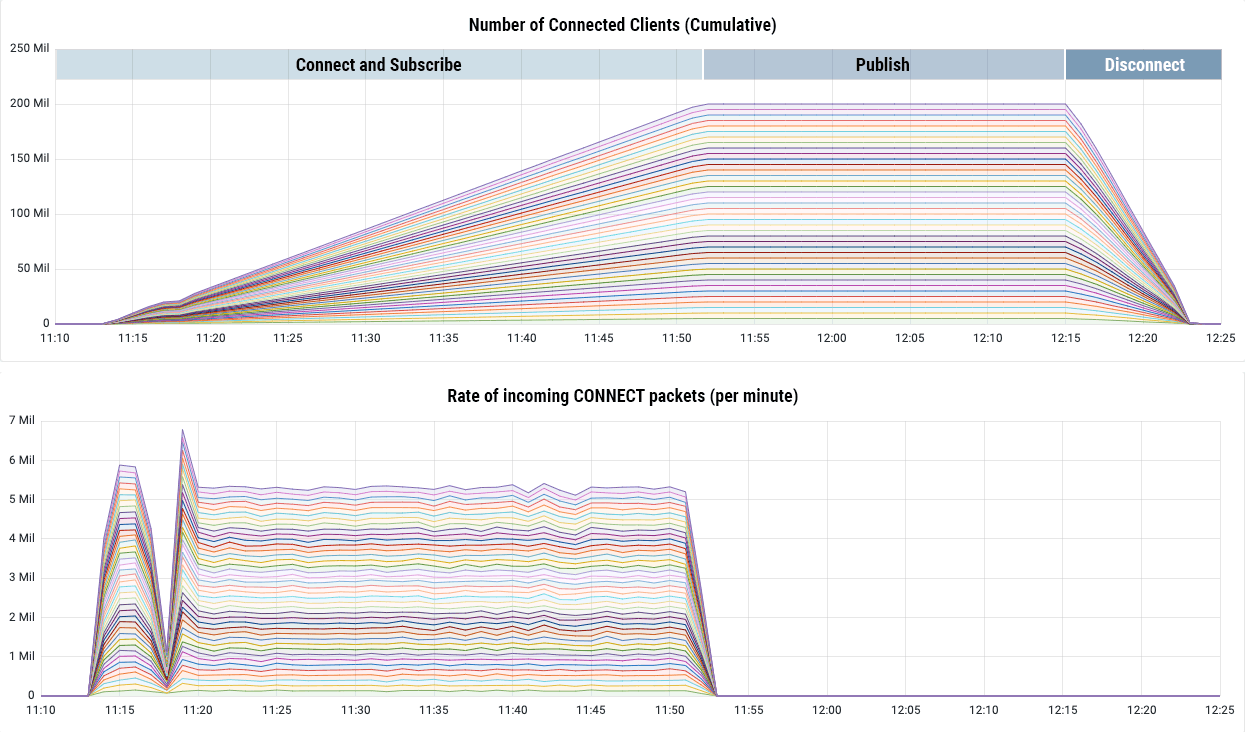

The visualization (directly exported from Grafana) shows the test phases mapped over the timeline. Client connections were closed at the end of the test.

Phases:

Connect and Subscribe

Publish

Disconnect
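As a rough cross-check, the length of each phase can be derived from the stage durations in the HiveMQ Swarm scenario listed under Details. The sketch below assumes that the stages run strictly one after another and that each stage lasts exactly as long as its configured `delay` duration. The dictionary and variable names are illustrative and not part of any HiveMQ tooling.

```python
# Stage durations in seconds, copied from the Swarm scenario in this document.
STAGE_DURATIONS_S = {
    "connect-pub": 200,
    "connect": 2000,
    "wait-1": 30,
    "publish": 1200,
    "wait-2": 30,
    "disconnect": 420,
}

# Assumption: stages execute sequentially, so phase lengths simply add up.
connect_phase_s = STAGE_DURATIONS_S["connect-pub"] + STAGE_DURATIONS_S["connect"]
total_s = sum(STAGE_DURATIONS_S.values())

print(f"Connect and subscribe: {connect_phase_s / 60:.1f} min")
print(f"Publish: {STAGE_DURATIONS_S['publish'] / 60:.1f} min")
print(f"Disconnect: {STAGE_DURATIONS_S['disconnect'] / 60:.1f} min")
print(f"Whole scenario: {total_s / 60:.1f} min")
```

Under these assumptions the connect phase comes to roughly 37 minutes, which is in line with the reported ramp-up time.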

Grafana, an open source visualization tool, shows the cumulative number of connected clients.

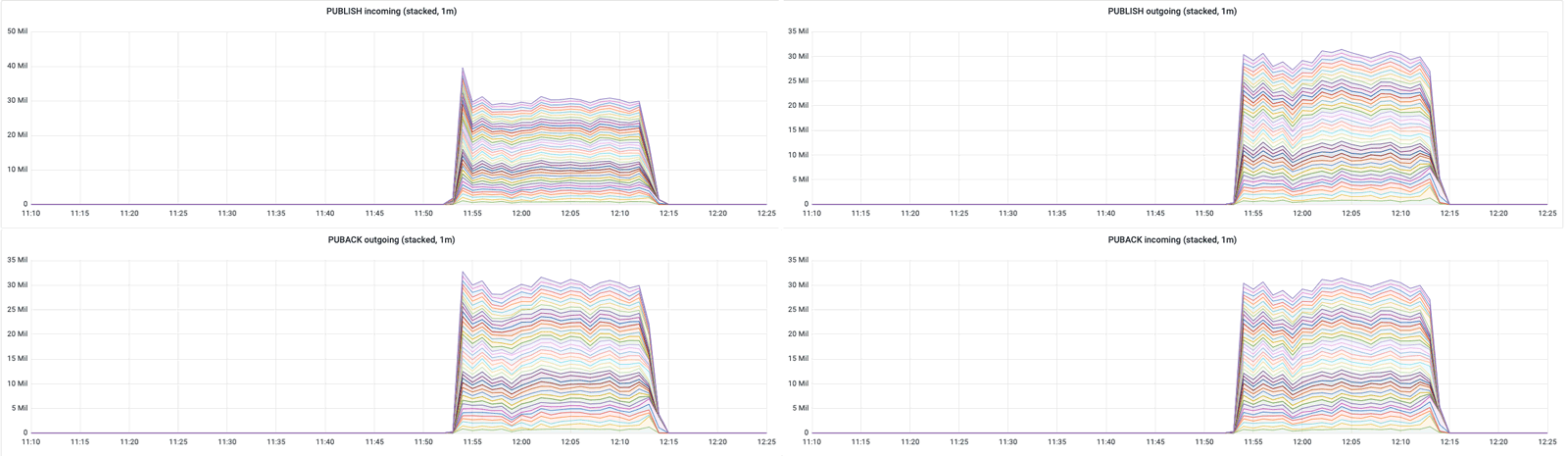

Grafana captures the message rate for PUBLISH and PUBACK (incoming and outgoing).

CPU and Memory Load

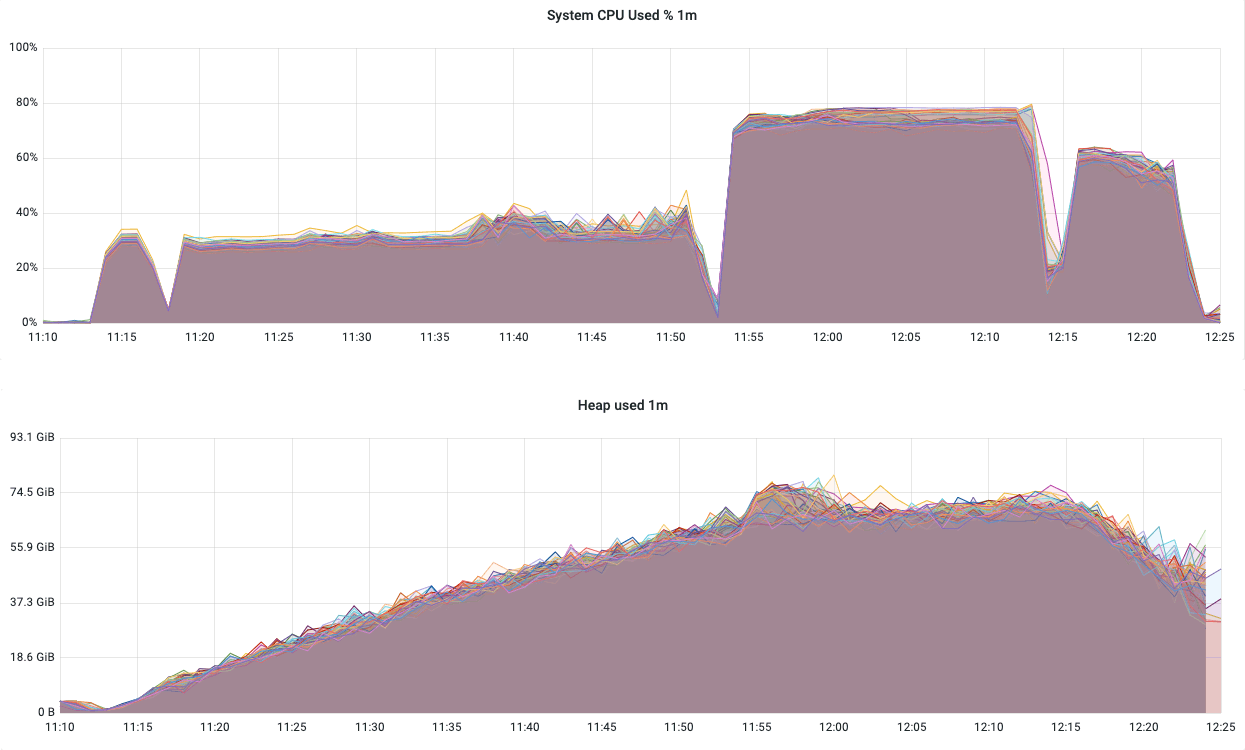

During the connection phase, the CPU usage on all broker instances stayed consistently between 30 and 40%. The JVM heap usage steadily increased to about 65 GB on average per node (or 13 KB per connection). During the publish phase, the CPU usage hovered at 75 to 80% and the heap usage fluctuated between 65 and 75 GB. During the disconnect phase, the CPU usage was about 55%.
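The per-connection figure follows from the per-node heap usage and the number of connections each node holds. The sketch below reproduces that arithmetic; it assumes decimal units (1 GB = 10^9 bytes, 1 KB = 1,000 bytes) and an even spread of connections across the 40 nodes, and the variable names are illustrative.

```python
HEAP_PER_NODE_GB = 65            # average JVM heap per node after the connect phase
NODES = 40                       # broker instances in the cluster
TOTAL_CONNECTIONS = 200_000_000

# Assumption: connections are spread evenly across all nodes.
connections_per_node = TOTAL_CONNECTIONS // NODES

# Assumption: decimal units (1 GB = 10**9 bytes, 1 KB = 1000 bytes).
heap_bytes_per_node = HEAP_PER_NODE_GB * 10**9
heap_per_connection_kb = heap_bytes_per_node / connections_per_node / 1000

print(f"Connections per node: {connections_per_node:,}")
print(f"Heap per connection: {heap_per_connection_kb:.1f} KB")
```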

CPU usage was consistent throughout, apart from one gap early in the test when the publisher group finished connecting.

Building the Test Scenario

In this section, we walk through the methodology, preparation, and goals of this large-scale test.

Goals

Connect 200 million clients to a cluster of HiveMQ broker instances in less than 40 minutes

Reach a peak throughput of 1 million PUBLISH messages per second

Create 5 million connections per node

Test with realistic messaging and subscription patterns
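To put the connection goals into numbers, the sketch below computes the minimum average connect rate the 40-minute limit implies and compares it with the rate implied by the observed ramp-up of about 37 minutes. It also checks that 5 million connections per node across the 40-node cluster adds up to the 200 million target. The function and constant names are illustrative.

```python
TARGET_CONNECTIONS = 200_000_000
NODES = 40
CONNECTIONS_PER_NODE_GOAL = 5_000_000
RAMP_UP_LIMIT_MIN = 40       # goal: connect everything in less than 40 minutes
OBSERVED_RAMP_UP_MIN = 37    # approximate ramp-up time reported in the results


def connect_rate(connections: int, minutes: float) -> float:
    """Average connections per second needed to finish within `minutes`."""
    return connections / (minutes * 60)


required = connect_rate(TARGET_CONNECTIONS, RAMP_UP_LIMIT_MIN)
observed = connect_rate(TARGET_CONNECTIONS, OBSERVED_RAMP_UP_MIN)

print(f"Per-node goal covers the target: {NODES * CONNECTIONS_PER_NODE_GOAL == TARGET_CONNECTIONS}")
print(f"Minimum average rate for the goal: {required:,.0f} connections/s")
print(f"Average rate at ~37 minutes: {observed:,.0f} connections/s")
```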

Infrastructure Used

| Compute layer | AWS EC2 |
|---|---|
| Region | EC2 us-east-1 |
| Cluster configuration for HiveMQ MQTT broker | 40 x m5n.8xlarge |
| HiveMQ Swarm instances (for MQTT clients) | 50 + 1 (commander) r5n.4xlarge |
| Broker instance configuration | 32 vCPU, 128 GB memory, 300 GB gp3 storage with 3000 IOPS |
| HiveMQ Swarm instance configuration | 8 vCPU, 128 GB memory, 300 GB gp3 storage with 3000 IOPS |

Note: HiveMQ ensures data durability by persisting incoming data to disk.

Details

We used HiveMQ Swarm to connect 200 million clients to the HiveMQ Broker cluster. We used MQTT v5 for this test. The clients were connected with a Keep Alive of 30 minutes, clean-start set to “false”, and a session expiry of 24 hours.
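To make these connection settings concrete, the sketch below hand-encodes an MQTT v5 CONNECT packet with a Keep Alive of 30 minutes, Clean Start set to false, and a Session Expiry Interval of 24 hours. It is an illustration of the wire format only and not how HiveMQ Swarm builds its packets; the helper names and the client ID `c-000000001` are hypothetical, and the packet carries no credentials or Will message.

```python
import struct


def encode_varint(value: int) -> bytes:
    """Encode an MQTT Variable Byte Integer (used for length fields)."""
    out = bytearray()
    while True:
        byte = value % 128
        value //= 128
        if value > 0:
            byte |= 0x80  # continuation bit: more bytes follow
        out.append(byte)
        if value == 0:
            return bytes(out)


def encode_string(text: str) -> bytes:
    """Encode a UTF-8 string with its two-byte length prefix."""
    data = text.encode("utf-8")
    return struct.pack("!H", len(data)) + data


def build_connect(client_id: str, keep_alive_s: int,
                  clean_start: bool, session_expiry_s: int) -> bytes:
    """Build a minimal MQTT v5 CONNECT packet (no credentials, no Will)."""
    flags = 0x02 if clean_start else 0x00  # bit 1 of the connect flags is Clean Start
    # Property 0x11 is the Session Expiry Interval, a four-byte integer in seconds.
    properties = b"\x11" + struct.pack("!I", session_expiry_s)
    variable_header = (
        encode_string("MQTT")                  # protocol name
        + bytes([5, flags])                    # protocol version 5, connect flags
        + struct.pack("!H", keep_alive_s)      # Keep Alive in seconds (16-bit)
        + encode_varint(len(properties))
        + properties
    )
    remaining = variable_header + encode_string(client_id)
    return b"\x10" + encode_varint(len(remaining)) + remaining


packet = build_connect(
    client_id="c-000000001",          # hypothetical ID
    keep_alive_s=30 * 60,             # 30 minutes
    clean_start=False,
    session_expiry_s=24 * 60 * 60,    # 24 hours
)
print(packet.hex(" "))
```

Keep Alive is a 16-bit value in seconds, so 30 minutes (1,800 seconds) fits comfortably below the protocol maximum of 65,535 seconds.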

Of the 200 million clients, 20 million acted as publishers. After all clients had connected and subscribed, the publishers sent QoS 1 PUBLISH messages destined for the other 180 million clients, evenly distributed at one message every 40 seconds per publisher, for 20 minutes.

This works out to 500,000 incoming PUBLISH messages per second (20 million publishers divided by 40 seconds) and the same number of outgoing PUBLISH messages, for a total of 1,000,000 PUBLISH messages processed and generated per second. All PUBLISH messages carry the same 16-byte payload.
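The publish rate can be reproduced from the publisher count and the publish interval. The sketch below assumes that each incoming PUBLISH is delivered to exactly one subscriber, which is what the equal incoming and outgoing rates reported in this document imply; the constant names are illustrative.

```python
PUBLISHERS = 20_000_000
PUBLISH_INTERVAL_S = 40        # one message per publisher every 40 seconds
PUBLISH_PHASE_S = 20 * 60      # publish phase lasts 20 minutes

# Incoming PUBLISH rate at the cluster.
inbound_per_s = PUBLISHERS // PUBLISH_INTERVAL_S

# Assumption: every message is delivered to exactly one subscriber.
outbound_per_s = inbound_per_s

messages_per_publisher = PUBLISH_PHASE_S // PUBLISH_INTERVAL_S
total_inbound = PUBLISHERS * messages_per_publisher

print(f"Incoming PUBLISH: {inbound_per_s:,}/s")
print(f"Outgoing PUBLISH: {outbound_per_s:,}/s")
print(f"Combined PUBLISH: {inbound_per_s + outbound_per_s:,}/s")
print(f"Messages per publisher: {messages_per_publisher}")
print(f"Total PUBLISH messages received: {total_inbound:,}")
```

The 30 messages per publisher match the `count="30"` attribute of the publish stage in the scenario.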

Once all PUBLISH messages were sent, the clients were disconnected at an even rate.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<scenario xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:noNamespaceSchemaLocation="http://hivemq.com/scenario.xsd">
    <brokers>
        <!--Add listeners as separate brokers-->
    </brokers>
    <clientGroups>
        <clientGroup id="publishers">
            <count>20000000</count>
        </clientGroup>
        <clientGroup id="clients">
            <count>180000000</count>
            <clientIdPattern>c-[0-9]{9}</clientIdPattern>
        </clientGroup>
    </clientGroups>
    <topicGroups>
        <topicGroup id="topics">
            <topicNamePattern>t-[0-9]{9}</topicNamePattern>
            <count>180000000</count>
        </topicGroup>
    </topicGroups>
    <stages>
        <!-- Publishers connect first, spread linearly over 200 seconds -->
        <stage id="connect-pub">
            <lifeCycle id="connect-pub-lc" clientGroup="publishers">
                <delay spread="linear" duration="200s"/>
                <connect keepAlive="30m" cleanStart="false" sessionExpiry="24h" autoReconnect="true"/>
            </lifeCycle>
        </stage>
        <!-- The remaining clients connect over 2000 seconds and subscribe -->
        <stage id="connect">
            <lifeCycle id="connect-lc" clientGroup="clients">
                <delay spread="linear" duration="2000s"/>
                <connect keepAlive="30m" cleanStart="false" sessionExpiry="24h" autoReconnect="true"/>
                <subscribe to="topics" qos="2"/>
            </lifeCycle>
        </stage>
        <stage id="wait-1">
            <lifeCycle id="wait-1-lc" clientGroup="clients">
                <delay duration="30s"/>
            </lifeCycle>
        </stage>
        <!-- Each publisher sends 30 QoS 1 messages spread over 1200 seconds (one every 40 seconds) -->
        <stage id="publish">
            <lifeCycle id="publish-lc" clientGroup="publishers">
                <delay duration="1200s" spread="linear"/>
                <publish topicGroup="topics" count="30" qos="1" message="0123456789012345"/>
            </lifeCycle>
        </stage>
        <stage id="wait-2">
            <lifeCycle id="wait-2-lc" clientGroup="clients">
                <delay duration="30s"/>
            </lifeCycle>
        </stage>
        <!-- Clients disconnect at an even rate over 420 seconds -->
        <stage id="disconnect">
            <lifeCycle id="disconnect-lc" clientGroup="clients">
                <delay spread="linear" duration="420s"/>
                <disconnect/>
            </lifeCycle>
        </stage>
    </stages>
</scenario>
```

Common Questions and Discussion

Can HiveMQ handle 1 billion concurrent connections?

We are confident it can. This current benchmark of 200 million concurrent connections is already several times larger than some of the largest known IoT production deployments. If we increase the number of concurrent connections, we move beyond even liberal projections of growth for individual IoT deployments.

At HiveMQ, we see KPIs like resource usage, long-tail latencies, and resilience against infrastructure outages matter most to businesses as they scale. We will continue to produce more benchmarks around the efficiency and reliability of HiveMQ at scale.

Why not split the publishers and subscribers 50:50?

We used fewer publishers to ensure the PUBLISH message load got evenly distributed across the HiveMQ broker instances. This helps avoid situations where groups of publishers and subscribers are constantly connected to the same broker instance.

A test with a 50:50 split between publishers and subscribers can create misleading results for the reason just described.

This is important because it’s closer to a real-world scenario where a smaller number of publishers push data to many subscribers. This also means we could generate and manage a significantly higher (compared to a 50:50 split) amount of intra-cluster load and observe the broker’s behavior.

Why use a long Keep Alive timer and the small payload size?

We used a long Keep Alive (30 minutes) because PING messages don’t affect the scalability of the broker cluster: processing PINGREQ messages doesn’t require communication between broker instances. A long Keep Alive is also typical of the Wide Area Network connections that underpin most real-world deployments.
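For a sense of how little PING traffic a 30-minute Keep Alive produces, the sketch below estimates the cluster-wide PINGREQ rate. It assumes every client is otherwise idle and sends exactly one PINGREQ per Keep Alive interval, which is a simplification since real clients may ping earlier or skip pings while other traffic flows. The 60-second value is a hypothetical comparison point and was not part of the benchmark.

```python
CLIENTS = 200_000_000


def pingreq_rate(clients: int, keep_alive_s: int) -> float:
    """PINGREQ packets per second if each idle client pings once per interval."""
    return clients / keep_alive_s


benchmark_rate = pingreq_rate(CLIENTS, 30 * 60)   # Keep Alive used in the benchmark
short_rate = pingreq_rate(CLIENTS, 60)            # hypothetical 60-second Keep Alive

print(f"30-minute Keep Alive: {benchmark_rate:,.0f} PINGREQ/s")
print(f"60-second Keep Alive: {short_rate:,.0f} PINGREQ/s")
```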

We used a payload size of 16 bytes because payload size doesn’t affect how the broker processes PUBLISH messages (except for enormous payloads, which can be as large as 256 MB). Payload size mainly impacts infrastructure such as storage and network bandwidth, which wasn’t the focus of the test. Since we were using a public cloud service (AWS), we did not see value in optimizing the payload for this project.

Using the same payloads instead of generating new payloads doesn’t impact the broker’s performance since payloads were not cached or deduplicated in any way. However, generating random payloads would unnecessarily increase the workload for the HiveMQ Swarm agents.

Should we count PUBACKs in message rate?

In our scenario, the HiveMQ broker cluster processed and created 1,000,000 PUBLISH messages per second (incoming and outgoing).

Concurrently, the broker cluster also sent PUBACK to the publishing client and received PUBACK messages from the receiving client at the same rate. This results in an average rate of 1 million incoming MQTT messages per second and the same rate of outgoing MQTT messages during the publish phase.

Technically speaking, PUBACKs can be counted in the rate calculation. With PUBACKs included, the HiveMQ broker cluster processes 2 million messages per second. However, we filter our perspective through a typical business use case, focusing on the PUBLISH messages that carry a payload.
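The two ways of counting can be laid out side by side. The sketch below models the QoS 1 flow as seen by the broker cluster, using the 500,000 incoming and 500,000 outgoing PUBLISH messages per second from this benchmark. The class and property names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QoS1Traffic:
    """Per-second packet rates seen by the broker cluster for QoS 1 traffic."""

    publish_in: int   # PUBLISH packets received from publishers
    publish_out: int  # PUBLISH packets delivered to subscribers

    @property
    def puback_out(self) -> int:
        # The broker acknowledges every incoming QoS 1 PUBLISH.
        return self.publish_in

    @property
    def puback_in(self) -> int:
        # Each subscriber acknowledges every QoS 1 PUBLISH it receives.
        return self.publish_out

    @property
    def incoming(self) -> int:
        return self.publish_in + self.puback_in

    @property
    def outgoing(self) -> int:
        return self.publish_out + self.puback_out

    @property
    def payload_carrying(self) -> int:
        # Only PUBLISH packets carry an application payload.
        return self.publish_in + self.publish_out


traffic = QoS1Traffic(publish_in=500_000, publish_out=500_000)
print(f"Incoming MQTT messages: {traffic.incoming:,}/s")
print(f"Outgoing MQTT messages: {traffic.outgoing:,}/s")
print(f"All messages incl. PUBACK: {traffic.incoming + traffic.outgoing:,}/s")
print(f"PUBLISH only: {traffic.payload_carrying:,}/s")
```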

Why is all publishing done with QoS 1?

QoS 1 is the most used QoS level in IoT deployments as it strikes a good balance between performance and durability for most use cases.

How did our test deviate from real-world recommendations?

To focus on the scalability and stability aspect of this test, we chose the following settings which had a neutral effect on the premise of the experiment and the performance numbers:

Skipped using load balancers since, depending on their type, they can add peculiarities to the setup. They also add to testing and configuration times.

For the broker instance:

JVM heap of each broker instance was set to 85 GB (the production recommendation is 50% of RAM).

Connection throttling and overload protection were disabled, since a test environment allows a smaller safety margin. If turned on, the overload protection feature would have started to throttle incoming PUBLISH messages at a slightly lower rate (about 450,000 per second) than the 500,000 messages per second we needed.
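The size of these deviations can be quantified with a few lines of arithmetic. The sketch below uses the 128 GB of memory per broker instance from the Infrastructure section and treats the throttle threshold as the approximate 450,000 messages per second quoted above; the constant names are illustrative.

```python
RAM_GB = 128                      # memory per broker instance
TEST_HEAP_GB = 85                 # heap used in the benchmark
RECOMMENDED_HEAP_FRACTION = 0.5   # production recommendation: 50% of RAM

REQUIRED_PUBLISH_IN = 500_000     # incoming PUBLISH/s the test needed
THROTTLE_PUBLISH_IN = 450_000     # approximate rate at which throttling would start

recommended_heap_gb = RAM_GB * RECOMMENDED_HEAP_FRACTION
test_heap_fraction = TEST_HEAP_GB / RAM_GB
throttle_shortfall = 1 - THROTTLE_PUBLISH_IN / REQUIRED_PUBLISH_IN

print(f"Recommended heap: {recommended_heap_gb:.0f} GB, used in test: {TEST_HEAP_GB} GB")
print(f"Test heap as share of RAM: {test_heap_fraction:.0%}")
print(f"Throttle threshold below required rate by: {throttle_shortfall:.0%}")
```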

How can I run this test myself?

You will need three main elements to reproduce this benchmark:

An AWS account and sufficient AWS skills to set up the environment. Be aware that a benchmark run will cost around $100 per run depending on how fast/automated you can spin up and wind down your environment. Please refer to the Infrastructure section for compute environment details.

The HiveMQ Broker config and the HiveMQ Swarm Scenario used for this benchmark. The necessary environmental adaptations are marked in the files.

HiveMQ Platform and HiveMQ Swarm licenses of sufficient magnitude to allow for the tested scale. Please reach out to benchmarking@hivemq.com and we are happy to start a conversation about this.

Conclusion

There are three critical considerations companies working to deploy and scale IoT projects can learn from the successful HiveMQ 200 million benchmark.

Load test before deploying into production. Many customers think the small POC phase is enough without using a distributed simulation environment to successfully test millions of clients and messages. As a result, they truly don’t know how the deployment will behave. Load testing for performance, scalability, and reliability ensures success.

Plan for scale. There are two types of projects. One is already large, for instance connecting all 115 million smart electricity meters in the US, or offering services for the 13 million+ connected vehicles sold in the US since 2021. The other starts small but plans for growth as new services, devices, and sites are added, for instance a consumer goods manufacturing company with plans to add 20 factories. In both cases, it is essential to support growth from the outset by planning for scale.

Architecture matters. In this test, the clustered architecture provided by the HiveMQ MQTT broker makes it ultra-scalable and highly available. Clustering is typically used in cloud environments for systems that must not fail and need linear scalability over time. While the MQTT protocol has its inherent benefits, it’s not built for extreme scale by itself and a premium MQTT broker like HiveMQ is essential.

The long-term success of your business depends on the ability to scale. Thanks to the benchmark, you know MQTT can help, and HiveMQ is available to support you along the entire journey, whether you start small or require enterprise-level scale and reliability. Contact us to download the HiveMQ broker and build a data foundation for your project that can scale to any size.