Automating MQTT Broker Management on Kubernetes with IaC and GitOps: Part 1

In today’s world of connected devices and real-time data flow, many companies rely on MQTT to manage and route massive volumes of information. However, deploying an MQTT broker in a production Kubernetes environment, especially at scale, brings a unique set of challenges. Companies often struggle with configuration drift, version control issues, lack of visibility, and managing consistent deployments across multiple clusters and environments. Traditional deployment approaches make it difficult to keep up with rapid changes while ensuring stability and security.

This is where Infrastructure as Code (IaC) and GitOps come in. By defining infrastructure configurations in code, IaC enables teams to create reproducible deployments and maintain consistency across environments. GitOps builds on this by using Git as the single source of truth for both infrastructure and application code, enabling automated, declarative, and consistent deployments on Kubernetes. With GitOps and IaC, companies can streamline the MQTT broker deployment process, simplify rollback and recovery, and enhance overall reliability and scalability within their Kubernetes clusters.

In this two-part blog series, we explore how adopting IaC and GitOps can help overcome the complexities of large-scale MQTT broker deployments on Kubernetes and transform how organizations manage their production environments. In Part 1, we show how to prep our environment to support a secure, scalable, and GitOps-driven deployment. Let’s dive in.

Deployment Automation Setup with Argo CD and Terraform

At the heart of this GitOps-driven deployment solution is Argo CD, a Kubernetes-native continuous delivery tool that automates the deployment and synchronization of applications across clusters. Designed with cloud-native principles, Argo CD integrates seamlessly with Kubernetes and adheres to its declarative management approach, making it ideal for modern containerized environments. One of Argo CD’s core concepts is the Application, which represents a Kubernetes resource defined by manifests stored in a Git repository. These Application resources not only specify what to deploy but also keep track of the desired state, ensuring that the deployed applications in the cluster always match what’s defined in Git.

Argo CD continuously monitors these Applications, detecting any divergence between the live state and the desired state in Git. If discrepancies arise—whether due to configuration drift, manual changes, or new commits to the Git repository—Argo CD can automatically or manually synchronize resources back to the desired state, depending on the configured policy. This ensures that deployments are reproducible and consistent across environments, a cornerstone of GitOps.

What happens when you start completely from scratch or want to create multiple deployments? In large-scale environments, the goal is to minimize manual steps even in these situations, ensuring every part of the deployment is automated, consistent, and easily repeatable. This is where Terraform comes in. By defining infrastructure as code, Terraform enables a truly reproducible solution, allowing you to deploy all necessary components, including Argo CD itself, without manual intervention.

In this setup, Terraform is used to perform essential bootstrapping tasks, configuring the initial resources needed to get Argo CD up and running in the Kubernetes cluster. With Terraform’s declarative syntax, you can define these resources as code, making it straightforward to replicate the setup in different environments and scale the deployment consistently.

A key component of Terraform’s functionality is its state, a file that records the current status of all resources deployed by Terraform. To ensure that this state isn’t stored locally on a developer’s machine, which could lead to inconsistencies and version conflicts, Terraform supports remote state storage. The remote state enables teams to store and share this state in a central, accessible location. Conveniently, Kubernetes itself can serve as a backend for Terraform state, allowing the state to be securely stored within the Kubernetes cluster. This centralization of state in Kubernetes promotes consistency across deployments and avoids local dependencies.

Beyond bootstrapping, Terraform’s extensive provider support also enables users to create scalable infrastructure across multiple platforms. With compatibility for major cloud platforms, Terraform can provision Kubernetes clusters, such as Amazon’s Elastic Kubernetes Service (EKS) or Microsoft’s Azure Kubernetes Service (AKS).

For simplicity, this article assumes a Kubernetes cluster is already deployed and accessible, allowing us to focus on deploying and managing the HiveMQ MQTT broker and all dependencies within the cluster using GitOps practices. With Terraform handling the initial setup, Argo CD is fully operational and ready for seamless, Git-driven deployments, providing an entirely automated, reproducible deployment solution.

Setting Up the Git Repository

A Git repository is central to any GitOps workflow, serving as the single source of truth for all configurations and application definitions. To ensure consistency and organization, the repository needs to follow a specific structure that clearly defines the resources to be deployed and managed. For this example, we’ll use GitHub to host our repository.

Within this repository, the desired structure should include directories for configuration files, application manifests, and any other resources that Argo CD will monitor and synchronize with the Kubernetes cluster. Each application, including the HiveMQ MQTT broker, can be organized into its own directory with clear naming conventions, making it easy to manage and update configurations.

To enable Argo CD to access the GitHub repository, we need to give it credentials. In this case, we use a GitHub deploy key, an SSH key that is attached to a single repository and grants read-only access by default. Deploy keys are ideal for GitOps scenarios, as they provide secure, limited access for tools like Argo CD. Once created, the private half of this deploy key will be added to Argo CD’s configuration, allowing it to pull updates and synchronize the cluster with the latest changes in the repository.

For detailed instructions on creating a deploy key, refer to the GitHub documentation on deploy keys. With the repository structured and secured via a deploy key, Argo CD can now monitor for changes and continuously deploy updates to the Kubernetes cluster, ensuring that our configurations remain in sync with the declared state in Git.

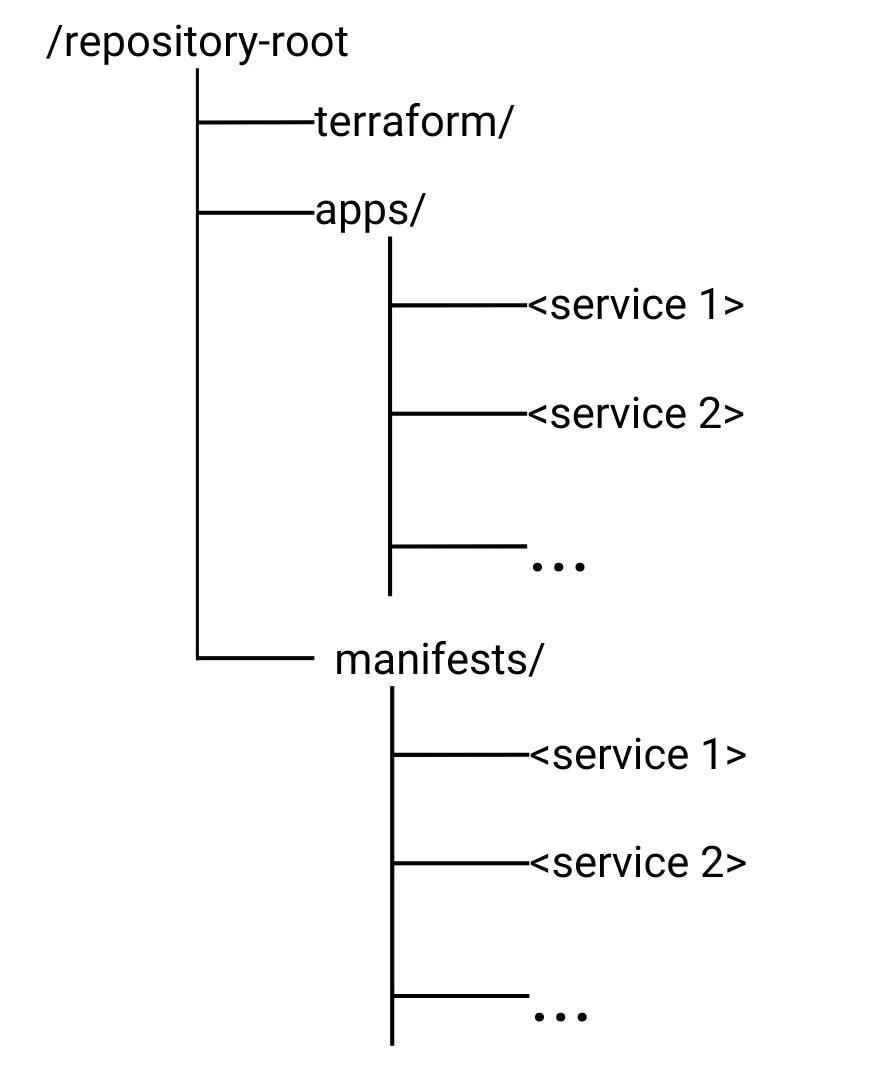

Organizing the Git Repository for Effective Application Management

To ensure clean organization and separation of applications and services, the Git repository should follow a structured layout. This layout not only helps in managing multiple applications but also supports Argo CD’s concept of grouping Kubernetes resources and manifests under distinct Argo CD Applications. Here is a recommended structure:

terraform/: This directory contains all files related to Terraform configurations. These files define the infrastructure setup, including the bootstrapping of Argo CD and any other required resources. By keeping Terraform files in a dedicated directory, we maintain a clear separation between infrastructure code and application configurations, making the repository more manageable and reproducible.

apps/: This directory is dedicated to Argo CD Application definitions. Each Argo CD Application represents a service to be deployed, e.g. the HiveMQ Broker. Ideally, this is a Helm deployment, but it can be of any type that is supported by Argo CD (Kubernetes manifests, Kustomize, etc.). Each apps/<service> folder typically contains two Argo CD applications: one for the main service deployment, called <service>.yaml, and one for additional manifests belonging to the service, such as dependencies or configuration, called <service>-manifests.yaml. These manifests are located in the manifests/<service> folder. This approach enables Argo CD to monitor and synchronize each application independently, simplifying management and scalability.

manifests/: This directory holds all Kubernetes manifests for each service application managed by Argo CD. Inside manifests/, subdirectories for each application or service can be created, containing the necessary deployment files, ConfigMaps, Services, and any other Kubernetes resources needed. By storing manifests here, we can easily track and update the exact state of each application and ensure that changes are centrally managed through Git.

This repository structure makes it easy to maintain a clear separation between infrastructure, Argo CD application definitions and the specific Kubernetes manifests each application requires. With this setup, Argo CD can monitor the apps/ directory for application configuration changes, while the manifests/ directory contains the granular resource definitions that each Argo CD Application will manage within the Kubernetes cluster.

Streamlining Deployment Process Using Terraform

As previously discussed, Terraform is used here to provide a consistent and automated way to set up the environment from scratch. In this setup, Terraform takes care of deploying Argo CD, configuring it to connect to the Git repository, and setting up a special Argo CD Application called the Bootstrap application.

The Bootstrap application serves as a foundational component in the GitOps pipeline. Its primary role is to manage the deployment of all subsequent applications stored in the apps/ folder. By defining the Bootstrap application through Terraform, we ensure that this initial configuration is part of the automated setup process and easily reproducible in any environment. Once the Bootstrap application is up, it will automatically monitor the apps/ folder for new Argo CD Application definitions, triggering the deployment of each defined service or application within the Kubernetes cluster.

This Terraform-based setup not only streamlines the deployment process for Argo CD but also reduces manual intervention by fully automating the initial and ongoing application synchronization process with the Kubernetes cluster.

For this example, we will stick to the Terraform style guide and create the following files:

A backend.tf file that contains your backend configuration

A main.tf file that contains all resource and data source blocks

An outputs.tf file that contains all output blocks in alphabetical order

A providers.tf file that contains all provider blocks and configuration

A terraform.tf file that contains a single terraform block, which defines your required_version and required_providers

A variables.tf file that contains all variable blocks in alphabetical order
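To make the terraform.tf entry more concrete, here is a minimal sketch of what such a file could look like for this setup. The version constraints are assumptions chosen for illustration and are not taken from the project. The Helm provider is pinned to the 2.x line because the nested kubernetes { } block used in this article is the 2.x syntax; to our knowledge, the 3.x line expects an attribute instead. Since a module may declare required_providers only once, a terraform block kept in another file would have to move here.

```hcl
# terraform.tf - a sketch; all version constraints below are assumptions
terraform {
  required_version = ">= 1.5.0"

  required_providers {
    helm = {
      source  = "hashicorp/helm"
      version = "~> 2.0" # assumed; keeps the nested kubernetes { } block syntax
    }
    kubernetes = {
      source  = "hashicorp/kubernetes"
      version = "~> 2.0" # assumed
    }
    local = {
      source  = "hashicorp/local"
      version = "~> 2.0" # assumed
    }
  }
}
```

Pinning major versions keeps terraform init from silently picking up a provider release with breaking changes when the setup is reproduced on a new machine.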

Leveraging Terraform Providers to Manage Kubernetes

In Terraform, Providers are plugins that enable interaction with various external platforms and services. Providers allow Terraform to manage resources by abstracting the APIs of these services, enabling users to define resources in a consistent way across different platforms. Each provider corresponds to a specific service, such as cloud platforms, databases, or, in our case, Kubernetes and Helm.

Providers are essential in defining the types of resources Terraform can manage within a configuration. By specifying providers in the Terraform setup, we can leverage their functionality to deploy resources consistently and reproducibly.

For our example setup, we need two providers:

Kubernetes Provider: The Kubernetes provider allows Terraform to manage Kubernetes resources directly within a cluster. Through this provider, Terraform can define ConfigMaps, namespaces, and other Kubernetes resources required to set up Argo CD and the Bootstrap application. Check out Kubernetes Provider documentation.

Helm Provider: The Helm provider allows Terraform to deploy and manage Helm charts in Kubernetes. Helm charts are pre-packaged collections of Kubernetes resources that can simplify the deployment of complex applications. Using the Helm provider, Terraform can install Argo CD as a Helm chart, ensuring all necessary resources are set up consistently. Check out Helm Provider documentation.

By using these providers, our Terraform configuration can create all necessary Kubernetes resources and deploy Argo CD in a streamlined and automated manner. This approach further reinforces the infrastructure-as-code and GitOps principles by making the deployment environment entirely code-driven and repeatable.

providers.tf

```hcl
terraform {
  required_providers {
    local = {
      source = "hashicorp/local"
    }
  }
}

# Connects to the cluster selected by the kubeconfig file and context
provider "kubernetes" {
  config_path    = var.kubeconfig_path
  config_context = var.config_context
}

# The Helm provider reuses the same kubeconfig file and context
provider "helm" {
  kubernetes {
    config_path    = var.kubeconfig_path
    config_context = var.config_context
  }
}
```

For our example, we’ll configure the Kubernetes and Helm providers using a local configuration file and define this file path as a variable. This setup adds flexibility, making it easy to adapt the configuration for different environments.

Kubernetes Provider Configuration: The Kubernetes provider requires a kubeconfig file to authenticate and connect with the Kubernetes cluster. In our setup, we’ll define the kubeconfig file path as a variable (kubeconfig_path), which points to a local kubeconfig file. This allows us to switch between different clusters by simply changing the variable value. Additionally, we specify the config_context as a variable to select the desired context within the kubeconfig file. This enables precise targeting of the appropriate Kubernetes cluster in multi-cluster setups.

Example configuration for the Kubernetes provider:

```hcl
provider "kubernetes" {
  config_path    = var.kubeconfig_path
  config_context = var.config_context
}
```

Helm Provider Configuration: Similar to the Kubernetes provider, the Helm provider uses the same kubeconfig file to connect to the Kubernetes cluster. By defining the kubeconfig path as a variable, we ensure flexibility in targeting different clusters without hardcoding the connection details. This setup also allows us to specify the desired context within the kubeconfig file, ensuring Helm operates within the intended Kubernetes environment.

Example configuration for the Helm provider:

```hcl
provider "helm" {
  kubernetes {
    config_path    = var.kubeconfig_path
    config_context = var.config_context
  }
}
```

By managing kubeconfig_path and config_context as variables, our configuration is flexible and adaptable to different environments or clusters. This approach is especially useful in scenarios where multiple Kubernetes clusters are involved, allowing for quick adjustments to the Terraform configuration.
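The two variables referenced by the provider blocks need matching declarations. The following is a minimal sketch of how they could be declared in the root module’s variables.tf; the descriptions and the default path are assumptions for illustration.

```hcl
# variables.tf (root module) - a sketch; descriptions and default are assumptions

variable "config_context" {
  description = "Context within the kubeconfig file that selects the target cluster"
  type        = string
}

variable "kubeconfig_path" {
  description = "Path to the kubeconfig file used by the Kubernetes and Helm providers"
  type        = string
  default     = "../kubeconfig" # assumed default location
}
```

Values can then be supplied per environment, for example through a separate .tfvars file passed with terraform apply -var-file.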

Handling Terraform State

In Terraform, state is a critical concept used to keep track of the resources it manages. Terraform stores information about the deployed infrastructure in a state file, allowing it to map real-world resources to the configuration defined in the code. This state enables Terraform to detect changes, manage dependencies, and determine the actions required to update the infrastructure.

To store this state file, Terraform uses backends. By default, the state file is stored locally, but in collaborative environments, this can lead to issues with consistency and team coordination. For team-based workflows, a remote state is recommended. Remote state allows the state file to be stored centrally, ensuring that multiple team members can access the latest state, avoid conflicts, and maintain consistency across deployments.

Kubernetes Backend

In our demo, we’ll use the Kubernetes backend to store Terraform’s remote state directly within the Kubernetes cluster. By storing the state in Kubernetes, we gain the benefits of centralized state management without relying on external services. This backend stores the state as a Kubernetes Secret and uses a Lease resource for state locking, providing an accessible location within the cluster itself whose access is governed by the cluster’s own permissions. This setup ensures that the Terraform state is centrally managed, protected against concurrent writes, and accessible to all team members working with the same cluster.

Using the Kubernetes backend helps us achieve a consistent and reliable deployment environment where every change is tracked and shared, enhancing collaboration and transparency within the team.

```hcl
terraform {
  backend "kubernetes" {
    secret_suffix = "state"
    config_path   = "../kubeconfig"
  }
}
```

NOTE: Unfortunately, variables cannot be used with Terraform backends. So keep this in mind when working with different clusters, as config_path needs to be adjusted manually!
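One way to avoid editing backend.tf by hand for every cluster is Terraform’s partial backend configuration, where cluster-specific settings live in a small file that is passed to terraform init. The sketch below shows such a file; the file name and the context name are hypothetical.

```hcl
# dev.k8s.tfbackend (hypothetical file name), passed with:
#   terraform init -backend-config=dev.k8s.tfbackend
# The settings below are merged into the backend "kubernetes" block at init
# time, so config_path can be left out of backend.tf itself.
config_path    = "../kubeconfig"
config_context = "dev-cluster" # hypothetical context name
```

With one such file per cluster, switching targets becomes a matter of re-running terraform init with a different -backend-config argument.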

Deploying Argo CD with Terraform

With the prerequisites in place, we’re ready to start deploying Argo CD. To keep our Terraform code modular and reusable, we’ll encapsulate each deployment in a separate Terraform module. This best-practice approach allows us to organize resources by function, making the code more maintainable and easier to scale. Each module is stored within the modules directory and follows a standardized file and folder structure. For our Argo CD deployment, we’ll create a dedicated module at modules/argocd.

Within the modules/argocd folder, the following common files and structure help keep the module clean, organized, and flexible:

main.tf: The primary Terraform file, defining all resources necessary for deploying Argo CD, such as the Helm chart or individual Kubernetes manifests.

variables.tf: A file defining input variables, making it possible to pass in configurable values like the namespace, Helm chart version, or other parameters that may vary across environments.

outputs.tf: This file specifies the module’s outputs, allowing other parts of the Terraform configuration to access important values such as the Argo CD URL or other relevant outputs.

bootstrap-app.yaml: This file contains the definition of the Bootstrap Application, which will essentially be responsible for deploying all subsequent Argo CD Applications.

By encapsulating the Argo CD deployment into a module, we make it reusable and configurable, so it can be easily incorporated into different Terraform setups or modified without disrupting the main codebase. This structure not only supports better code organization but also enhances flexibility and reusability, aligning well with infrastructure-as-code best practices.
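To illustrate how the module could be wired into the root configuration, here is a minimal sketch. The variable names match those used by the module’s resources in this article; the descriptions, and the assumption that the root module declares variables of the same names, are ours.

```hcl
# modules/argocd/variables.tf - inputs of the module (descriptions are assumptions)

variable "git_repository_key_path" {
  description = "Path to the private SSH deploy key file on the local machine"
  type        = string
}

variable "git_repository_url" {
  description = "SSH URL of the Git repository that Argo CD should track"
  type        = string
}
```

```hcl
# main.tf (root module) - assumes the root module declares variables
# with the same names and passes them through

module "argocd" {
  source = "./modules/argocd"

  git_repository_key_path = var.git_repository_key_path
  git_repository_url      = var.git_repository_url
}
```

Keeping the module inputs this small means a second environment only needs a different repository URL and key path to reuse the same module.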

In the following sections, we’ll define the Terraform code for deploying Argo CD using this module and configure it to connect with the GitHub repository using the deploy key created earlier.

In the main.tf file within the modules/argocd module, we define the resources necessary to set up Argo CD and configure it to access our GitHub repository securely. One important resource here is the data resource that reads the SSH key used to authenticate Argo CD with the repository.

Key Resources in main.tf

Data Resource: repository_deploy_key

In Terraform, data resources are used to retrieve information defined outside the Terraform configuration or generated by other processes. Unlike resource blocks, data resources do not create or manage infrastructure directly; instead, they fetch existing data that Terraform can use within its configuration.

We use a data resource of type local_file to read the SSH key file, which was previously created and added as a deploy key to our Git repository. If you do not want to, or are not allowed to, store the deploy key on your local file system, there are other options, such as reading it from a password manager or a central secret management system. This SSH key allows Argo CD to access the Git repository securely and synchronize the desired application state. By storing the key locally and using Terraform to load it into the deployment, we ensure the key can be consistently applied within the Kubernetes cluster where Argo CD will run.

```hcl
data "local_file" "repository_deploy_key" {
  filename = var.git_repository_key_path # Path to the SSH key file as defined in a variable
}
```

NOTE: As the deploy key grants access to your repository, make sure NOT to commit it to the repository but to store it securely in another location!

In this snippet, var.git_repository_key_path is a variable pointing to the location of the SSH key file on disk. The local_file data source reads the file contents, allowing us to pass the SSH key as a Kubernetes Secret for Argo CD’s use.

This data resource is essential for enabling Argo CD to authenticate with the Git repository using the deploy key, eliminating the need for manual intervention and enhancing the security and consistency of repository access within the cluster. The contents read by this data resource can then be used to create an Argo CD repository configuration, which essentially is a Kubernetes Secret.
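As a possible variation, assuming a reasonably recent version of the hashicorp/local provider, the local_sensitive_file data source reads the file in the same way but marks its content as sensitive. This is a sketch of an alternative, not what the example project uses.

```hcl
# Variation of the data source above: local_sensitive_file marks the file
# content as sensitive, so Terraform redacts it in plan and apply output.
data "local_sensitive_file" "repository_deploy_key" {
  filename = var.git_repository_key_path
}

# References to the key then change accordingly, for example:
#   data.local_sensitive_file.repository_deploy_key.content
```

Note that marking a value as sensitive only affects what Terraform prints; the key is still written to the Terraform state, so access to the state should be restricted accordingly.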

Resource: argocd_namespace

In Kubernetes, namespaces are logical partitions within a cluster, used to organize and isolate resources. Namespaces make it easier to manage large clusters by allowing resources to be grouped by environment (e.g., dev, staging, production), application, or team, ensuring that resources don’t inadvertently interact with one another.

For our Argo CD setup, we create a dedicated namespace to isolate all related resources:

```hcl
resource "kubernetes_namespace" "argocd_namespace" {
  metadata {
    name = "argocd"
  }
}
```

Resource: argocd

The argocd resource is a helm_release that deploys the Argo CD Helm chart to our Kubernetes cluster. Using Helm allows us to manage the deployment of Argo CD in a streamlined way, with configurable parameters that make it easy to customize and update.

We could pass custom values to the Helm chart to modify Argo CD’s configuration. This can be done through the values field, which takes a list of YAML documents as strings. Terraform’s yamlencode function lets us write the overrides as a map and convert them to YAML.

While our example doesn’t require any additional parameters, this flexibility is useful for customizing Argo CD as needed. For instance, we could set the server.insecure parameter for the Argo CD server, which may be necessary when exposing Argo CD through an ingress with TLS termination. This would allow the Argo CD server to accept plain HTTP connections, deferring encryption to the ingress.

Example snippet for setting custom values:

```hcl
resource "helm_release" "argocd" {
  name       = "argocd"
  repository = "https://argoproj.github.io/argo-helm"
  chart      = "argo-cd"
  namespace  = kubernetes_namespace.argocd_namespace.metadata[0].name

  # Optional values for further customization
  values = [
    yamlencode({
      configs = {
        params = {
          # Example to enable HTTP for Argo CD server when using TLS termination at ingress.
          # Key path as used by recent argo-cd chart versions; check the chart's values.yaml.
          "server.insecure" = true
        }
      }
    })
  ]
}
```

Using the values field allows us to tailor the Argo CD deployment to meet specific needs within the cluster, making the deployment more adaptable to different environments and requirements.

In this helm_release resource, we define key parameters required to deploy Argo CD, including the Helm chart repository, chart name, deployment name, and target namespace.

```hcl
resource "helm_release" "argocd" {
  name       = "argocd"                                               # Name of the release in Kubernetes
  repository = "https://argoproj.github.io/argo-helm"                 # Repository URL for the Argo CD Helm chart
  chart      = "argo-cd"                                              # Chart name within the repository
  namespace  = kubernetes_namespace.argocd_namespace.metadata[0].name # Namespace where Argo CD will be deployed
}
```

name: Sets the name of the Helm release in Kubernetes. Here, it’s set to "argocd", which will be used as the name for Argo CD resources within the cluster.

repository: Specifies the URL of the Helm chart repository, in this case pointing to the official Argo CD Helm chart repository.

chart: Defines the specific chart to be deployed, here set to "argo-cd", which contains all required resources for deploying Argo CD.

namespace: References the namespace resource created earlier, so Argo CD is deployed in its dedicated namespace.

This Helm release resource provides a convenient and modular way to install and configure Argo CD within Kubernetes. By leveraging the Helm chart, we can easily manage versioning, updates, and configuration parameters, making it simple to customize the Argo CD deployment as needed.
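The release shown above installs whatever chart version is current at apply time. For reproducible deployments, the chart version can be pinned through the version argument of helm_release. The following is a sketch of that variation; the variable name argocd_chart_version is hypothetical and not part of the example project.

```hcl
# Hypothetical input variable; could live in modules/argocd/variables.tf
variable "argocd_chart_version" {
  description = "Version of the argo-cd Helm chart to install"
  type        = string
}

# Same release as above, with the chart version pinned
resource "helm_release" "argocd" {
  name       = "argocd"
  repository = "https://argoproj.github.io/argo-helm"
  chart      = "argo-cd"
  version    = var.argocd_chart_version
  namespace  = kubernetes_namespace.argocd_namespace.metadata[0].name
}
```

An upgrade of Argo CD then becomes an explicit, reviewable change of a single value rather than a side effect of the next apply.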

Resource: argocd_gitops_repository

The argocd_gitops_repository resource is of type kubernetes_secret and represents the secure connection from Argo CD to the Git repository where our application configurations are stored. This Kubernetes Secret provides the credentials and information Argo CD needs to authenticate with the repository, enabling it to pull configurations and sync them to the Kubernetes cluster.

The argocd_gitops_repository resource defines key attributes necessary for connecting to the Git repository. It references the repository_deploy_key data resource described earlier to securely inject the SSH private key, which enables Argo CD to authenticate with the Git repository via SSH.

```hcl
resource "kubernetes_secret" "argocd_gitops_repository" {
  metadata {
    name      = "argocd-gitops-repository"
    namespace = kubernetes_namespace.argocd_namespace.metadata[0].name
    labels = {
      # Tells Argo CD to treat this Secret as a repository definition
      "argocd.argoproj.io/secret-type" = "repository"
    }
  }

  data = {
    "name"          = "gitops-demo-repository"
    "url"           = var.git_repository_url
    "type"          = "git"
    "sshPrivateKey" = data.local_file.repository_deploy_key.content
  }
}
```

name: The unique identifier Argo CD will use for this GitOps repository connection.

url: The URL of the Git repository, provided as a variable (git_repository_url) to allow flexibility and reuse.

type: Specifies the repository type. Here, it’s set to "git", indicating that this is a Git repository.

sshPrivateKey: The SSH private key content, retrieved securely from the repository_deploy_key data resource.

Argo CD will use this Secret to authenticate and pull application definitions, supporting continuous synchronization with the desired state defined in Git.

Resource: argocd_bootstrap_app

The final resource for deploying Argo CD is the argocd_bootstrap_app, an Argo CD Application responsible for bootstrapping the environment. Known as the Bootstrap App, this application uses the "app-of-apps" pattern, a commonly used pattern with Argo CD that allows a single parent application to manage multiple child applications. This setup enables Argo CD to deploy and manage a collection of applications from a central point, streamlining multi-application management.

To implement this pattern, we can use the argocd-apps Helm chart provided by Argo CD, which allows us to define the bootstrap application configuration in a separate YAML file, such as bootstrap-app.yaml. This approach is essential because the kubernetes_manifest resource of the Kubernetes provider for Terraform looks up the resource type in the cluster at plan time, including types defined by Custom Resource Definitions (CRDs). Without Argo CD and its CRDs being deployed first, Terraform’s validation would fail, creating a chicken-and-egg problem.

By using the argocd-apps Helm chart and defining the Bootstrap App in a YAML file, we can avoid this issue. The YAML file can be passed directly through the values property in the Helm chart, allowing us to defer CRD validation until Argo CD is fully deployed.

Example configuration for the Bootstrap App:

```hcl
resource "helm_release" "argocd_bootstrap_app" {
  depends_on = [helm_release.argocd]

  name       = "argocd-bootstrap-app"
  repository = "https://argoproj.github.io/argo-helm"
  chart      = "argocd-apps"
  namespace  = kubernetes_namespace.argocd_namespace.metadata[0].name

  values = [
    # Load bootstrap application from yaml file and pass repo url variable
    templatefile("${path.module}/bootstrap-app.yaml", {
      repo_url = var.git_repository_url
    })
  ]
}
```

In this setup:

depends_on: Specifies that argocd_bootstrap_app depends on helm_release.argocd. This guarantees that the Bootstrap App is deployed only after Argo CD is fully set up.

values: The values key takes the rendered content of bootstrap-app.yaml, which defines the Bootstrap App. The Bootstrap App in turn deploys the child applications, aligning with the app-of-apps pattern.

templatefile: Reads the bootstrap-app.yaml file and replaces the ${repo_url} placeholder with the value of var.git_repository_url.

Using the argocd-apps Helm chart and specifying the configuration in a YAML file simplifies the setup and ensures Terraform can deploy the entire environment in a stable, repeatable manner.

For more on the app-of-apps pattern, see Argo CD’s documentation.

terraform/modules/argocd/bootstrap-app.yaml

```yaml
applications:
  demo-bootstrap-app:
    namespace: argocd
    project: default
    source:
      repoURL: ${repo_url}
      targetRevision: HEAD
      path: apps
      directory:
        recurse: true
    destination:
      name: in-cluster
    syncPolicy:
      automated:
        prune: true
        selfHeal: true
      syncOptions:
        - CreateNamespace=true
```

Our Bootstrap Application is an Argo CD Application that follows a straightforward structure. It uses the "app-of-apps" pattern to deploy all child applications defined in the apps folder, enabling Argo CD to manage multiple applications from a central point. This setup simplifies application deployment and management, especially in large environments with many applications or services.

In our example, all applications will be deployed to the same cluster where Argo CD itself is running. We set the destination cluster to "in-cluster" to ensure the applications are managed within the same Kubernetes environment.

Explanation of Key Sections:

repoURL: This references the Git repository URL where the apps folder is located, dynamically populated using the Terraform variable.

path: Points to the apps folder, which Argo CD will use to recursively find and deploy child applications.

destination.name: Set to "in-cluster", the name under which Argo CD registers the cluster it is running in (API server address "https://kubernetes.default.svc"). This means it will deploy resources within the current Kubernetes cluster.

syncPolicy: Enables automated synchronization with the prune and selfHeal options.

prune: Automatically removes resources that are no longer defined in the Git repository.

selfHeal: Continuously monitors and re-applies the desired state, ensuring that applications remain in sync with the configuration in Git.

With this setup, the Bootstrap Application in Argo CD will automatically discover and deploy any applications defined in the apps folder, all within the same cluster where Argo CD is deployed. This configuration supports a GitOps workflow by enabling the Bootstrap Application to dynamically manage new or updated child applications, making deployments simple, scalable, and maintainable.

terraform/modules/argocd/main.tf

```hcl
data "local_file" "repository_deploy_key" {
  filename = var.git_repository_key_path
}

resource "kubernetes_namespace" "argocd_namespace" {
  metadata {
    name = "argocd"
  }
}

resource "helm_release" "argocd" {
  name       = "argocd"
  repository = "https://argoproj.github.io/argo-helm"
  chart      = "argo-cd"
  namespace  = kubernetes_namespace.argocd_namespace.metadata[0].name
}

resource "kubernetes_secret" "argocd_gitops_repository" {
  metadata {
    name      = "argocd-gitops-repository"
    namespace = kubernetes_namespace.argocd_namespace.metadata[0].name
    labels = {
      "argocd.argoproj.io/secret-type" = "repository"
    }
  }

  data = {
    "name"          = "gitops-demo-repository"
    "url"           = var.git_repository_url
    "type"          = "git"
    "sshPrivateKey" = data.local_file.repository_deploy_key.content
  }
}

resource "helm_release" "argocd_bootstrap_app" {
  depends_on = [helm_release.argocd]

  name       = "argocd-bootstrap-app"
  repository = "https://argoproj.github.io/argo-helm"
  chart      = "argocd-apps"
  namespace  = kubernetes_namespace.argocd_namespace.metadata[0].name

  values = [
    templatefile("${path.module}/bootstrap-app.yaml", {
      repo_url = var.git_repository_url
    })
  ]
}
```

Handling Secrets with Sealed Secrets to Safeguard GitOps Environment

In a GitOps workflow, managing sensitive data such as passwords, API keys, and certificates poses unique challenges. Since GitOps relies on storing the entire infrastructure and application configuration, including Kubernetes manifests, in a Git repository, the question arises: how do we keep secret data secure in a system designed for transparency?

Storing sensitive information in plaintext within a Git repository is highly insecure, as it exposes secret values to anyone with access to the repository and increases the risk of accidental leaks. To address this, a solution like Sealed Secrets becomes essential for securely managing secrets in a GitOps environment.

Sealed Secrets from Bitnami provides secure, encrypted handling of Kubernetes Secrets through a custom resource called SealedSecret. A SealedSecret is an encrypted Kubernetes Secret that can be stored safely in a Git repository. Only the controller running in the target cluster holds the private key, so only that cluster can decrypt a SealedSecret and convert it into a regular Kubernetes Secret during deployment. The private key, and with it the decrypted data, stays inside the cluster.

Why Use Sealed Secrets?

Security: By encrypting secrets before they are committed to Git, Sealed Secrets ensures that sensitive data is never stored in plaintext, protecting it from unauthorized access.

Automation-Friendly: SealedSecrets integrate well with GitOps processes, enabling secure storage of secrets that can be managed and version-controlled along with other application configurations.

Cluster-Specific Encryption: Sealed Secrets uses an asymmetric key pair, where only the cluster holds the private key to decrypt secrets, ensuring that SealedSecrets can only be used on the intended cluster.

Sealed Secrets thus provides a secure and GitOps-compatible way to manage secrets, enabling safe, automated deployment pipelines without compromising sensitive information.

Sealed Secrets Terraform Module

As security is paramount when dealing with sensitive information in a GitOps workflow, Sealed Secrets is also integrated and deployed as part of the Terraform infrastructure. To ensure a seamless and secure process, we have included a dedicated module named sealed-secrets within the project, following the default file layout. This module leverages a Terraform helm_release resource to deploy the Sealed Secrets Helm chart to the Kubernetes cluster.

By deploying Sealed Secrets via Terraform, we can ensure that the solution is fully automated and part of the same declarative infrastructure code, making it easier to manage and maintain in conjunction with other infrastructure resources.

In the sealed-secrets module, we define a helm_release resource that installs the Sealed Secrets controller into the Kubernetes cluster. The module follows the typical structure and naming conventions as seen in the other modules within the project, ensuring consistency and ease of use.

Example sealed-secrets module in Terraform (main.tf):

    module "sealed-secrets" {
      source = "./modules/sealed-secrets"
    }

Inside the modules/sealed-secrets folder, we define the helm_release resource to deploy the Sealed Secrets controller:

    resource "helm_release" "sealed_secrets" {
      name       = "sealed-secrets"
      repository = "https://bitnami-labs.github.io/sealed-secrets"
      chart      = "sealed-secrets"
      namespace  = "kube-system"

      # Name the controller "sealed-secrets-controller", the name kubeseal looks for by default.
      set {
        name  = "fullnameOverride"
        value = "sealed-secrets-controller"
      }
    }

This Terraform configuration ensures that:

Sealed Secrets is deployed and managed as part of the infrastructure, ensuring consistency and security.

Secrets management is fully integrated into the Terraform-based deployment pipeline, making it part of the same GitOps process.

By incorporating Sealed Secrets into the Terraform deployment, we ensure that secret management is both secure and automated, giving teams confidence in their GitOps workflow and the integrity of sensitive data.

Check out the documentation to learn how to create SealedSecrets using the kubeseal tool.
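As a rough sketch of what that workflow can look like, the helper below fetches the controller's public certificate once and then seals a Secret manifest with it. The function name seal_with_cert, the CERT_FILE variable, and the file name pub-cert.pem are made up for illustration; the controller name and namespace are the ones set in the Terraform module above. Check the kubeseal documentation for the flags supported by your version.

```shell
# Hypothetical helper: seal a plain Secret manifest with the cluster's public certificate.
# Usage: seal_with_cert <secret.yaml> <sealedsecret.yaml>
seal_with_cert() {
  local secret_file="$1"
  local sealed_file="$2"
  local cert_file="${CERT_FILE:-pub-cert.pem}"   # assumed local file name

  # Fetch the public certificate from the controller only if we do not have it yet.
  if [ ! -s "$cert_file" ]; then
    kubeseal --fetch-cert \
      --controller-name sealed-secrets-controller \
      --controller-namespace kube-system > "$cert_file" || return 1
  fi

  # Encrypt with the public certificate; this step needs no cluster access.
  kubeseal --cert "$cert_file" --format yaml \
    -f "$secret_file" -w "$sealed_file"
}
```

Because only the public certificate is needed for encryption, the sealing step can also run on a machine or CI job that has no access to the cluster.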

Putting It All Together

In this setup, we have organized our infrastructure deployment using Terraform, dividing the configuration into modular components for easy management and reuse. The two primary Terraform modules in this setup—argocd and sealed-secrets—cover the essential components needed to enable a secure GitOps workflow on Kubernetes.

1. Argo CD Module: This module sets up Argo CD, our GitOps engine, by:

Deploying Argo CD via a Helm chart.

Configuring a Bootstrap Application following the app-of-apps pattern, which deploys all subsequent applications from the apps folder to the Kubernetes cluster.

Establishing secure access to our Git repository by creating a Kubernetes Secret with the SSH deploy key, allowing Argo CD to synchronize applications from Git.

2. Sealed Secrets Module: This module provides a secure solution for handling sensitive data by:

Deploying the Sealed Secrets controller through a Helm chart, enabling encrypted secret storage in Git.

Allowing teams to manage and version control secrets securely alongside other Kubernetes manifests.

Together, these modules form the foundation of a robust GitOps pipeline. By deploying Argo CD and Sealed Secrets through Terraform, we ensure a consistent, repeatable, and secure infrastructure setup, enabling teams to manage applications and secrets within a Git-driven workflow. This modular approach not only streamlines deployments but also provides the flexibility to scale and adapt the setup to various environments and requirements.

main.tf

    module "sealed-secrets" {
      source = "./modules/sealed-secrets"
    }

    module "argocd" {
      source = "./modules/argocd"

      git_repository_url      = var.git_repository_url
      git_repository_key_path = var.git_repository_key_path
    }

variables.tf

    variable "kubernetes_context" {
      description = "The Kubernetes context to use."
      default     = "default"
    }

    variable "kubernetes_config" {
      description = "The path to your Kubernetes config file to use."
      default     = "./../kubeconfig"
    }

    variable "git_repository_url" {
      description = "The URL of the Git repository to use"
      type        = string
    }

    variable "git_repository_key_path" {
      description = "The path to the private key for the Git repository"
      type        = string
    }

Running the Deployment with Terraform

With the infrastructure modules configured, we’re ready to deploy our setup to the Kubernetes cluster using Terraform. Terraform’s plan and apply commands will help us preview and execute the deployment, ensuring all resources are correctly defined and any changes are accurately reflected in the environment.

Step 1: Run terraform plan

The terraform plan command generates an execution plan, allowing us to see which resources will be created, modified, or deleted without making any actual changes. Running this command helps verify that the configuration aligns with expectations and that no unintended changes will be applied.

    terraform plan

This command will output a summary of the changes Terraform intends to make. Review the plan carefully to ensure it’s correct. For example, you should see the Argo CD and Sealed Secrets Helm releases, along with any Kubernetes resources like namespaces and secrets, ready to be created.

Step 2: Run terraform apply

Once you’re confident in the plan, use terraform apply to execute the deployment. This command will apply the configuration and create all specified resources in the Kubernetes cluster.

    terraform apply

Terraform will prompt for confirmation before proceeding. After you confirm, Terraform will proceed to deploy Argo CD, Sealed Secrets, and any other configured resources to the cluster.
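The variables git_repository_url and git_repository_key_path have no defaults, so Terraform asks for them interactively unless they are supplied another way. One possible approach, sketched below, is to write them to a terraform.tfvars file (which Terraform loads automatically) and to save the plan to a file so that apply executes exactly what was reviewed. The function names and the plan file name tfplan are made up for illustration.

```shell
# Hypothetical helper: write terraform.tfvars for the two required variables.
# Usage: write_tfvars <git_repository_url> <path_to_private_key>
write_tfvars() {
  cat > terraform.tfvars <<EOF
git_repository_url      = "$1"
git_repository_key_path = "$2"
EOF
}

# Hypothetical helper: plan into a file, then apply exactly that saved plan.
plan_and_apply() {
  terraform init || return 1
  terraform plan -out=tfplan || return 1
  terraform apply tfplan
}
```

A call could look like write_tfvars "git@github.com:<YOUR_ORG>/<YOUR_REPO>.git" "/path/to/deploy-key" followed by plan_and_apply. Note that applying a saved plan file does not ask for confirmation, so review the plan output before that step.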

Verifying the Deployment

After the terraform apply completes, you can verify the deployment by checking that:

1. Argo CD is running in the specified namespace (e.g., argocd).

2. Sealed Secrets is deployed and ready to manage encrypted secrets.

3. The Bootstrap Application is visible in Argo CD and has successfully started deploying the applications defined in the apps folder.
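These checks can be run from the command line. The following is a minimal sketch, assuming kubectl points at the target cluster; the function name verify_gitops_stack and the 120-second timeout are arbitrary choices, while the Deployment name comes from the fullnameOverride value set in the sealed-secrets module.

```shell
# Hypothetical helper that runs the three checks listed above.
verify_gitops_stack() {
  # 1. Argo CD pods in the argocd namespace
  kubectl get pods -n argocd || return 1

  # 2. Sealed Secrets controller (named via fullnameOverride in the Terraform module)
  kubectl rollout status deployment/sealed-secrets-controller \
    -n kube-system --timeout=120s || return 1

  # 3. Argo CD Application resources, including the bootstrap app and its children
  kubectl get applications.argoproj.io -n argocd
}
```

Calling verify_gitops_stack after terraform apply prints the pod list, waits for the controller rollout, and lists the Application resources with their sync and health status.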

Running terraform plan and terraform apply simplifies the deployment process, allowing you to manage infrastructure changes declaratively and track them in version control, ensuring that every change is documented, auditable, and reproducible.

Accessing the Argo CD UI

Once Argo CD is deployed, you can access its web UI to manage and monitor applications. The Argo CD UI provides a graphical interface to view the status of applications, trigger syncs, and review configurations directly. To access the UI, we’ll use port forwarding to expose the argocd-server service locally and retrieve the initial admin password from a Kubernetes Secret.

Step 1: Port Forwarding to the argocd-server Service

Argo CD’s server component (argocd-server) is responsible for the web UI and API access. By creating a port-forward to this service, we can access the UI on our local machine.

Run the following command to set up port forwarding:

    kubectl port-forward svc/argocd-server -n argocd 8080:443

This command forwards local port 8080 to port 443 of the argocd-server service within the argocd namespace. Once this is running, you can access the Argo CD UI by navigating to https://localhost:8080 in your web browser.

Step 2: Retrieving the Initial Admin Password

By default, Argo CD creates an initial admin user. The initial password for this admin user is stored in a Kubernetes Secret named argocd-initial-admin-secret. To retrieve this password, run:

    kubectl get secret argocd-initial-admin-secret -n argocd -o jsonpath="{.data.password}" | base64 -d

This command extracts the base64-encoded password from the Secret and decodes it, displaying the plaintext password. Use this password to log in as the admin user.

This setup allows you to securely access and interact with the Argo CD UI, providing a convenient way to visualize and control your GitOps deployments.

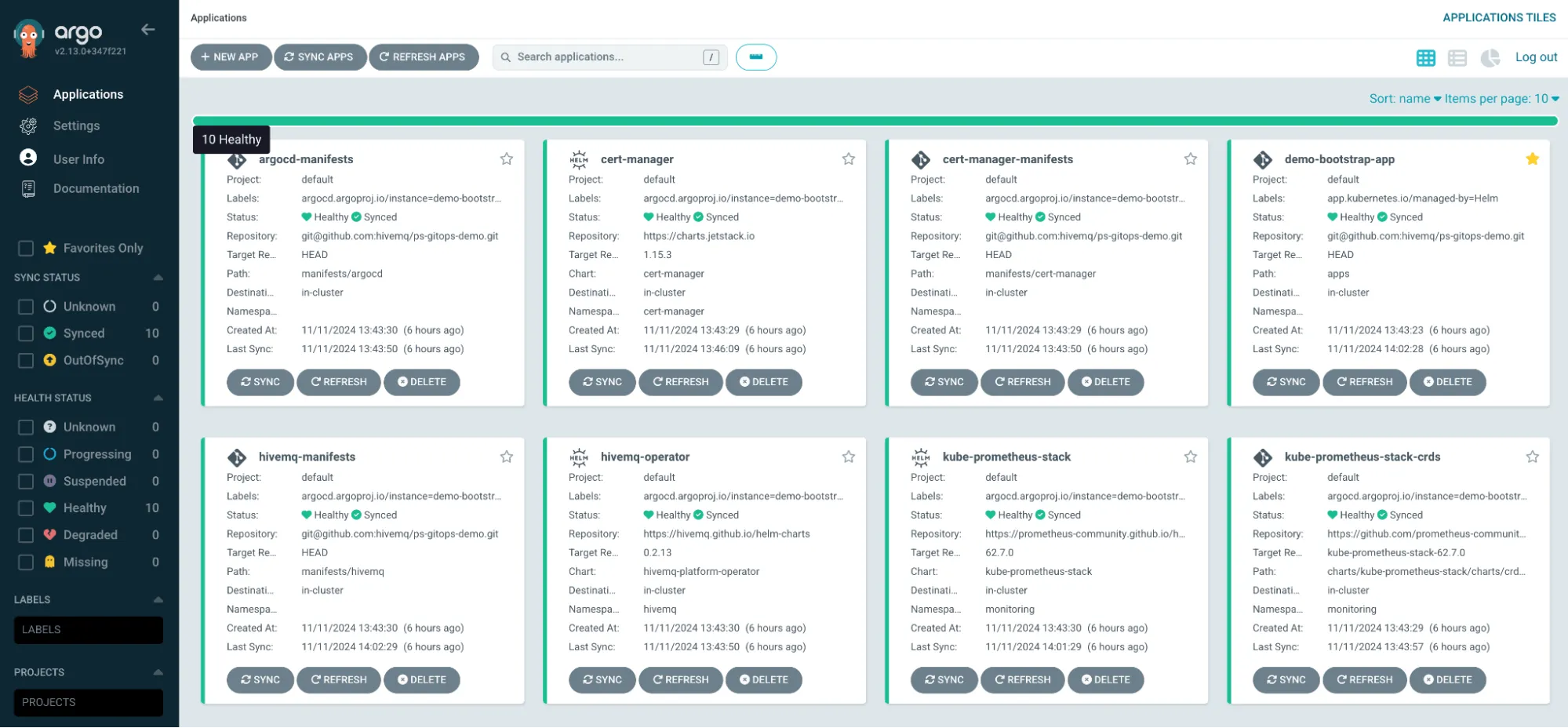

Argo CD web UI showing an overview of all applications.


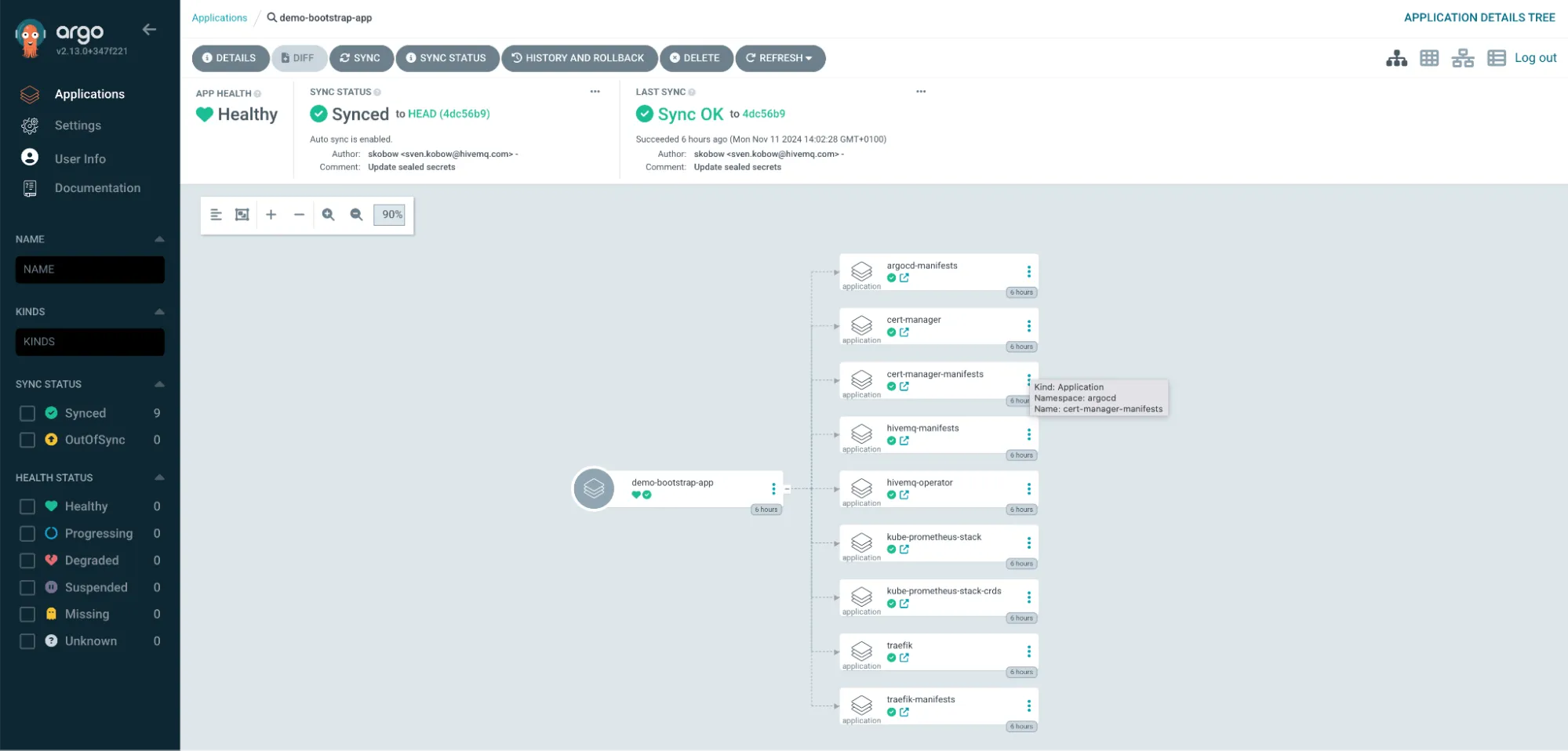

Argo CD web UI showing the detailed view of the demo-bootstrap-app and all its child resources.


Configure the HiveMQ MQTT Platform Deployment

In this section, we’ll set up a multi-node HiveMQ MQTT broker cluster configuration designed for high availability and load balancing.

Our setup follows these key objectives:

Multi-Node Cluster: Deploying multiple broker instances for scalability and fault tolerance.

MQTT Access: Exposing port 1883 for MQTT traffic.

Access to HiveMQ Control Center: Exposing the HiveMQ Control Center to monitor and manage the cluster.

In Part 2 of this series, you will learn how to secure MQTT traffic and access to the HiveMQ Control Center using TLS encryption. Stay tuned!

Configuration Overview

To implement this setup, we focus on two components:

HiveMQ Platform Operator Deployment: Using the HiveMQ Platform Operator for Kubernetes to manage multiple broker instances as a StatefulSet, which provides the stable identities and ordered scaling necessary for clustering.

HiveMQ Platform Deployment: Using the HiveMQ Platform Helm chart to configure and deploy the actual HiveMQ MQTT Platform and enable access to the MQTT port and the HiveMQ Control Center.

With these components in place, our HiveMQ MQTT Platform can handle client requests and administration. Integrating this deployment into our GitOps setup allows for centralized configuration management and consistency across deployments. Each component is managed through version-controlled manifests, ensuring our HiveMQ MQTT broker cluster is easily reproducible and scalable.

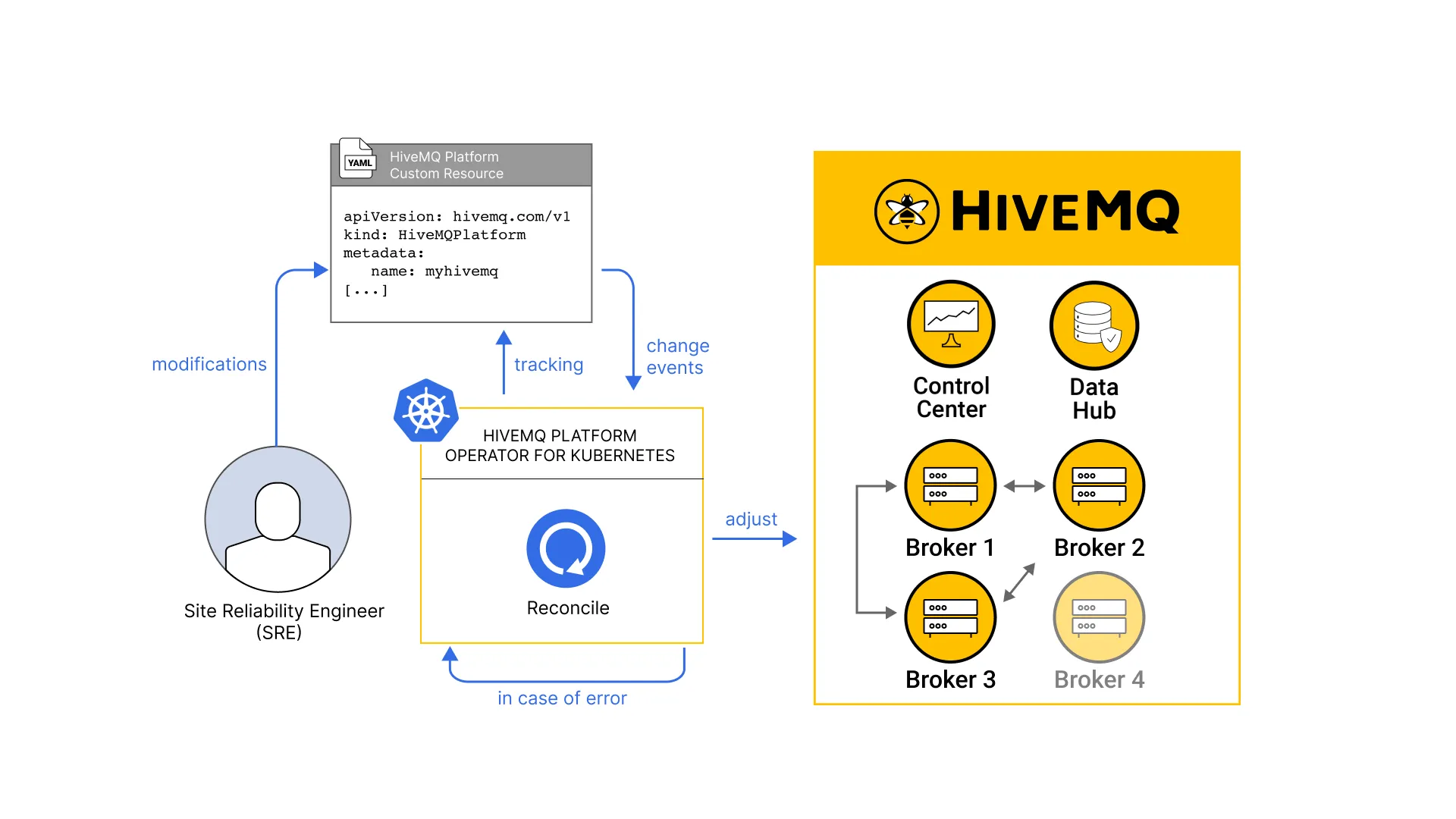

Deploy the HiveMQ Platform Operator for Kubernetes

The HiveMQ Platform Operator for Kubernetes is a tool for managing your HiveMQ deployments in a Kubernetes environment. The operator allows you to install, scale, configure, and monitor your HiveMQ Platform deployments in a versatile, adaptable manner.

The operator installs the HiveMQ Platform based on your configuration. When you make changes to the configuration, the operator automatically reconciles the changes with your running HiveMQ nodes, minimizing downtime and need for manual intervention. This automates many aspects of platform management for DevOps engineers and makes operational tasks such as platform upgrades, new component installation, or configuration updates far simpler.

The operator also provides logs, metrics, and Kubernetes events to help you monitor your HiveMQ deployments and make it easy to integrate into your own observability stack.

A single HiveMQ Platform Operator can manage one or more HiveMQ deployments in your Kubernetes cluster. This makes it possible to manage HiveMQ deployments for multiple, differing environments, such as staging and production, or environments with separate production use cases.

The HiveMQ Platform Operator Helm chart installs the following items on your Kubernetes environment:

The custom resource definition (CRD) for the HiveMQPlatform object.

The Deployment and pod for the HiveMQ Platform Operator.

The Kubernetes RBAC permissions the operator requires to manage your HiveMQ platforms.

apps/hivemq/hivemq-platform-operator.yaml

    apiVersion: argoproj.io/v1alpha1
    kind: Application
    metadata:
      name: hivemq-operator
      namespace: argocd
    spec:
      project: default
      source:
        repoURL: https://hivemq.github.io/helm-charts
        chart: hivemq-platform-operator
        targetRevision: 0.2.13 # check for latest target revision
      destination:
        name: in-cluster
        namespace: hivemq
      syncPolicy:
        automated: {}
        syncOptions:
          - CreateNamespace=true
      ignoreDifferences:
        - group: apiextensions.k8s.io
          kind: CustomResourceDefinition
          jsonPointers:
            - /spec/versions/0/additionalPrinterColumns/0/priority
            - /spec/versions/0/additionalPrinterColumns/1/priority
            - /spec/versions/0/additionalPrinterColumns/2/priority

Deploy the HiveMQ Platform

The HiveMQ Platform Helm chart installs the following items:

A HiveMQ Platform custom resource.

The HiveMQ config.xml as a Kubernetes ConfigMap.

When you use the HiveMQ Platform Helm chart, a HiveMQ Platform custom resource is deployed. Your HiveMQ Platform Operator watches for this incoming custom resource and automatically initiates the installation of your HiveMQ Platform.

The HiveMQ Platform cluster is deployed as a Kubernetes StatefulSet, whose replica count determines how many HiveMQ nodes are installed.

apps/hivemq/hivemq-platform.yaml

    apiVersion: argoproj.io/v1alpha1
    kind: Application
    metadata:
      name: hivemq-platform
      namespace: argocd
    spec:
      project: default
      source:
        repoURL: https://hivemq.github.io/helm-charts
        chart: hivemq-platform
        helm:
          valuesObject:
            license:
              create: false
              name: "hivemq-licenses"
            services:
              - type: mqtt
                exposed: true
                containerPort: 1883
                serviceType: LoadBalancer
              - type: control-center
                exposed: true
                containerPort: 8080
                serviceType: LoadBalancer
        targetRevision: 0.2.29
      destination:
        name: in-cluster
        namespace: hivemq
      syncPolicy:
        automated: {}
        syncOptions:
          - CreateNamespace=true

As mentioned earlier, Helm charts can be configured using the spec.source.helm.valuesObject object in our Argo CD application. You can find a complete description of the default values in the values.yaml of the HiveMQ Platform Helm chart.

    valuesObject:
      # Configures all HiveMQ licenses.
      license:
        # as we are using SealedSecrets no license secret should be created by the operator
        create: false
        # The name of the Kubernetes Secret with all HiveMQ licenses.
        name: "hivemq-licenses"
      # Defines the Service configurations for the HiveMQ platform.
      # Configures the Kubernetes Service objects and the HiveMQ listeners.
      # Possible service types are: "control-center", "rest-api", "mqtt", "websocket" and "metrics"
      services:
        # Configure the MQTT service to listen on port 1883 and use type LoadBalancer for external connectivity
        - type: mqtt
          exposed: true
          containerPort: 1883
          serviceType: LoadBalancer
        # Configure the HiveMQ Control Center service to listen on port 8080 and use type LoadBalancer
        # for external connectivity
        - type: control-center
          exposed: true
          containerPort: 8080
          serviceType: LoadBalancer

Deploy Additional Resources

apps/hivemq/hivemq-manifests.yaml

    apiVersion: argoproj.io/v1alpha1
    kind: Application
    metadata:
      name: hivemq-manifests
      namespace: argocd
    spec:
      project: default
      source:
        repoURL: <YOUR_REPOSITORY_URL>
        targetRevision: HEAD
        path: manifests/hivemq
      destination:
        name: in-cluster
      syncPolicy:
        automated:
          prune: true

Because we have two Helm deployments in this case, we have defined two primary Argo CD applications. The secondary application, defined in hivemq-manifests.yaml, adds further resources to the HiveMQ Platform deployment.

Create your HiveMQ Licenses Secret

As you can see, we have configured the HiveMQ Platform Helm chart to use an existing Secret for the HiveMQ broker license. We will add this Secret as a SealedSecret to the additional manifests application for HiveMQ.

Step 1: Create a Secret template

Create a file called hivemq-licenses-secret.yaml. Make sure NOT to commit it to Git! If you have added *-secret.yaml to your .gitignore configuration, the file will be ignored anyway.

    apiVersion: v1
    kind: Secret
    metadata:
      name: hivemq-licenses
      namespace: hivemq
    type: Opaque
    data:
      license.lic: >-
        <BASE64_ENCODED_LICENSE_GOES_HERE>

Step 2: Encode Your License as Base64

To add the license to the Secret you created in Step 1, you need to encode it as Base64. Assuming your license is stored in a file called hivemq-license.lic, you can do the encoding as follows. The tr call strips the line breaks that GNU base64 inserts every 76 characters, so the value stays on a single line:

    cat hivemq-license.lic | base64 | tr -d '\n'   # on macOS, append "| pbcopy" to copy to the clipboard

This prints your Base64-encoded license to the terminal so that you can copy it into the Kubernetes Secret.
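Steps 1 and 2 can also be combined so that no manual copying is involved. The helper below is only a sketch: the function name write_license_secret is made up, and it simply writes the same manifest as in Step 1 with the encoded license filled in.

```shell
# Hypothetical helper: write the Secret manifest from a license file.
# Usage: write_license_secret <license-file> [output-file]
write_license_secret() {
  local license_file="$1"
  local out_file="${2:-hivemq-licenses-secret.yaml}"
  local encoded

  # Encode the license and strip line breaks so the value stays on a single line.
  encoded=$(base64 < "$license_file" | tr -d '\n') || return 1

  cat > "$out_file" <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: hivemq-licenses
  namespace: hivemq
type: Opaque
data:
  license.lic: $encoded
EOF
}
```

Running write_license_secret hivemq-license.lic produces hivemq-licenses-secret.yaml in the current directory. The file still contains the unencrypted license, so it must stay out of Git like the hand-written version.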

Step 3: Seal the secret and commit to Git

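The final step is to seal the Secret with kubeseal and commit the resulting SealedSecret to the Git repository, in the manifests/hivemq folder that the hivemq-manifests application watches.

    kubeseal --format yaml -f hivemq-licenses-secret.yaml -w hivemq-licenses-sealedsecret.yaml

Run the Deployment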

In the previous sections, we’ve carefully prepared our environment to support a secure, scalable, and GitOps-driven deployment. We started by setting up a structured Git repository to manage Argo CD applications and additional Kubernetes resources. We configured Terraform modules for Argo CD and sealed-secrets. We also explored how to handle sensitive data securely with SealedSecrets and kubeseal, allowing us to keep secret information safely stored in version control.

Now that all configuration files are in place and the necessary prerequisites are met, we’re ready to run the deployment. This final step is simple and straightforward: we’ll use Terraform to plan and apply the configuration, ensuring all resources are deployed as defined in our manifests.

To start the deployment:

1. Run terraform plan to review the resources that will be created and verify the configuration.

2. Run terraform apply to provision the infrastructure and services in your Kubernetes cluster.

With these two commands, the entire setup—including Argo CD and the MQTT broker cluster—will be deployed according to the GitOps principles we’ve established, resulting in a consistent environment.

MQTT Broker Management: Continues in Part 2

At this stage, we have a fully automated and functional HiveMQ MQTT Platform deployment. You can now connect to the broker using your MQTT clients of choice and start publishing and consuming data. This setup is well suited for testing but is still missing some pieces needed for production readiness, particularly regarding the security of the system.
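To try out the connection, one option is a quick publish and subscribe round trip against the external address of the MQTT service. The sketch below assumes the Mosquitto command-line clients (mosquitto_pub and mosquitto_sub) are installed locally, which is not part of the setup described in this post; the function name and the topic are made up for illustration.

```shell
# Hypothetical smoke test; requires the Mosquitto command-line clients (an assumption).
# Look up the external address of the MQTT service first, e.g. with:
#   kubectl get svc -n hivemq
# Usage: mqtt_smoke_test <broker-host>
mqtt_smoke_test() {
  local host="$1"
  local topic="gitops-demo/smoke-test"   # made-up topic name

  # Publish a retained message so a later subscriber still receives it.
  mosquitto_pub -h "$host" -p 1883 -t "$topic" -m "hello" -r || return 1

  # Read one message back, giving up after 5 seconds.
  mosquitto_sub -h "$host" -p 1883 -t "$topic" -C 1 -W 5
}
```

The retained flag keeps the example deterministic but leaves the message on the broker; publishing an empty retained message to the same topic (mosquitto_pub with -r -n) clears it again.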

In Part 2 of this blog series, Automating MQTT Broker Management on Kubernetes with IaC and GitOps, you will learn how to:

Secure MQTT traffic with TLS encryption

Secure access to the HiveMQ Control Center using IngressRoute and TLS

Read on. In Part 3, we will cover how to enable the HiveMQ Enterprise Extension to add authentication and authorization to the system. Stay tuned!

Sven Kobow

Sven Kobow is part of the Professional Services team at HiveMQ with more than two decades of experience in IT and IIoT. In his role as IoT Solutions Architect, he supports customers and partners in successfully implementing their solutions and generating maximum value. Before joining HiveMQ, he worked in the automotive sector at a major OEM.