Elements of the Unified Namespace

In our previous post, we explored what a Unified Namespace (UNS) does—how it serves as a single source of truth, bridges IT and OT systems, and turns raw data into actionable insights. But while the concept of a UNS is powerful, its success depends on how well it is designed and implemented.

A UNS is more than just an MQTT broker or a data pipeline; it serves as the real-time, event-driven backbone of an organization's data architecture. It must support high-throughput messaging, fine-grained access control, edge and cloud interoperability, and historical data integration to provide a single source of truth across OT, IT, and business systems.

In this post, we’ll explore the key technical requirements for building a scalable and resilient UNS, from communication protocols and data models to message routing, security, and compute infrastructure. These elements form the foundation for a UNS that not only centralizes and contextualizes data but ensures it remains accessible, secure, and actionable across an enterprise.

Technical Requirements and Considerations to Implement a UNS

Scalability and Performance

A Unified Namespace is designed to handle real-time data flow across an entire enterprise, from industrial machines on the shop floor to business intelligence systems in the cloud.

Scalability in a UNS means the ability to support thousands of devices, millions of messages per second, and multiple enterprise applications without degradation in performance. Performance is equally critical, as real-time responsiveness is often the primary reason for adopting a UNS. Whether monitoring production lines, tracking asset conditions, or feeding AI-driven automation, sub-second data latency can be the difference between smooth operations and costly downtime.

Horizontal and Vertical Scalability

A well-architected UNS must be able to scale horizontally, adding brokers, nodes, or clusters to distribute workloads so that performance remains stable as more devices and users join the system. Cloud-native technologies, containerized deployments, and microservices-based architectures provide the flexibility to allocate resources dynamically as demand increases.

Vertical scaling means increasing the capacity of existing infrastructure, such as memory, processing power, or storage, to improve performance without redesigning the system. Unlike horizontal scaling, which distributes the load across multiple servers, vertical scaling strengthens the individual components of the UNS. The result is higher message throughput, faster query execution, and shorter response times, so real-time data processing stays efficient as the number of connected devices, applications, and messages grows.

A balanced UNS architecture combines both approaches. Vertical scaling gets the most out of individual nodes, and horizontal scaling distributes load across more nodes as demand grows. This hybrid approach keeps the UNS resilient, performant, and scalable without relying solely on one method of expansion.

Enterprise-Wide Connectivity: Interoperability and Multi-Protocol Support

Because a Unified Namespace is not an isolated data pipeline, it must connect and integrate diverse systems across the entire enterprise. Interoperability is the key enabler for a successful UNS. A UNS must interoperate seamlessly with everything from OT systems, such as PLCs from different vendors or multiple SCADA systems (Shop Floor and Edge Connectivity), to IT applications such as ERP, MES, or CMMS, up to cloud analytics (Enterprise Software & Cloud Integration). Only then can it provide a single, unified source of truth.

Multi-protocol support is fundamental to a UNS. Without the ability to support and translate between multiple protocols, a UNS becomes another silo rather than a unifying layer.

The ability to accommodate different protocols also affects scalability. Enterprises grow and integrate new technologies, so a UNS must be flexible enough to support emerging standards without requiring extensive reconfiguration. Whether deploying edge computing solutions, integrating AI-driven analytics, or enabling cross-plant data exchange, multi-protocol support ensures that data remains fluid across various environments.
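A common way to keep a UNS open to new protocols is an adapter layer. Each adapter converts one source format into a single protocol-neutral message, and consumers only ever see that common shape. The sketch below illustrates the idea with two hypothetical source formats: a Modbus-style raw register value with a scale factor, and a JSON-style dict from a vendor gateway. The `UnsMessage` shape, the adapter names, and the dict keys are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass
class UnsMessage:
    """Hypothetical protocol-neutral message shape published into the UNS."""
    topic: str
    value: float
    unit: str


def from_modbus(register_value: int, scale: float, topic: str, unit: str) -> UnsMessage:
    # Modbus registers carry raw integers; a per-device scale factor (assumed to come
    # from configuration) converts them to engineering units.
    return UnsMessage(topic=topic, value=register_value * scale, unit=unit)


def from_gateway_json(doc: Dict[str, Any], topic: str) -> UnsMessage:
    # Hypothetical vendor gateway that already delivers {"val": ..., "units": ...}.
    return UnsMessage(topic=topic, value=float(doc["val"]), unit=doc["units"])


# Registry of adapters: supporting a new protocol means registering one more entry,
# not reconfiguring every consumer.
ADAPTERS: Dict[str, Callable[..., UnsMessage]] = {
    "modbus": from_modbus,
    "gateway-json": from_gateway_json,
}


def normalize(protocol: str, *args: Any, **kwargs: Any) -> UnsMessage:
    """Dispatch a raw reading to the adapter registered for its protocol."""
    return ADAPTERS[protocol](*args, **kwargs)
```

For example, `normalize("modbus", 235, 0.1, "acme/dallas/press-shop/line-2/press-12/temperature", "°C")` yields a message with a value of about 23.5. A gateway reading for the same topic produces the same message type.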

A Well-Architected UNS

Data Modeling and Standardization: Schema Enforcement

A UNS is only as effective as the data it organizes and distributes. Without proper data modeling and standardization, the UNS risks becoming a chaotic, unstructured message hub rather than a reliable, scalable source of truth. Schema and data model enforcement is a critical component of this standardization, ensuring that data remains consistent, meaningful, and interoperable across the enterprise. Without a well-defined schema, integrating and consuming data becomes a challenge, leading to misinterpretations, inefficiencies, and unreliable analytics.

Schema enforcement acts as a contract that defines how data should be structured, ensuring that every system publishing or subscribing to the UNS follows the same set of rules. Schema registries can provide a centralized location where data models are maintained and updated, allowing for controlled evolution of data structures without breaking existing integrations.

Beyond structure, schema enforcement also facilitates data validation and quality control. Ensuring that data adheres to a predefined format prevents incomplete, malformed, or incorrect information from propagating through the UNS. This is especially critical in industrial settings where sensor inaccuracies, faulty device configurations, or inconsistent metadata can lead to incorrect operational insights.
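Real deployments usually express this contract in a schema language such as JSON Schema, Avro, or Protobuf, often managed through a schema registry. The hand-rolled validator below is only a minimal stand-in that shows the idea: a payload is checked against a declared set of fields and types before it is accepted into the namespace. The `TEMPERATURE_SCHEMA` contents and field names are hypothetical.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical contract for a temperature event: field name -> accepted Python types.
TEMPERATURE_SCHEMA: Dict[str, Tuple[type, ...]] = {
    "asset_id": (str,),
    "timestamp": (str,),
    "value": (int, float),
    "unit": (str,),
}


def validate(payload: Dict[str, Any], schema: Dict[str, Tuple[type, ...]]) -> List[str]:
    """Return a list of violations; an empty list means the payload honors the contract."""
    errors: List[str] = []
    for field, types in schema.items():
        if field not in payload:
            errors.append(f"missing field: {field}")
            continue
        value = payload[field]
        # bool is a subclass of int in Python, so reject it unless the schema allows bool.
        if (isinstance(value, bool) and bool not in types) or not isinstance(value, types):
            errors.append(f"wrong type for {field}: {type(value).__name__}")
    # Unknown fields are also rejected so the contract cannot drift silently.
    for field in payload:
        if field not in schema:
            errors.append(f"unexpected field: {field}")
    return errors
```

A broker-side or gateway-side hook could drop or quarantine any message for which `validate` returns a non-empty list. This keeps malformed data from propagating to consumers.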

Raw data by itself holds little value unless it is placed within the context of its operational environment. Schema enforcement in a UNS ensures that data is not just standardized but also tagged with context, such as metadata, location, and process-specific attributes. This allows consumers (whether human operators, analytics engines, or AI models) to instantly understand what the data represents, where it comes from, and how it relates to the broader system.

Standardized schemas also play a key role in enabling semantic interoperability across different business units. By applying consistent naming conventions, units of measurement, and hierarchical structures, organizations ensure that data remains understandable and usable across diverse systems.

For example, a machine sensor may generate a temperature reading, but without context (machine ID, process phase, production batch), it is just an isolated data point. Contextualization ensures that this temperature reading is associated with a specific asset, process condition, and historical trend, enabling deeper operational insights. This allows engineers, data scientists, and business analysts to work with the same dataset without needing extensive data transformation.
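The temperature example can be sketched as a small enrichment step. A bare value from a device tag is joined with context looked up in an asset registry before it is published. The registry, the tag name `PLC7.AI3`, and the context fields below are hypothetical placeholders for whatever asset model an organization maintains.

```python
from typing import Any, Dict

# Hypothetical asset registry mapping a raw device tag to its operational context.
ASSET_CONTEXT: Dict[str, Dict[str, str]] = {
    "PLC7.AI3": {
        "machine_id": "press-12",
        "site": "dallas",
        "line": "line-2",
        "process_phase": "curing",
    },
}


def contextualize(tag: str, value: float, unit: str, batch: str) -> Dict[str, Any]:
    """Attach asset, location, process, and batch context to a bare sensor value."""
    context = ASSET_CONTEXT.get(tag)
    if context is None:
        # Refuse to publish data nobody can interpret.
        raise KeyError(f"no context registered for tag {tag!r}")
    return {"value": value, "unit": unit, "batch": batch, "source_tag": tag, **context}
```

Calling `contextualize("PLC7.AI3", 81.4, "°C", "B-1042")` returns one record that an engineer, a data scientist, and a business analyst can all read the same way.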

Ultimately, schema enforcement is not just about maintaining order but also about ensuring that data remains a valuable, trustworthy asset across the enterprise.

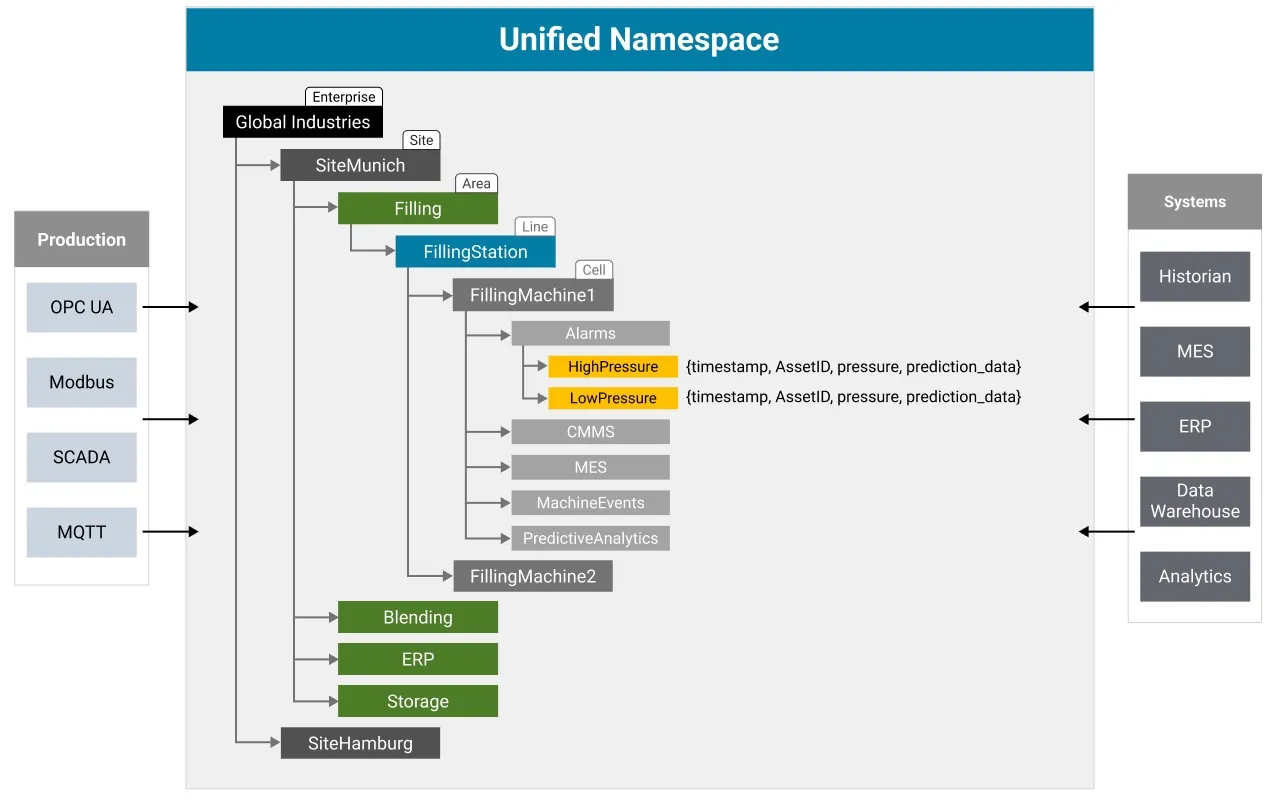

Easy Data Discovery Through an Organizationally Representative Topic Structure

Another key requirement of a Unified Namespace is its ability to make data easily discoverable, navigable, and understandable across an entire organization. This is achieved through a structured topic hierarchy that mirrors the actual organizational structure—whether that’s a factory, supply chain, or enterprise-wide system.

When data is organized in a logical, hierarchical topic structure, users and applications can intuitively locate relevant information without complex queries or external documentation. Instead of searching through unstructured data streams, stakeholders can quickly navigate well-defined namespaces that align with physical locations, processes, and assets.

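For example, a manufacturing UNS might use a topic structure that descends from the enterprise to the site, area, production line, and individual machine, with each level forming one segment of the topic path.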

This structured approach ensures consistency and scalability while making it easy for different teams, operations, maintenance, IT, and business intelligence, to consume the data they need without deep technical expertise.


Additionally, because the topic structure reflects real-world relationships, data consumers can infer context without prior knowledge of specific system integrations. If an engineer wants to find performance metrics for a specific production line, they can logically traverse the namespace rather than relying on IT teams to provide custom integrations.
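Browsing the namespace level by level can be sketched in a few lines. Topics are built from hierarchy segments, and the children of any node are derived from the topics themselves. The `acme/dallas/press-shop/...` paths are hypothetical, and the enterprise/site/area/line/machine/metric levels are one possible choice, loosely in the style of ISA-95.

```python
from typing import Iterable, List, Set


def build_topic(*parts: str) -> str:
    """Join hierarchy levels into a slash-separated topic path."""
    # Empty segments or embedded slashes would silently shift every level below them.
    if not parts or any(not p or "/" in p for p in parts):
        raise ValueError(f"invalid topic segments: {parts!r}")
    return "/".join(parts)


def children(topics: Iterable[str], prefix: str) -> List[str]:
    """List the distinct next-level names below `prefix`, like opening a folder."""
    prefix_levels = prefix.split("/")
    depth = len(prefix_levels)
    found: Set[str] = set()
    for topic in topics:
        levels = topic.split("/")
        if levels[:depth] == prefix_levels and len(levels) > depth:
            found.add(levels[depth])
    return sorted(found)
```

With topics for two presses in Dallas and a robot in Berlin, `children(topics, "acme")` lists the sites. `children(topics, "acme/dallas/press-shop/line-2")` lists the machines on that line, with no custom integration required.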

As organizations scale, a well-designed topic structure prevents data sprawl and reduces complexity, ensuring that new assets, devices, or locations can be seamlessly integrated into the existing framework.

Message Filtering and Routing

A Unified Namespace is designed to handle a massive influx of real-time data from various sources, but not every system, application, or user needs to consume all that information. Without efficient message filtering and routing, the UNS risks becoming an overwhelming data firehose, leading to unnecessary bandwidth consumption, processing overhead, and potential security risks. A well-architected UNS ensures that the right data reaches the right consumers at the right time.

Message filtering allows subscribers to receive only the data they need, reducing unnecessary traffic and improving system efficiency. In a topic-based system this is achieved through structured topic hierarchies and wildcard subscriptions. Instead of subscribing to all data, a consumer can subscribe to a specific plant, production line, or machine state, ensuring that only relevant messages are processed. More advanced filtering mechanisms, such as content-based filtering, allow consumers to receive messages that match specific conditions within the payload, such as temperature thresholds, error states, or specific asset IDs.
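In MQTT, wildcard subscriptions use `+` to match exactly one topic level and `#` to match all remaining levels, including the parent level itself. The function below is a minimal sketch of that matching logic for illustration only. It omits the MQTT rule that wildcards at the first level do not match topics beginning with `$`, and it does not validate malformed filters.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if `topic` matches an MQTT-style filter with '+' and '#' wildcards."""
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            # Multi-level wildcard: matches the parent level and everything below it.
            return True
        if i >= len(topic_levels):
            # Filter is longer than the topic (e.g. "a/+" vs "a").
            return False
        if level != "+" and level != topic_levels[i]:
            # '+' matches any single level; anything else must match exactly.
            return False
    # Without '#', filter and topic must have the same depth.
    return len(filter_levels) == len(topic_levels)
```

A maintenance dashboard could subscribe to `acme/dallas/+/line-2/#`. It would then receive everything from line 2 in any area of the Dallas site and nothing from other sites.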

Routing ensures that messages reach the appropriate destinations based on predefined rules, business logic, or dynamic conditions. In a large-scale UNS, messages may need to be routed to multiple destinations, such as real-time monitoring dashboards, AI-driven analytics platforms, cloud storage, or enterprise systems like ERP and MES.
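Rule-based routing can be sketched as a list of rules. Each rule combines a coarse topic selection with a content-based condition on the payload and names one or more destinations. The rule set, thresholds, and destination names (`historian`, `alerting`, `cmms`, `mes`) are hypothetical. Production systems typically express such rules in broker extensions, stream processors, or integration platforms rather than in application code.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass
class Route:
    name: str
    topic_prefix: str                              # topic-based selection
    condition: Callable[[Dict[str, Any]], bool]    # content-based filter on the payload
    destinations: List[str]                        # hypothetical sink names


# Hypothetical rule set: everything goes to the historian, overheating presses in Dallas
# trigger alerting and maintenance, and error states go to the MES.
ROUTES: List[Route] = [
    Route("all-telemetry", "acme/", lambda p: True, ["historian"]),
    Route("overheat", "acme/dallas/", lambda p: p.get("value", 0) > 90, ["alerting", "cmms"]),
    Route("faults", "acme/", lambda p: p.get("state") == "ERROR", ["mes"]),
]


def route(topic: str, payload: Dict[str, Any]) -> List[str]:
    """Return every destination whose rule matches, in rule order, without duplicates."""
    targets: List[str] = []
    for rule in ROUTES:
        if topic.startswith(rule.topic_prefix) and rule.condition(payload):
            for dest in rule.destinations:
                if dest not in targets:
                    targets.append(dest)
    return targets
```

A single message can fan out to several destinations. A reading of 95 °C from a Dallas press, for instance, reaches the historian, alerting, and the CMMS.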

Security is also an integral part of message filtering and routing. Access control policies ensure that only authorized users and applications can subscribe to specific topics or receive certain messages. Role-Based Access Control (RBAC) can be applied to further restrict data flow based on user permissions, device identity, or security policies.

Security and Access Control

Given its critical role in managing operational and business data, security and access control are not optional—they are foundational requirements. Without a robust security framework, a UNS becomes a potential attack vector, exposing sensitive production data, operational insights, and business intelligence to unauthorized access, manipulation, or cyber threats.

Security in a UNS must be multi-layered, addressing authentication, authorization, encryption, and auditability. Every device, application, and user interacting with the UNS must be authenticated before being allowed to publish or subscribe to data. Strong authentication mechanisms, such as X.509 certificates, OAuth, or JSON Web Tokens (JWT), ensure that only verified entities can access the namespace.

Once authenticated, entities must be authorized, for example through Role-Based Access Control. Not every system or user should have unrestricted access to all data in the UNS. Fine-grained access control defines permissions at the topic level, specifying which entities can publish, subscribe, or request data.
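Topic-level RBAC can be sketched as a deny-by-default check. Clients map to roles, and roles grant actions on topic patterns. The roles, client IDs, and patterns below are hypothetical. To stay short, this sketch supports only exact topics and patterns ending in `/#`. It also checks concrete topics, whereas a real broker must additionally evaluate the topic filters contained in subscription requests.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical role definitions: (action, topic pattern).
ROLE_PERMISSIONS: Dict[str, List[Tuple[str, str]]] = {
    "line2-plc": [("publish", "acme/dallas/press-shop/line-2/#")],
    "maintenance": [("subscribe", "acme/dallas/#")],
}

# Hypothetical mapping of authenticated client identities to roles.
CLIENT_ROLES: Dict[str, Set[str]] = {
    "plc-07": {"line2-plc"},
    "tablet-mx": {"maintenance"},
}


def _covers(pattern: str, topic: str) -> bool:
    """Exact match, or subtree match for patterns ending in '/#'."""
    if pattern.endswith("/#"):
        root = pattern[:-2]
        return topic == root or topic.startswith(root + "/")
    return pattern == topic


def is_allowed(client_id: str, action: str, topic: str) -> bool:
    """Deny by default; allow only if one of the client's roles grants the action."""
    for role in CLIENT_ROLES.get(client_id, set()):
        for granted_action, pattern in ROLE_PERMISSIONS.get(role, []):
            if granted_action == action and _covers(pattern, topic):
                return True
    return False
```

Under these rules, the PLC may publish its line-2 telemetry but cannot subscribe to it. The maintenance tablet may read the whole Dallas subtree but cannot publish into it.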

Encryption is another key pillar of UNS security. TLS (Transport Layer Security) should be enforced to secure data in transit, ensuring that messages are protected from eavesdropping or tampering.
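On the client side, enforcing TLS largely comes down to building a properly configured TLS context. The sketch below uses Python's standard `ssl` module to require certificate and hostname verification and to refuse protocol versions older than TLS 1.2. It can optionally load an X.509 client certificate for mutual TLS. The file paths are placeholders, and how the context is handed to an MQTT client depends on the client library in use.

```python
import ssl
from typing import Optional


def make_client_tls_context(
    ca_file: Optional[str] = None,
    cert_file: Optional[str] = None,
    key_file: Optional[str] = None,
) -> ssl.SSLContext:
    """Build a client-side TLS context for connecting to a broker."""
    # create_default_context() enables certificate verification (CERT_REQUIRED) and
    # hostname checking; with ca_file=None it trusts the system's default CA store.
    context = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    # Refuse legacy protocol versions explicitly.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    if cert_file and key_file:
        # Optional X.509 client certificate: mutual TLS doubles as device authentication.
        context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return context
```

The resulting context can wrap a plain socket with `context.wrap_socket(sock, server_hostname=host)`. For MQTT over TLS the conventional port is 8883.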

Historical Data and Persistence

A UNS is primarily designed for real-time, event-driven data exchange, but a truly valuable UNS must also support historical data and persistence. Real-time insights are essential for monitoring and automation, but a UNS without historical data is like a factory running without records, useful for real-time operations but lacking the ability to improve, learn, and adapt.

By implementing robust persistence mechanisms, enterprises can unlock the full potential of their data, enabling continuous improvement, compliance, and advanced analytics that drive smarter, data-driven decision-making.

Persistence in a UNS ensures that data is not lost when consumers go offline or when applications need to process historical events. However, different types of data require different persistence strategies. Some data points, such as machine telemetry and sensor readings, may need to be retained in time-series databases. Others, such as business transactions and operational events, may be better suited to event stores or cloud-based storage solutions.

For historical analytics, data archiving must be structured and accessible. A UNS should provide queryable storage options that allow systems to retrieve past data on demand, whether for compliance audits, production analysis, or AI-driven insights.
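The core of queryable historical storage is a per-topic index ordered by timestamp that supports range queries. The toy in-memory class below illustrates only that idea. A real UNS would hand this job to a time-series database or historian, and the class and method names are hypothetical.

```python
import bisect
from collections import defaultdict
from typing import DefaultDict, List, Tuple


class TimeSeriesStore:
    """Toy per-topic time-series store supporting time-range queries."""

    def __init__(self) -> None:
        self._times: DefaultDict[str, List[float]] = defaultdict(list)
        self._values: DefaultDict[str, List[float]] = defaultdict(list)

    def append(self, topic: str, ts: float, value: float) -> None:
        # Insert in timestamp order so late-arriving samples still end up sorted.
        i = bisect.bisect_right(self._times[topic], ts)
        self._times[topic].insert(i, ts)
        self._values[topic].insert(i, value)

    def query(self, topic: str, start: float, end: float) -> List[Tuple[float, float]]:
        """Return (timestamp, value) pairs with start <= ts < end."""
        times = self._times.get(topic, [])
        values = self._values.get(topic, [])
        lo = bisect.bisect_left(times, start)
        hi = bisect.bisect_left(times, end)
        return list(zip(times[lo:hi], values[lo:hi]))
```

A compliance audit or a production analysis then becomes a range query over a topic, for example all readings from one press during a given shift.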

Retained messaging plays a key role in ensuring that the latest known state of a device or system is always available to new subscribers. With retained messages, a client that subscribes immediately receives the most recent value instead of waiting for the next real-time event. This is particularly useful when applications or dashboards reconnect and need an instant snapshot of the system state.
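In MQTT, the broker stores at most one retained message per topic, and each new retained publish replaces the previous one. A retained publish with an empty payload clears the stored message. The class below simulates that behavior to show what a newly connecting dashboard receives. It is an illustrative sketch with hypothetical names, and it supports only exact topics and filters ending in `/#` rather than full MQTT wildcard matching.

```python
from typing import Dict, List, Tuple


class RetainedStore:
    """Simulates a broker's retained-message store: last retained payload per topic."""

    def __init__(self) -> None:
        self._retained: Dict[str, bytes] = {}

    def publish(self, topic: str, payload: bytes, retain: bool) -> None:
        if not retain:
            return  # non-retained messages are delivered live only, never stored
        if payload == b"":
            self._retained.pop(topic, None)  # empty retained payload clears the topic
        else:
            self._retained[topic] = payload  # replaces any previous retained value

    def on_subscribe(self, topic_filter: str) -> List[Tuple[str, bytes]]:
        """Return the retained messages a new subscriber receives immediately."""
        if topic_filter.endswith("/#"):
            root = topic_filter[:-2]
            hits = [t for t in self._retained if t == root or t.startswith(root + "/")]
        else:
            hits = [topic_filter] if topic_filter in self._retained else []
        return [(t, self._retained[t]) for t in sorted(hits)]
```

A dashboard that reconnects and subscribes to `acme/dallas/#` gets the current state of every machine that published a retained state at once. It does not see stale history or messages that were never retained.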

Edge and Cloud Computing

A UNS must seamlessly integrate edge and cloud computing to support real-time processing, scalability, and long-term data management. Striking the right balance between edge and cloud is critical because different types of data processing require different levels of latency, computational power, and accessibility.

Edge computing brings processing power closer to where data is actually generated: on the factory floor, in remote industrial sites, or embedded in IoT devices. This is crucial for low-latency decision-making, where milliseconds matter, such as in real-time machine control, predictive maintenance, and anomaly detection. Processing data at the edge reduces network congestion and cloud dependency, allowing systems to operate autonomously even when connectivity is inconsistent or unreliable. By filtering, aggregating, and analyzing data locally, edge computing ensures that only meaningful insights are sent to the cloud, reducing storage and bandwidth costs.
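One simple form of edge aggregation collapses a stream of raw samples into periodic summaries before anything leaves the site. The function below groups samples into fixed-size windows and emits min, max, mean, and count per window. The window size and the summary fields are illustrative assumptions. Deadband filtering, time-based windows, and anomaly-triggered forwarding are common alternatives.

```python
from statistics import mean
from typing import Dict, Iterable, List


def _summarize(buf: List[float]) -> Dict[str, float]:
    return {"min": min(buf), "max": max(buf), "mean": mean(buf), "count": len(buf)}


def aggregate_windows(samples: Iterable[float], window: int) -> List[Dict[str, float]]:
    """Collapse raw edge samples into per-window summaries for upstream forwarding.

    A trailing partial window is kept so no data is silently dropped.
    """
    if window <= 0:
        raise ValueError("window must be positive")
    buf: List[float] = []
    summaries: List[Dict[str, float]] = []
    for sample in samples:
        buf.append(sample)
        if len(buf) == window:
            summaries.append(_summarize(buf))
            buf = []
    if buf:
        summaries.append(_summarize(buf))
    return summaries
```

With a window of 3, seven raw readings become three summary messages. With a window of 1,000, a high-frequency sensor sends roughly a thousandth of its raw message count to the cloud.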

Cloud computing, on the other hand, provides scalability, centralization, and advanced analytics capabilities. While the edge handles immediate, high-speed processing, the cloud is best suited for long-term data storage, historical analysis, AI/ML model training, and cross-site data integration. A UNS with cloud integration enables enterprises to correlate data across multiple locations, perform large-scale analytics, and derive strategic insights that no single site could produce on its own. Cloud services provide elasticity, allowing businesses to scale computing power as data volume grows without investing in additional on-premises infrastructure.

A well-designed UNS must support a hybrid architecture, where edge and cloud computing work together. Edge-to-cloud pipelines ensure that real-time data from the factory floor is processed at the edge when needed, but also synchronized with cloud storage for deeper insights.
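A basic building block of an edge-to-cloud pipeline is store-and-forward. The edge buffers messages while the uplink is unavailable and flushes them in order once it returns. The sketch below assumes a hypothetical `send` callable that returns `True` on successful delivery. It uses a bounded in-memory queue that drops the oldest entries when full, whereas a production gateway would typically persist the buffer to disk.

```python
from collections import deque
from typing import Callable, Deque


class StoreAndForward:
    """Buffers edge messages while the cloud link is down; flushes them in order later."""

    def __init__(self, send: Callable[[str], bool], max_buffer: int = 10_000) -> None:
        self._send = send  # hypothetical uplink: returns True if the message was delivered
        # Bounded buffer: when full, the oldest message is discarded to make room.
        self._buffer: Deque[str] = deque(maxlen=max_buffer)

    def publish(self, message: str) -> None:
        self._buffer.append(message)
        self.flush()

    def flush(self) -> None:
        # Deliver strictly in order; stop at the first failure and keep the rest.
        while self._buffer:
            if not self._send(self._buffer[0]):
                return
            self._buffer.popleft()

    @property
    def pending(self) -> int:
        return len(self._buffer)
```

Local consumers on the shop floor keep working from the edge broker during an outage. The cloud catches up once connectivity is restored.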

Security plays a crucial role in this balance. Edge devices must be secured against physical tampering and cyber threats, while cloud environments must enforce strict access controls and encryption to protect sensitive industrial data.

Event-Driven Architecture and Real-Time Processing

In traditional systems, data exchange typically relies on request-response models, requiring applications to constantly poll for updates. This approach results in tightly coupled, rigid system architectures that are difficult to scale and maintain, leading to increased delays, inefficiencies, and excessive network traffic.

A Unified Namespace is fundamentally built on an event-driven architecture (EDA), which provides real-time data flow, decoupled system interactions, and scalable automation across an enterprise. Unlike traditional architectures that depend on periodic polling or request-response mechanisms, an event-driven UNS pushes data the moment it is generated, enabling immediate reactions and informed decision-making. This approach supports asynchronous, push-based data distribution: events are published once and instantly made available to multiple subscribers (one-to-many).

The event-driven communication pattern relies on a publish-subscribe model. Data producers send events to the namespace, and consumers subscribe to the specific channels, called topics, that they need. This decouples data producers from consumers, making the system highly scalable and flexible. For example, a machine sensor can publish temperature data without knowing which applications will use it, whether it's an AI-driven predictive maintenance system, a control dashboard, or a cloud analytics platform. This allows for dynamic data distribution without hardcoded integrations.
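The decoupling can be seen in a minimal in-process publish/subscribe bus. The publisher only names a topic and never references the consumers, and each event fans out to every current subscriber. This sketch is illustrative only. The class name is hypothetical, topics are matched exactly without wildcards, and a real UNS would use a broker with network transport, sessions, and QoS.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List

Handler = Callable[[str, Any], None]


class EventBus:
    """In-process pub/sub: publishers know topics, never the subscribers behind them."""

    def __init__(self) -> None:
        self._subs: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subs[topic].append(handler)

    def publish(self, topic: str, payload: Any) -> int:
        """Push the event to every current subscriber (one-to-many); return the fan-out count."""
        handlers = list(self._subs.get(topic, []))
        for handler in handlers:
            handler(topic, payload)
        return len(handlers)
```

Adding a predictive maintenance service later is one more `subscribe` call. The sensor's publishing code does not change.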

Laying the Groundwork for a Scalable UNS

A well-architected UNS is the foundation of a data-driven enterprise, ensuring that OT and IT systems work together for real-time, structured, and secure data exchange. From enforcing schema standards to enabling seamless multi-protocol interoperability, the technical elements outlined in this post provide a framework for enterprises looking to implement a scalable and resilient UNS.

But building a UNS isn’t just about architecture—it’s about choosing the right technology to support it. MQTT has emerged as the protocol best suited for powering a UNS, offering lightweight, scalable, and event-driven communication that meets all the requirements we’ve discussed.

Read our blog, Why MQTT is Critical for Building a Unified Namespace, to learn why MQTT is critical for UNS success. Also read Beyond MQTT: The Fit and Limitations of Other Technologies in a UNS, which dives into why alternative technologies such as Kafka, OPC UA, and traditional data lakes fall short in delivering the real-time intelligence enterprises need for building a Unified Namespace.


Jens Deters

Jens Deters is the Principal Consultant, Office of the CTO at HiveMQ. He has held various roles in IT and telecommunications over the past 22 years: software developer, IT trainer, project manager, product manager, consultant, and branch manager. As a long-time expert in MQTT and IIoT and developer of the popular GUI tool MQTT.fx, he and his team support HiveMQ customers every day in implementing the world's most exciting (I)IoT use cases at leading brands and enterprises.