Overcoming MQTT Sparkplug Challenges for Smarter Manufacturing

In complex industrial environments, such as smart manufacturing and energy production and distribution, managing data presents significant challenges. Data is spread across different platforms, devices, and applications and often involves compatibility issues, rigid protocols, and excess data that’s tough to manage.

MQTT Sparkplug is a specification that offers a solution for managing this complexity. It gives manufacturers the ability to manage their devices, data models, and digital infrastructure with a known standard, reducing complexity and offering a plug-and-play approach to data pipelines.

While a data specification can greatly reduce data-management complexity, it relies on users enforcing it for all devices and can be somewhat inflexible for downstream data processing tasks that aren’t aware of Sparkplug.

HiveMQ's Sparkplug Module for Data Hub addresses these challenges. It removes downstream data bottlenecks and lets you enforce Sparkplug compliance, making it easier to handle and process IIoT data in real time and to keep your data pipeline fit for purpose.

How is MQTT Sparkplug Used in Industrial Environments?

The Sparkplug protocol has become a standard in industrial environments for managing and transmitting IIoT data in real time. Built on MQTT, Sparkplug is specifically designed to meet the unique needs of industrial environments, providing a reliable, low-latency method for sharing data across systems. Sparkplug also supports stateful data management: devices and applications can detect when a data source goes offline, which is critical for continuous factory floor operations.
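As a rough sketch of what this state awareness looks like to a consumer, the snippet below tracks which edge nodes are online from the message type in each topic. It assumes the Sparkplug B topic layout `spBv1.0/<group_id>/<message_type>/<edge_node_id>` and the `NBIRTH` and `NDEATH` message types. The `NodeStateTracker` class is a hypothetical name used for illustration, and a real consumer would also decode payloads and check sequence numbers.

```python
class NodeStateTracker:
    """Tracks edge node online/offline state from Sparkplug B topics."""

    def __init__(self):
        self.online = {}  # (group_id, edge_node_id) -> bool

    def on_message(self, topic: str) -> None:
        parts = topic.split("/")
        # Ignore anything that is not a Sparkplug B node-level topic.
        if len(parts) < 4 or parts[0] != "spBv1.0":
            return
        group_id, message_type, edge_node_id = parts[1], parts[2], parts[3]
        if message_type == "NBIRTH":
            self.online[(group_id, edge_node_id)] = True
        elif message_type == "NDEATH":
            self.online[(group_id, edge_node_id)] = False

    def is_online(self, group_id: str, edge_node_id: str) -> bool:
        # Nodes that never announced themselves count as offline.
        return self.online.get((group_id, edge_node_id), False)


tracker = NodeStateTracker()
tracker.on_message("spBv1.0/PlantA/NBIRTH/Line1")
tracker.on_message("spBv1.0/PlantA/NDEATH/Line1")
```

After the two messages above, `tracker.is_online("PlantA", "Line1")` reports the node as offline, because the death message arrived last.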

However, the Sparkplug protocol comes with challenges. Its rigid topic structure can be restrictive when facilities need to customize data flows to suit specific operational requirements. Furthermore, Sparkplug's binary Protobuf data format, while efficient, is not compatible with many downstream applications, which rely on simpler, human-readable formats. Without additional processing, Sparkplug data can create bottlenecks and require extensive downstream handling by systems like Manufacturing Execution Systems (MES), historians, or analytics platforms. This adds time and complexity to data workflows and may delay critical insights. Other known limitations of Sparkplug include no retained messages for node and device data, only three organizational layers (group, edge node, and device) for contextualizing data, and QoS 0 for data messages.

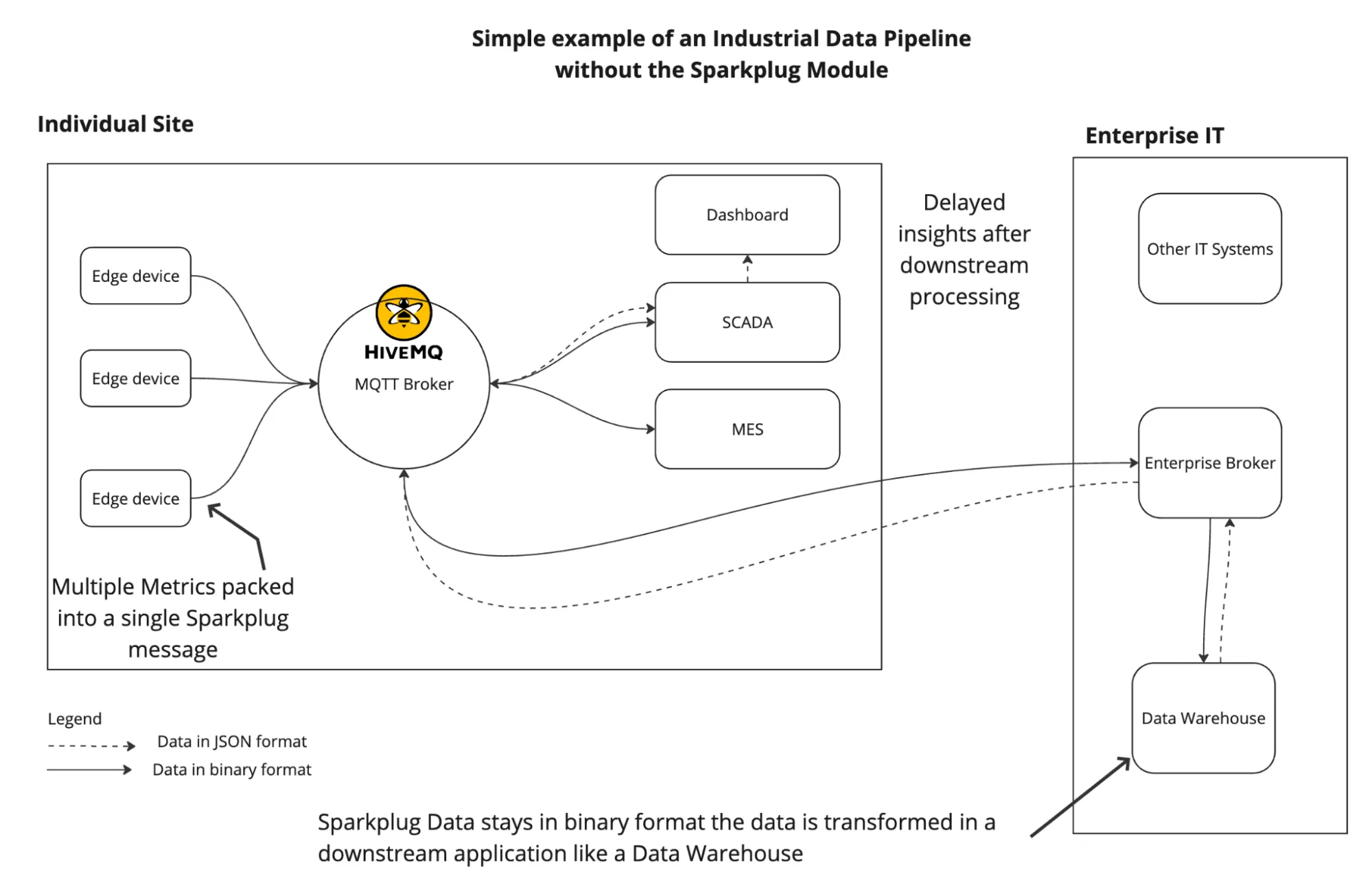

Below is a simple representation of such a case:

As you can see, the data is processed downstream in a data warehouse and needs a round trip before it can be used for any meaningful insights.
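To make the fixed topic structure mentioned above concrete, here is a small parser for Sparkplug B topics. It assumes the layout `spBv1.0/<group_id>/<message_type>/<edge_node_id>[/<device_id>]`. The names `SparkplugTopic` and `parse_topic` are hypothetical and chosen only for this illustration.

```python
from typing import NamedTuple, Optional


class SparkplugTopic(NamedTuple):
    group_id: str
    message_type: str
    edge_node_id: str
    device_id: Optional[str]  # None for node-level messages


def parse_topic(topic: str) -> SparkplugTopic:
    """Split a Sparkplug B node or device topic into its fixed elements."""
    parts = topic.split("/")
    if len(parts) not in (4, 5) or parts[0] != "spBv1.0":
        raise ValueError(f"not a Sparkplug B node or device topic: {topic!r}")
    device_id = parts[4] if len(parts) == 5 else None
    return SparkplugTopic(parts[1], parts[2], parts[3], device_id)


parsed = parse_topic("spBv1.0/PlantA/DDATA/Line1/Press7")
print(parsed.group_id, parsed.edge_node_id, parsed.device_id)
```

Group, edge node, and device are the only levels available, so a deeper hierarchy such as site, area, and line has to be squeezed into those three identifiers.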

The Sparkplug Module for Data Hub addresses these issues by transforming Sparkplug data to a generic MQTT message in the JSON format earlier in the data pipeline, enabling smoother integration and faster processing. By converting data to a flexible MQTT topic structure, the module enables enterprises to reduce unnecessary data volume, maintain high data quality standards, and eliminate the need for complex, late-stage data transformations. This streamlined data flow also supports the creation of a Unified Namespace (UNS), a core concept in Industry 4.0, facilitating seamless data integration between operational and IT systems.
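The following sketch illustrates the general idea of this transformation; it is not the module's implementation. It assumes the Protobuf payload has already been decoded into a Python dictionary, and the output topic layout, the `uns` prefix, and the function name `to_generic_message` are all hypothetical choices made for the example.

```python
import json


def to_generic_message(topic: str, decoded_payload: dict, prefix: str = "uns"):
    """Map a Sparkplug B topic and decoded payload to (new_topic, json_text)."""
    parts = topic.split("/")
    if len(parts) not in (4, 5) or parts[0] != "spBv1.0":
        raise ValueError(f"not a Sparkplug B node or device topic: {topic!r}")
    group_id, message_type = parts[1], parts[2]
    # Drop the protocol version, lead with the custom prefix, and move the
    # message type to the end so consumers can subscribe per site or line.
    new_topic = "/".join([prefix, group_id, *parts[3:], message_type])
    return new_topic, json.dumps(decoded_payload, sort_keys=True)


new_topic, body = to_generic_message(
    "spBv1.0/PlantA/DDATA/Line1/Press7",
    {"timestamp": 1700000000000,
     "metrics": [{"name": "temperature", "value": 71.5}]},
)
print(new_topic)  # uns/PlantA/Line1/Press7/DDATA
print(body)
```

Because the result is plain JSON on an ordinary MQTT topic, any subscriber can consume it without a Protobuf decoder or knowledge of Sparkplug.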

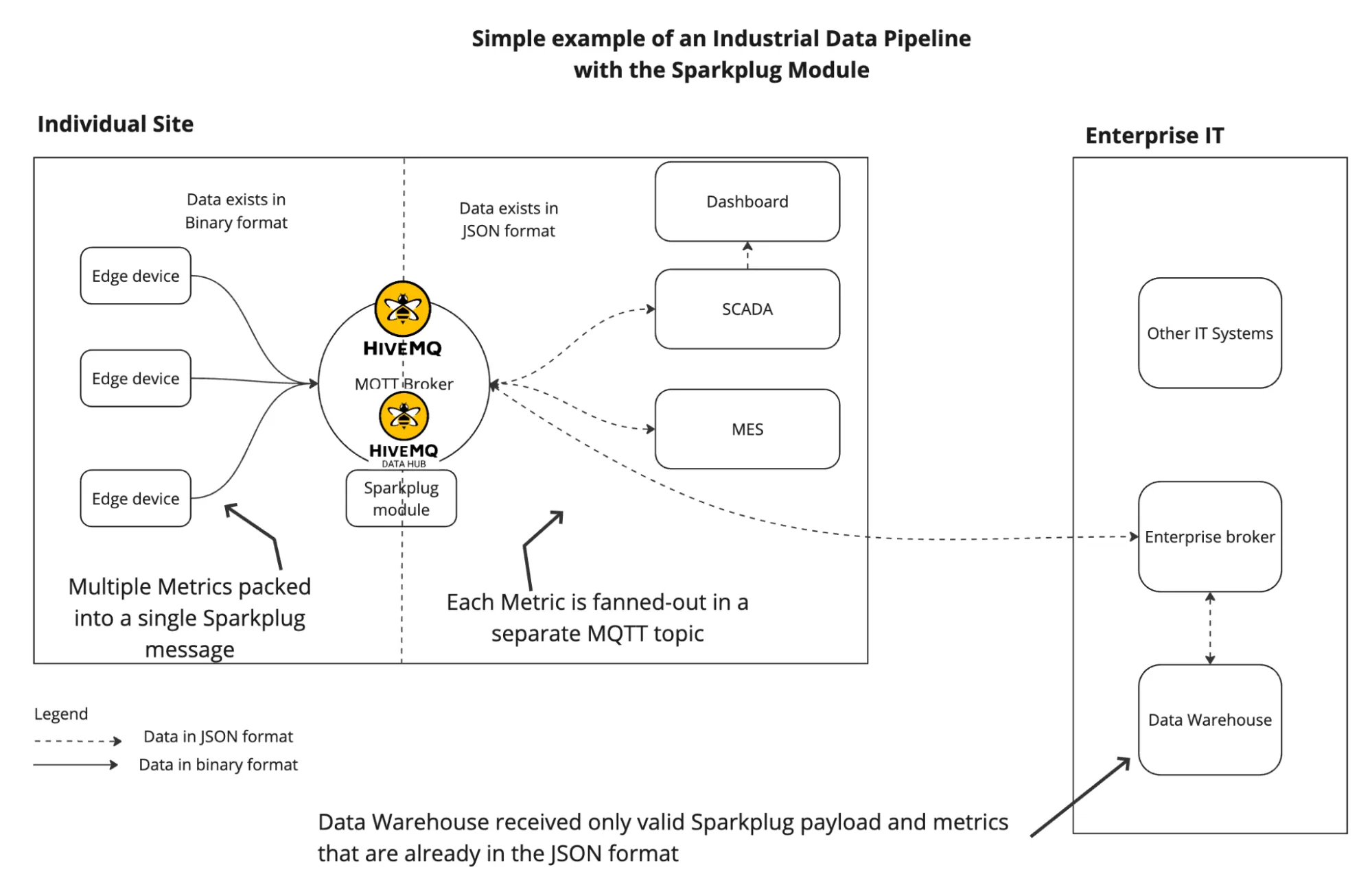

With the Sparkplug Module, the data flow in the above example is simplified:

The data is transformed in flight and becomes readily available for processing and meaningful interpretation by human operators. It also increases the data availability to many other OT and IT systems.

Introducing HiveMQ’s Sparkplug Module for Data Hub

HiveMQ’s Sparkplug Module for Data Hub simplifies the data processing from devices that use the Sparkplug protocol. Integrated within the HiveMQ Platform, the module automatically converts Sparkplug data, originally encoded in Protobuf (a complex binary format), into JSON, a format that’s both human-readable and compatible with a wide range of applications.

With just a couple of clicks, the Sparkplug Module lets you enforce data quality, generate metrics, and seamlessly transform data, making it easy to use without manual intervention or complex setups.

This module makes it easier to configure data schemas, data policies, and behavior policies and automates key data workflows—all designed to help you move from rigid, structured data to a more flexible format.

What are the Key Features of the Sparkplug Module?

The Sparkplug Module’s core features are designed to address specific data challenges faced in industrial environments:

Schema Validation: The Sparkplug Module checks that incoming data aligns with predefined schemas. If data doesn’t meet the standards, you can automatically drop the message, log an error, or take other actions to ensure that only high-quality data flows through your systems.

Protobuf to JSON Conversion: Sparkplug data is encoded in Protobuf, a binary format that’s challenging to work with directly. By converting this data to JSON, the module enhances system interoperability, making it easier to integrate data across platforms while providing a readable format for better understanding and usability.

Metric Fan-Out: Extract specific metrics from Sparkplug payloads and publish them to individual, configurable MQTT topics. This ensures data consumers receive only relevant information, reducing information overload and improving decision-making with actionable data. It also reduces costs: less data is transported and stored, and less hardware is needed to parse the binary data.
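The features above can be sketched together in a few lines. This is an illustration under stated assumptions, not the module's code: the payload is assumed to be already decoded from Protobuf into a dictionary with `timestamp` and `metrics` fields, the structural check stands in for real schema validation, and the names `is_valid` and `fan_out` and the output topic layout are hypothetical.

```python
import json


def is_valid(payload: dict) -> bool:
    """Minimal structural check standing in for schema validation."""
    metrics = payload.get("metrics")
    if not isinstance(payload.get("timestamp"), int) or not isinstance(metrics, list):
        return False
    return all(isinstance(m, dict) and "name" in m and "value" in m for m in metrics)


def fan_out(base_topic: str, payload: dict, wanted: set) -> list:
    """Return (topic, json_text) pairs, one per selected metric."""
    if not is_valid(payload):
        return []  # drop invalid messages; a real policy might also log them
    messages = []
    for metric in payload["metrics"]:
        if metric["name"] in wanted:
            body = json.dumps(
                {"value": metric["value"], "timestamp": payload["timestamp"]}
            )
            messages.append((f"{base_topic}/{metric['name']}", body))
    return messages


payload = {
    "timestamp": 1700000000000,
    "metrics": [
        {"name": "temperature", "value": 71.5},
        {"name": "pressure", "value": 3.2},
    ],
}
for topic, body in fan_out("uns/PlantA/Line1/Press7", payload, {"temperature"}):
    print(topic, body)
```

Only the `temperature` metric is published here, each on its own topic, so a dashboard that needs one value does not have to receive and parse the full payload.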

How to Use the Sparkplug Module in HiveMQ’s Data Hub?

The Sparkplug Module is designed for quick and easy setup, with a user-friendly interface that minimizes the learning curve. Here’s how to get started:

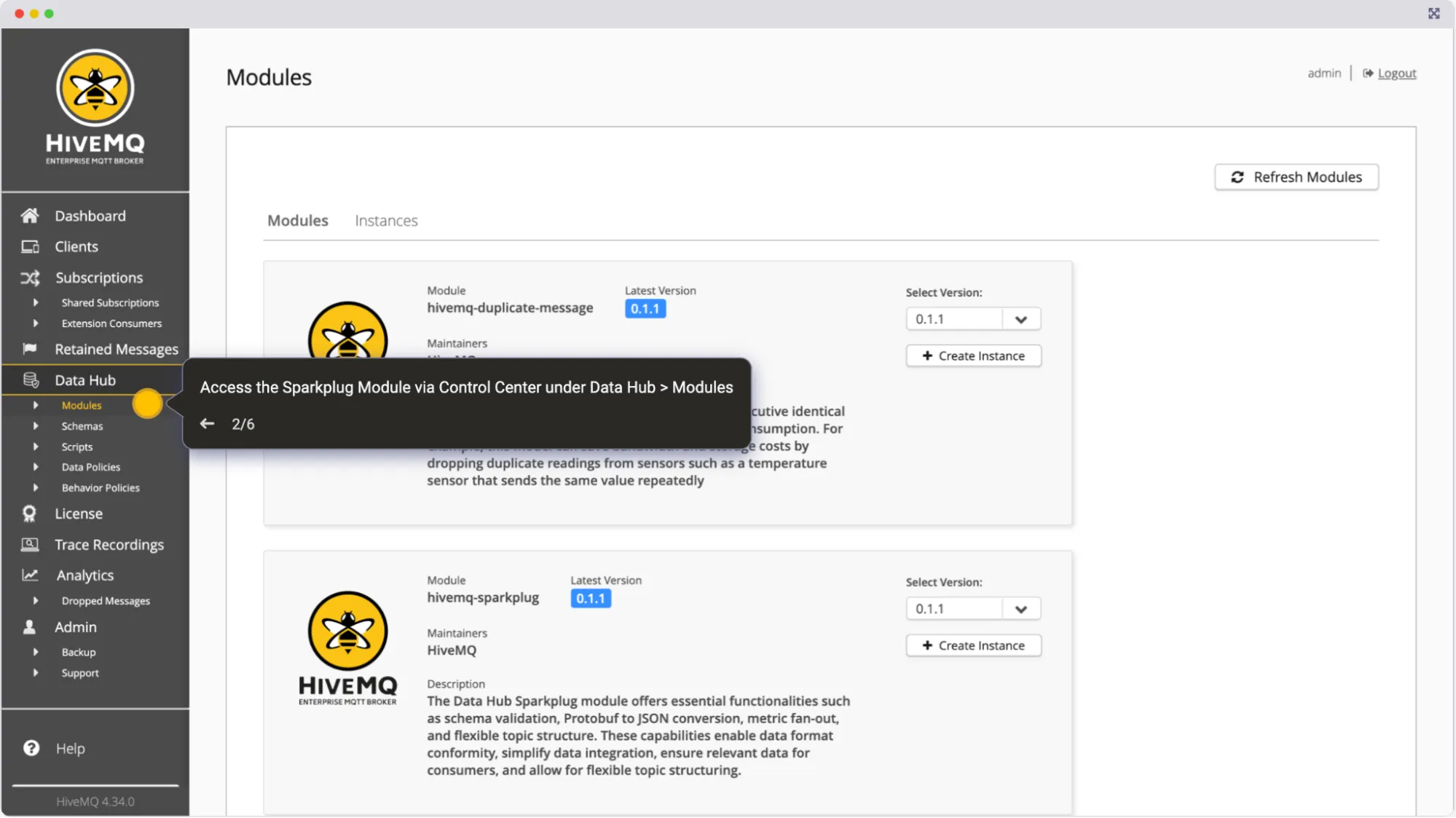

Access Modules via the Control Center: In the HiveMQ Control Center, you can find Modules under Data Hub > Modules.

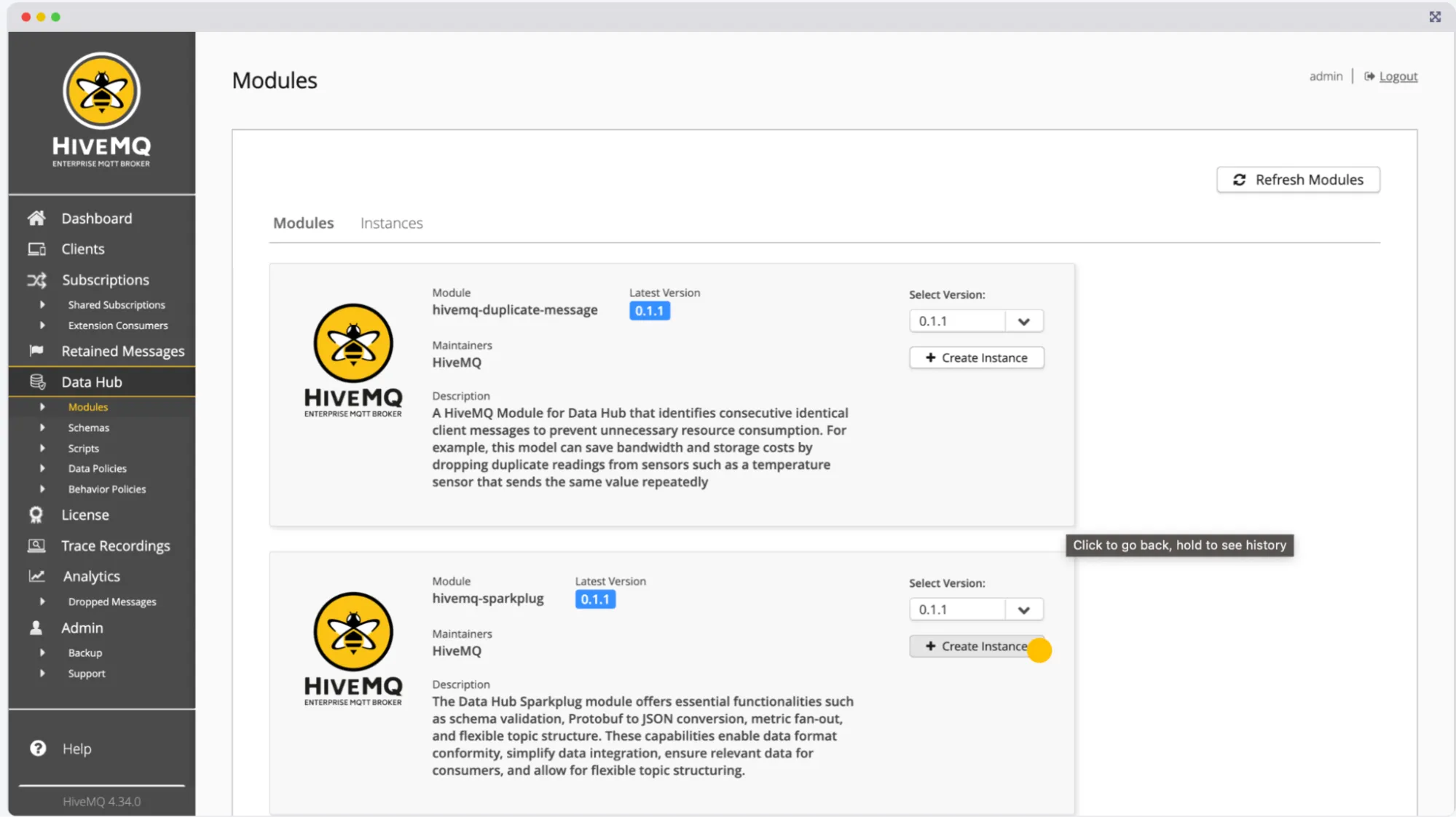

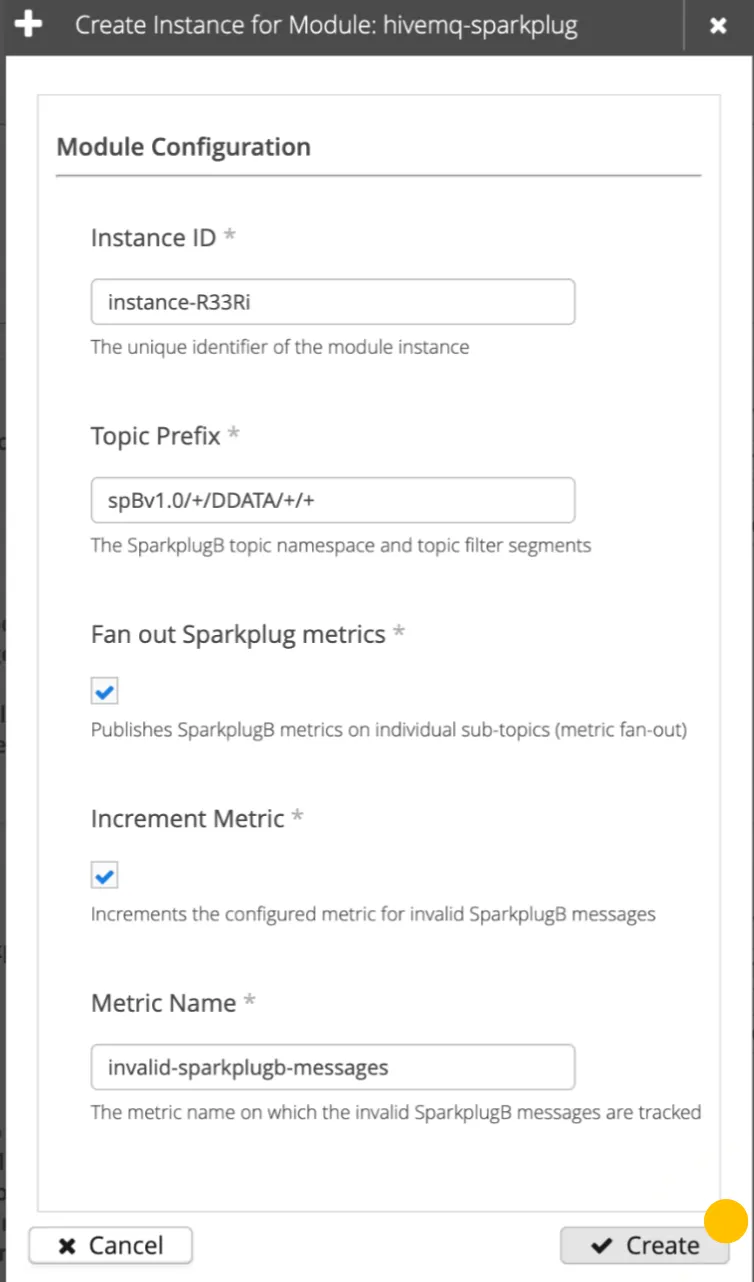

Create an instance by clicking on the + Create instance button under hivemq-sparkplug to configure the Module.

Configure the Module by selecting the actions that you need. You can:

Customize the topic prefix

Fan out metrics

Track invalid Sparkplug B messages

The configuration is pre-filled with defaults. Click Create to run your module instance with the desired configuration.

With just two clicks, you can activate Protobuf-to-JSON conversions, allowing Sparkplug data to be compatible with various applications, analytics tools, and dashboards.

Unlocking the Power of Unified Data in Smart Manufacturing

By converting Sparkplug data to JSON, HiveMQ’s Sparkplug Module for Data Hub enables enterprise customers to get one step closer to a unified, real-time view of their industrial data. This approach enhances visibility across operations, accelerates data-driven decision-making, and supports Industry 4.0 transformation across manufacturing and energy sectors. With efficient data management and real-time processing, OT engineers and IT teams can leverage IIoT data for critical insights without additional delays.

HiveMQ’s Sparkplug Module for Data Hub simplifies complex data integration challenges, helping you reduce data clutter, maintain data quality, and streamline data workflows. Whether you’re focused on improving data quality, avoiding data overload, or enhancing data workflows, the Sparkplug Module offers a user-friendly solution that provides tangible benefits for any enterprise environment.

To learn more about how the Sparkplug Module can transform your data management, check out the User Guide, and download HiveMQ to get started with Data Hub today.

Shashank Sharma

Shashank Sharma is a product marketing manager at HiveMQ. He is passionate about technology, supporting customers, and enabling developer-centric workflows. He focuses on the HiveMQ Cloud offerings and has previous experience in application software tooling, autonomous driving, and numerical computing.