What’s New in Sparkplug® v3.0.0?

The Eclipse Sparkplug Working Group has released Version 3.0 of the Sparkplug Specification, the successor to Sparkplug Specification v2.2 from 2019.

So what does Sparkplug Specification Version 3.0 provide compared to Version 2.2?

At a high level, the new v3.0.0 Sparkplug® Specification is a cleanup and formalization of the previous release. According to the Eclipse Sparkplug Working Group, the goals were to resolve ambiguities in v2.2 and to make clear normative statements while maintaining the general intent of the v2.2 specification.

For example, Chapter 2 “Principles” replaces the “Background” chapter of the v2.2 specification and describes the key principles of Sparkplug in detail, while Chapter 5 “Operational Behavior” covers the operational aspects of Sparkplug environments extensively. The specification now also includes MQTT v5.0-specific settings, especially with respect to session handling, e.g., “Clean Session” in MQTT 3.1.1 vs. “Clean Start” in MQTT v5.0.
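As a rough illustration of that difference, here is a minimal Python sketch of the session fields a Sparkplug client would put into its MQTT CONNECT packet. It assumes our reading of the v3.0 spec: non-persistent sessions, i.e. Clean Session set to true on MQTT 3.1.1, and Clean Start set to true plus a Session Expiry Interval of 0 on MQTT 5.0. The SessionSettings type and the sparkplug_session_settings function are hypothetical and not part of any MQTT client library.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SessionSettings:
    """Session-related CONNECT fields (hypothetical model, not a client API)."""
    protocol: str                            # "3.1.1" or "5.0"
    clean_session: Optional[bool]            # MQTT 3.1.1 only
    clean_start: Optional[bool]              # MQTT 5.0 only
    session_expiry_interval: Optional[int]   # MQTT 5.0 only, in seconds


def sparkplug_session_settings(protocol: str) -> SessionSettings:
    """Return non-persistent session settings (assumed Sparkplug v3.0 behavior)."""
    if protocol == "3.1.1":
        # A single flag both discards the old session and makes the new one transient.
        return SessionSettings(protocol, clean_session=True,
                               clean_start=None, session_expiry_interval=None)
    if protocol == "5.0":
        # MQTT 5.0 splits this in two: Clean Start discards any existing session,
        # and an expiry interval of 0 ends the new session when the connection closes.
        return SessionSettings(protocol, clean_session=None,
                               clean_start=True, session_expiry_interval=0)
    raise ValueError(f"unsupported MQTT version: {protocol}")


print(sparkplug_session_settings("5.0").session_expiry_interval)  # 0
```

The design point is that MQTT 5.0 needs two settings to express what one flag did in MQTT 3.1.1: with Clean Start alone and a non-zero expiry interval, the broker would still keep the session after a disconnect.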

Sparkplug infrastructures place a specific subset of requirements on MQTT Servers (the specification uses the term “MQTT Server” for what is commonly called an “MQTT Broker”). Any fully MQTT v3.1.1-compliant server/broker meets the requirements of Sparkplug infrastructures.

However, not all of the features of the MQTT Specification are required.

The essential features are:

QoS 0 (at most once) for data

QoS 1 (at least once) for state management

Retained Messages support

“Last Will and Testament” (LWT) for state management

Wildcard subscriptions
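To see how these features map onto Sparkplug traffic, the following Python sketch tabulates QoS and retain settings per message type as we understand them from the v3.0 spec: births, data, commands and DDEATH on QoS 0; the Edge Node's NDEATH as a QoS 1 Will Message; and the Host Application's retained STATE message on QoS 1. Treat the table as an assumption to verify against the specification; PublishSettings and required_broker_features are hypothetical names.

```python
from typing import NamedTuple


class PublishSettings(NamedTuple):
    qos: int
    retain: bool
    will: bool  # True if the message is also registered as an MQTT Will Message


# Assumed per-message-type settings (our reading of Sparkplug v3.0; verify).
SPARKPLUG_PUBLISH_SETTINGS = {
    "NBIRTH": PublishSettings(qos=0, retain=False, will=False),
    "DBIRTH": PublishSettings(qos=0, retain=False, will=False),
    "NDATA": PublishSettings(qos=0, retain=False, will=False),
    "DDATA": PublishSettings(qos=0, retain=False, will=False),
    "NCMD": PublishSettings(qos=0, retain=False, will=False),
    "DCMD": PublishSettings(qos=0, retain=False, will=False),
    "DDEATH": PublishSettings(qos=0, retain=False, will=False),
    # The Edge Node's NDEATH is its Will Message, sent on QoS 1.
    "NDEATH": PublishSettings(qos=1, retain=False, will=True),
    # The Host Application's STATE message is retained and also used as its Will.
    "STATE": PublishSettings(qos=1, retain=True, will=True),
}


def required_broker_features(table: dict) -> set:
    """Derive which MQTT server features the publish settings depend on."""
    features = set()
    for settings in table.values():
        features.add(f"QoS {settings.qos}")
        if settings.retain:
            features.add("retained messages")
        if settings.will:
            features.add("Last Will and Testament")
    return features


print(sorted(required_broker_features(SPARKPLUG_PUBLISH_SETTINGS)))
# ['Last Will and Testament', 'QoS 0', 'QoS 1', 'retained messages']
```

Wildcard support does not show up in the table because it is needed on the subscribing side, for example when a Host Application subscribes to spBv1.0/#.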

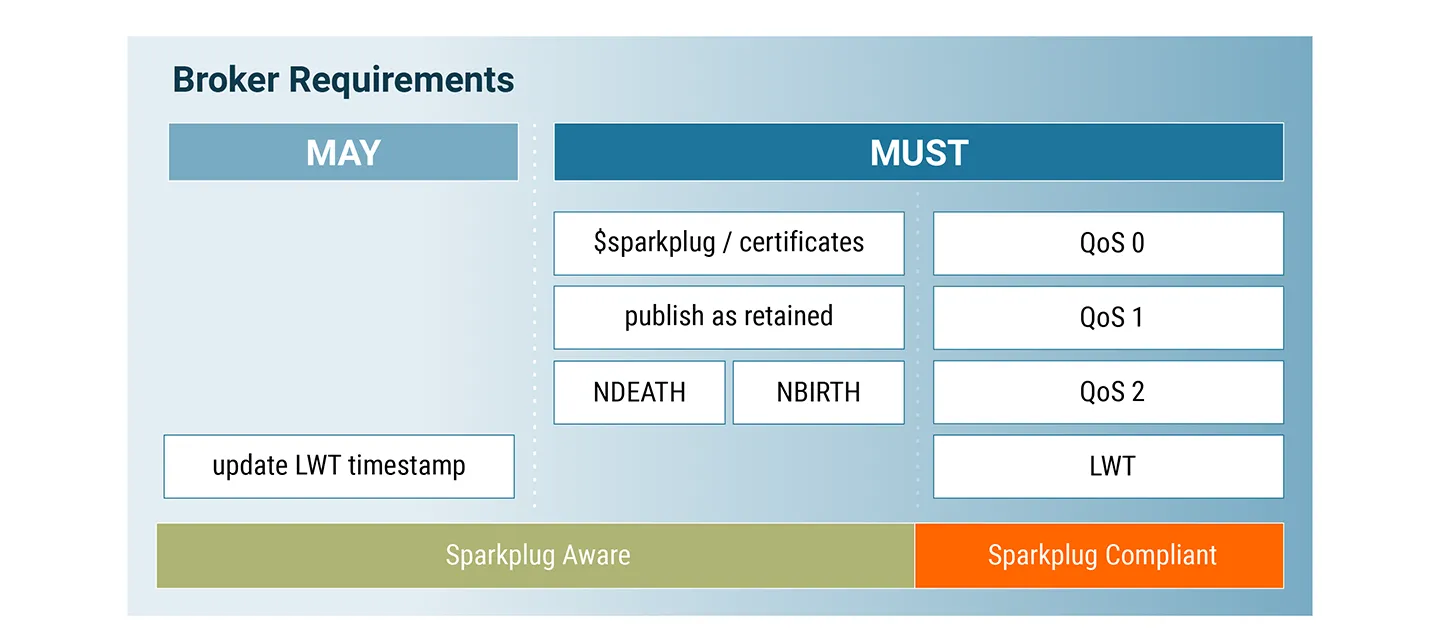

The specification distinguishes between a “Sparkplug Compliant MQTT Server” and a “Sparkplug Aware MQTT Server”. Let’s take a quick look at the difference between the two.

Sparkplug Compliant MQTT Server

A Sparkplug Compliant MQTT Server MUST support the following:

publish and subscribe on QoS 0

publish and subscribe on QoS 1

all aspects of Will Messages including the use of the retain flag and QoS 1

all aspects of the retain flag
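The four requirements above read like a checklist, so here is a small Python sketch that models them as one. BrokerCapabilities and missing_for_sparkplug_compliance are hypothetical names; in practice these capabilities are established by testing the broker (for example with the TCK's Broker profile), not by trusting self-declared flags.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass
class BrokerCapabilities:
    """Hypothetical record of what an MQTT server supports."""
    qos0_publish_and_subscribe: bool
    qos1_publish_and_subscribe: bool
    will_messages_with_retain_and_qos1: bool
    retain_flag: bool


def missing_for_sparkplug_compliance(caps: BrokerCapabilities) -> List[str]:
    """Return the Sparkplug Compliant MQTT Server requirements that are not met."""
    return [f.name for f in fields(caps) if not getattr(caps, f.name)]


broker = BrokerCapabilities(True, True, False, True)
print(missing_for_sparkplug_compliance(broker))  # ['will_messages_with_retain_and_qos1']
```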

Sparkplug Aware MQTT Server

A Sparkplug Aware MQTT Server includes all aspects of a Sparkplug Compliant MQTT Server and MUST additionally be able to:

store NBIRTH and DBIRTH messages as they pass through the MQTT Server

make NBIRTH messages available, with the MQTT retain flag set to true, on a topic of the form:

$sparkplug/certificates/{namespace}/{group_id}/NBIRTH/{edge_node_id}

Given group_id=GROUP1 and edge_node_id=EON1, NBIRTH messages MUST be made available on topic: $sparkplug/certificates/spBv1.0/GROUP1/NBIRTH/EON1

make DBIRTH messages available, with the MQTT retain flag set to true, on a topic of the form:

$sparkplug/certificates/{namespace}/{group_id}/DBIRTH/{edge_node_id}/{device_id}

Given group_id=GROUP1, edge_node_id=EON1 and device_id=DEVICE1, DBIRTH messages MUST be made available on topic: $sparkplug/certificates/spBv1.0/GROUP1/DBIRTH/EON1/DEVICE1
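To make the topic mapping concrete, here is a minimal Python sketch of what a Sparkplug Aware server does conceptually when a birth message passes through: derive the certificate topic from the Sparkplug topic and keep the payload as a retained message. The function names and the in-memory dictionary standing in for the broker's retained-message store are hypothetical; a real broker (or broker extension) does this inside its publish pipeline. The sketch assumes the spBv1.0 namespace and the Sparkplug topic layout namespace/group_id/message_type/edge_node_id[/device_id].

```python
from typing import Dict, Optional

NAMESPACE = "spBv1.0"
CERT_PREFIX = "$sparkplug/certificates/"


def certificate_topic(group_id: str, message_type: str, edge_node_id: str,
                      device_id: Optional[str] = None,
                      namespace: str = NAMESPACE) -> str:
    """Build the $sparkplug/certificates topic for an NBIRTH or DBIRTH."""
    if message_type == "NBIRTH" and device_id is None:
        levels = [namespace, group_id, "NBIRTH", edge_node_id]
    elif message_type == "DBIRTH" and device_id is not None:
        levels = [namespace, group_id, "DBIRTH", edge_node_id, device_id]
    else:
        raise ValueError(f"no certificate topic for {message_type} (device_id={device_id})")
    return CERT_PREFIX + "/".join(levels)


def certificate_topic_for(sparkplug_topic: str) -> Optional[str]:
    """Map an incoming Sparkplug topic to its certificate topic, or None."""
    levels = sparkplug_topic.split("/")
    # Expected layout: namespace/group_id/message_type/edge_node_id[/device_id]
    if len(levels) not in (4, 5) or levels[0] != NAMESPACE:
        return None
    _, group_id, message_type, edge_node_id = levels[:4]
    device_id = levels[4] if len(levels) == 5 else None
    try:
        return certificate_topic(group_id, message_type, edge_node_id, device_id)
    except ValueError:
        return None  # not a birth message (e.g. NDATA, DDEATH)


def store_birth_certificate(retained: Dict[str, bytes], topic: str, payload: bytes) -> None:
    """Keep the latest birth payload, like a retained message, on its certificate topic."""
    cert_topic = certificate_topic_for(topic)
    if cert_topic is not None:
        retained[cert_topic] = payload  # a newer birth replaces the older one


print(certificate_topic("GROUP1", "DBIRTH", "EON1", "DEVICE1"))
# $sparkplug/certificates/spBv1.0/GROUP1/DBIRTH/EON1/DEVICE1
```

Called with the example values from the specification (GROUP1, EON1, DEVICE1), the helpers produce exactly the certificate topics quoted above. Keying the store by topic mirrors retained-message semantics: only the most recent birth per Edge Node or device is kept.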

In addition, a Sparkplug Aware MQTT Server MAY also replace the timestamp of NDEATH messages. If it does, it MUST set the timestamp to the UTC time it attempts to deliver the NDEATH to subscribed clients.

In short, a Sparkplug Aware MQTT Server extends Sparkplug's state management approach: birth certificates (NBIRTH and DBIRTH) are stored as retained messages and made available under the newly introduced topic structure $sparkplug/certificates/#.
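One practical consequence of the $ prefix: under the MQTT topic-matching rules, a subscription whose filter starts with a wildcard (such as a catch-all #) does not match topics beginning with $, so a client has to subscribe to $sparkplug/certificates/# explicitly. The following Python sketch of MQTT topic-filter matching illustrates this; topic_matches is a hypothetical helper, since brokers implement matching internally.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Match an MQTT topic name against a filter with + and # wildcards."""
    # Filters starting with a wildcard must not match topics starting with '$'.
    if topic.startswith("$") and topic_filter[:1] in ("+", "#"):
        return False
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            # '#' matches the parent level and any number of child levels.
            return True
        if i >= len(topic_levels):
            return False
        if level != "+" and level != topic_levels[i]:
            return False  # '+' matches exactly one level; anything else must be equal
    return len(filter_levels) == len(topic_levels)


birth = "$sparkplug/certificates/spBv1.0/GROUP1/NBIRTH/EON1"
print(topic_matches("#", birth))                          # False
print(topic_matches("$sparkplug/certificates/#", birth))  # True
```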

The optional update of the NDEATH timestamp is a highlight of this version. An Edge Node registers its NDEATH message as the MQTT Will Message in its CONNECT packet, so the broker holds that message, including its timestamp, from the moment the client connects. Because the time of an unexpected disconnect cannot be known in advance, this timestamp is necessarily inaccurate. Updating the timestamp when the broker publishes the Will Message solves this issue.
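The following Python sketch shows the idea: when a client's Will Message fires, the broker stamps an NDEATH payload with the current UTC time in milliseconds (the unit Sparkplug timestamps use) before delivery. Real Sparkplug B payloads are Protocol Buffers, so modeling the decoded payload as a plain dict is an assumption of this sketch, and prepare_will_delivery is a hypothetical name, not the API of the HiveMQ extension.

```python
import time
from typing import Callable


def now_utc_ms() -> int:
    """Milliseconds since the Unix epoch (UTC)."""
    return int(time.time() * 1000)


def prepare_will_delivery(topic: str, payload: dict,
                          clock_ms: Callable[[], int] = now_utc_ms) -> dict:
    """Return the Will payload to deliver, with a fresh timestamp for NDEATH.

    `payload` stands in for a decoded Sparkplug B protobuf payload (assumption).
    """
    levels = topic.split("/")
    is_ndeath = len(levels) == 4 and levels[0] == "spBv1.0" and levels[2] == "NDEATH"
    if not is_ndeath:
        return payload  # other Will Messages (e.g. STATE) are delivered unchanged
    updated = dict(payload)  # shallow copy: the stored Will stays untouched
    # Stamp the time of the delivery attempt instead of the CONNECT time.
    updated["timestamp"] = clock_ms()
    return updated


will = {"timestamp": 1000, "metrics": [{"name": "bdSeq", "value": 3}]}
print(prepare_will_delivery("spBv1.0/GROUP1/NDEATH/EON1", will, clock_ms=lambda: 5000))
# {'timestamp': 5000, 'metrics': [{'name': 'bdSeq', 'value': 3}]}
```

Injecting clock_ms keeps the function testable; a broker would simply read its own clock at the moment it attempts delivery.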

HiveMQ provides these optional features through the freely available HiveMQ Sparkplug® Aware Extension, which turns the HiveMQ Broker into a fully Sparkplug® Aware Broker.

The source code as well as a release artifact of the extension can be found at the HiveMQ GitHub repository.

Let End-Users Know You are Sparkplug Ready with the Sparkplug® Compatibility Program

The Sparkplug Compatibility Program gives software and hardware vendors the opportunity to prove compatibility and certify their products for Eclipse Sparkplug and MQTT-based IoT infrastructure.

Certifying vendors' products makes it easy for integrators and end users to procure devices and software that are compatible with the Sparkplug specification. The program helps ensure that certified solutions integrate seamlessly with the most common devices and networks in the Industrial IoT.

To become certified, vendors’ products must pass the open-source tests of the Sparkplug Technology Compatibility Kit (TCK), which confirm conformance to the Sparkplug specification. If a product passes the compatibility tests, the Sparkplug Working Group adds it to its official list of compatible products on the Sparkplug website. Once licensed, vendors can market their compatibility by using the Sparkplug Compatible logo.

https://sparkplug.eclipse.org/compatibility/get-listed/

Here is what you need to know about the TCK…

Sparkplug Technology Compatibility Kit (TCK)

A proper Sparkplug implementation requires full compatibility with the following components:

Edge of Network (EoN) Nodes and Devices

the Primary Host Application

the MQTT Broker

The Sparkplug Technology Compatibility Kit (TCK) provides guidelines and verifies that all components up for certification comply with the Sparkplug Specification.

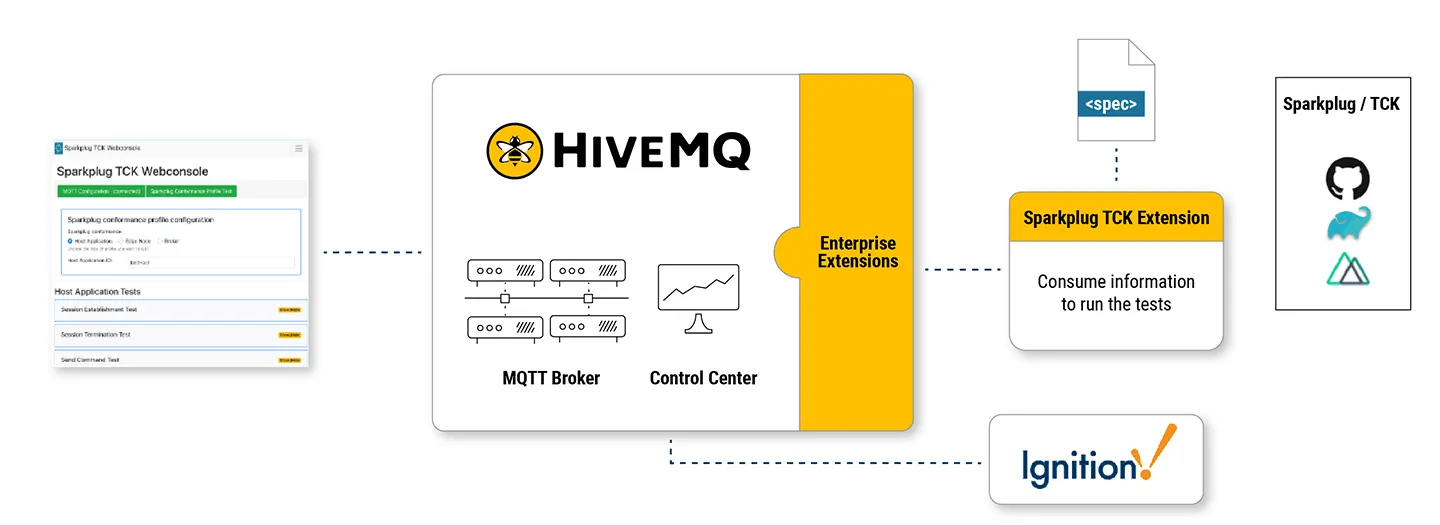

The TCK is a web application consisting of a HiveMQ Broker with a HiveMQ extension and a web interface, which provides access to the compatibility tests.

The TCK can be found in the Eclipse Sparkplug Repository.

To build the Sparkplug TCK HiveMQ Extension, use JDK 11 (JDK 17 will not work): check out “sparkplug/tck” from GitHub and run ./gradlew. The extension artifact is created at build/hivemq-extension in your project folder.

You must add a WebSocket listener to the HiveMQ Broker and copy the extension into HiveMQ's extensions folder. After (re)starting HiveMQ, the extension is ready to go.

If you want to run or debug the HiveMQ extension directly in your IDE, use ./gradlew runHivemqWithExtension. This Gradle task automatically downloads the HiveMQ Community Edition and fully configures it for the TCK extension, as defined in the Gradle build file.

As mentioned, the TCK is controlled through a Nuxt.js (Vue.js) based web console. Make sure the latest versions of yarn and Node.js are available on your machine; on macOS, you can install them with brew install yarn and brew install node. If you get the error message “env: node: No such file or directory,” Node.js is not installed. Once both are available, install and start the web console with yarn install and yarn dev (for more details, see the webconsole readme).

The TCK web console is then accessible in your browser at http://localhost:3000.

You can choose Sparkplug conformance profiles for Host Applications, Edge Nodes and MQTT Brokers.

The Host Application profile contains tests for:

Session Establishment

Session Termination

Send Command

Edge Session Termination

Message Ordering

Multiple MQTT Server (Broker)

The Edge Node profile contains tests for:

Session Establishment

Session Termination

Send Data

Send Complex Data

Receive Command

Primary Host

Multiple MQTT Server (Broker)

With the Broker profile, an MQTT Broker can be tested for general Sparkplug compliance as well as for Sparkplug Aware functionality.

Conclusion

Even though not much new has been specified, the new specification resolves many ambiguities and treats its topics far more rigorously and comprehensively. The real gain, however, is the ability to certify products, or simply to check the Sparkplug compatibility of the products in use. This adds real value not only for manufacturers but also for end users. As a next step, follow our step-by-step guide and give the Sparkplug 3.0 certification a try.

(Sparkplug®, Sparkplug Compatible, and the Sparkplug Logo are trademarks of the Eclipse Foundation.)

Jens Deters

Jens Deters is the Principal Consultant, Office of the CTO at HiveMQ. He has held various roles in IT and telecommunications over the past 22 years: software developer, IT trainer, project manager, product manager, consultant, and branch manager. As a long-time expert in MQTT and IIoT and developer of the popular GUI tool MQTT.fx, he and his team support HiveMQ customers every day in implementing the world's most exciting (I)IoT use cases at leading brands and enterprises.