What's New in HiveMQ 4.35?

The HiveMQ team is excited to announce the release of HiveMQ Enterprise MQTT Platform 4.35. This release introduces a new partition distribution strategy in the HiveMQ Enterprise Extension for Kafka, enhanced authentication options in HiveMQ Control Center v2, custom module creation for HiveMQ Data Hub, and numerous improvements to the HiveMQ broker, including improved client overload protection.

Highlights

- New partition distribution strategy in the HiveMQ Enterprise Extension for Kafka

- Enhanced authentication capabilities in the new Control Center v2

- Create and Import Custom Modules for Data Hub

Configurable Kafka partition distribution strategy in the HiveMQ Enterprise Extension for Kafka

The HiveMQ Enterprise Extension for Kafka integrates HiveMQ with Apache Kafka, the popular open-source streaming platform. HiveMQ 4.35 lets you configure the Kafka partition distribution strategy individually for each MQTT-to-Kafka mapping. The partition strategy determines how messages are distributed across the partitions of a Kafka topic.

How it works

You can now set a key-based or uniform-based Kafka partition distribution strategy for each of your mqtt-to-kafka-mappings.

Example mqtt-to-kafka-mapping configuration with a uniform-based strategy:

```xml
...
<mqtt-to-kafka-mappings>
    <mqtt-to-kafka-mapping>
        <id>your-mapping-id</id>
        <cluster-id>your-cluster-id</cluster-id>
        <mqtt-topic-filters>
            <mqtt-topic-filter>data/+/unordered</mqtt-topic-filter>
        </mqtt-topic-filters>
        <kafka-topic>your-kafka-topic</kafka-topic>
        <kafka-partition-strategy>
            <uniform-based/>
        </kafka-partition-strategy>
    </mqtt-to-kafka-mapping>
</mqtt-to-kafka-mappings>
...
```

For further configuration details, see our documentation.

How it helps

By default, the HiveMQ Enterprise Extension for Kafka uses the key-based Kafka partition strategy. The MQTT topic is used as the key of the Kafka record. The key is hashed, and the hash determines the target partition of your Kafka topic. All messages published to the same MQTT topic share the same key, so they always land in the same partition. This approach preserves their order.
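The following minimal Python sketch makes the key-based behavior concrete. It is not the extension's code. Kafka's default partitioner hashes the key bytes with murmur2. The hypothetical `key_based_partition` function below uses `zlib.crc32` as a stand-in hash, so its partition numbers will not match Kafka's. It does show the property that matters: the same MQTT topic always maps to the same partition. The topic names and the partition count are illustrative assumptions.

```python
import zlib


def key_based_partition(mqtt_topic: str, num_partitions: int) -> int:
    """Pick a Kafka partition from the record key (here: the MQTT topic).

    Stand-in for Kafka's keyed partitioning: Kafka hashes the key bytes with
    murmur2, while this sketch uses CRC32, so the concrete partition numbers
    differ. The illustrated property is the same: equal keys always map to
    the same partition.
    """
    if num_partitions < 1:
        raise ValueError("num_partitions must be at least 1")
    key_bytes = mqtt_topic.encode("utf-8")
    # zlib.crc32 returns an unsigned 32-bit value in Python 3, so the
    # result of the modulo is always in range(num_partitions).
    return zlib.crc32(key_bytes) % num_partitions


# Hypothetical topics: every message on the same topic lands in the same
# partition, so consumers read that topic's messages in publish order.
for message_number in range(3):
    for topic in ("data/sensor-1/ordered", "data/sensor-2/ordered"):
        print(message_number, topic, "-> partition", key_based_partition(topic, 6))
```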

For use cases where message ordering per MQTT topic is not important, HiveMQ 4.35 introduces a new Kafka partitioning strategy called uniform-based. This strategy balances the workload across the partitions of the target Kafka topic, regardless of the Kafka key. The extension can batch records and select partitions uniformly to optimize throughput. Because messages from the same MQTT topic can end up in different partitions, per-topic message ordering is not preserved with this strategy. The uniform-based strategy is currently backed by the Sticky Partitioner.
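The sticky idea behind the uniform-based strategy can be sketched as follows. This is a simplified Python model under stated assumptions, not Kafka's or the extension's implementation. The hypothetical `StickyPartitioner` class counts records per batch, whereas Kafka measures batches in bytes and adds further refinements. The key is ignored: consecutive records fill one partition's batch, and then the partitioner moves on. As a result, records from a single MQTT topic spread across all partitions.

```python
import random
from collections import Counter
from typing import Optional


class StickyPartitioner:
    """Simplified sticky partitioning that ignores the record key.

    Records go to one partition until a batch of `batch_size` records is
    full; then the partitioner switches to a different, randomly chosen
    partition. (Assumption: batches are counted in records, not bytes.)
    """

    def __init__(self, num_partitions: int, batch_size: int, seed: Optional[int] = None) -> None:
        if num_partitions < 1 or batch_size < 1:
            raise ValueError("num_partitions and batch_size must be at least 1")
        self.num_partitions = num_partitions
        self.batch_size = batch_size
        self._rng = random.Random(seed)
        self._current = self._rng.randrange(num_partitions)
        self._records_in_batch = 0

    def partition(self, key: Optional[str] = None) -> int:
        # The key is deliberately unused: placement depends only on batching.
        if self._records_in_batch == self.batch_size:
            self._switch_partition()
        self._records_in_batch += 1
        return self._current

    def _switch_partition(self) -> None:
        # Move to a different partition (if there is one) and start a new batch.
        if self.num_partitions > 1:
            others = [p for p in range(self.num_partitions) if p != self._current]
            self._current = self._rng.choice(others)
        self._records_in_batch = 0


# Hypothetical workload: 30,000 records on one MQTT topic, 3 partitions,
# 10 records per batch. Over many batches each partition gets a similar share.
partitioner = StickyPartitioner(num_partitions=3, batch_size=10, seed=42)
counts = Counter(partitioner.partition("data/sensor-1/unordered") for _ in range(30_000))
print(counts)
```

Sending whole batches to one partition at a time lets the producer fill batches faster, which is where the throughput gain comes from. The trade-off is that one topic's messages are spread over several partitions and lose their relative order.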

Enhanced authentication capabilities in Control Center v2

HiveMQ 4.32 introduced an Open Beta of the new HiveMQ Control Center v2 interface. Now, in our HiveMQ 4.35 release, we are extending the authentication capabilities of the Control Center v2 to support enhanced authentication with OIDC/OAuth via the HiveMQ Enterprise Security Extension and the HiveMQ Enterprise SDK.

How it works

As we work toward making v2 ready for production environments, the existing Control Center v1 remains the default interface for managing your HiveMQ broker. Because the new interface is not yet recommended for production use, access to Control Center v2 is restricted to users with the superadmin role. In addition to the existing basic authentication support, these users can now also access Control Center v2 with OIDC-based enhanced authentication.

How it helps

HiveMQ Control Center v2 has been redesigned from the ground up to provide a better user experience for managing the HiveMQ Platform. This additional authentication support is intended to allow more of our customers to explore this new interface. Future releases of Control Center v2 will focus on further interface improvements. Your feedback is crucial in shaping these improvements. Please use the feedback tool in Control Center v2 to share your thoughts, or email your suggestions to ccv2-feedback@hivemq.com.

Create and Import Custom Modules for Data Hub

HiveMQ Platform release 4.30 introduced HiveMQ Modules for Data Hub that allow you to easily run policies and transformation scripts in Data Hub with just a few clicks. Now, HiveMQ Platform release 4.35 allows you to create your own custom modules.

To get started, you can create a new module from your existing Data Hub policies in the Control Center v1 interface or clone our HiveMQ 'Hello World' Data Hub Module Example from the HiveMQ GitHub repository.

How it works

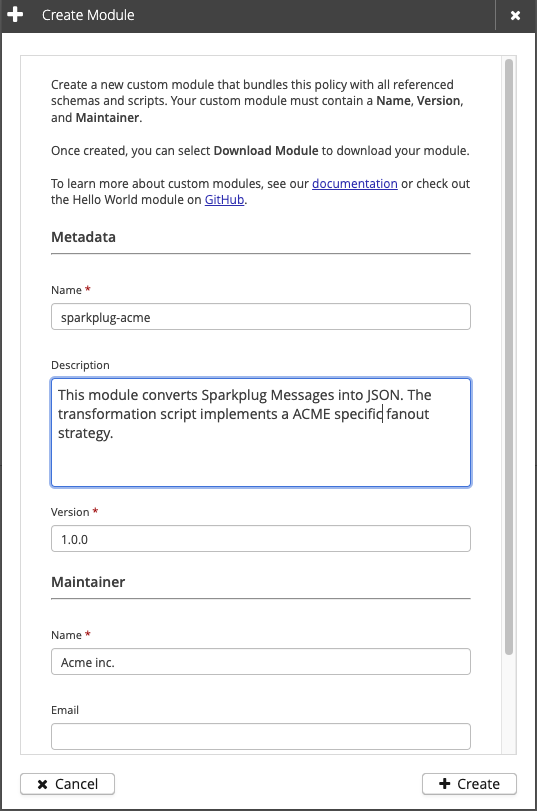

Create a Custom Module: You can develop a custom module from your existing data and behavior policies in HiveMQ Control Center v1. To view all running data policies on your system, navigate to Data Policies in the Data Hub menu of your Control Center. To start creating a module, click the plus sign. In the dialog that opens, enter basic details about the new module. When you click Create, your module is created and is ready for download within a few moments. You can share downloaded modules with other deployments, store them in a GitHub repository, or upload them again. You can even modify a module's content directly to make further customizations.

More advanced users can clone the HiveMQ 'Hello World' Data Hub Module Example from the HiveMQ GitHub repository. It contains a simple structure for module development and an example of how to build a CI pipeline that releases modules reliably and conveniently.

Example Control Center dialog to create a new module from an existing data policy:

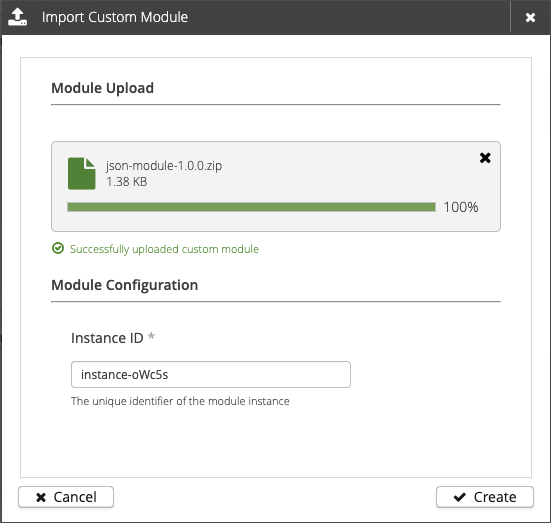

Import a Custom Module: Control Center v1 offers a convenient way to import the custom modules you have built into your deployment. The Data Hub | Modules view now features an Import Custom Module action that opens a dialog to select a module from your local disk. After you define your input parameters, select Create to instantiate the module. All other module functionality stays the same: modules can be enabled, disabled, updated, or deleted.

Example Control Center dialog to import a custom module from your local disk and configure input parameters:

How it helps

The Modules for Data Hub feature helps you easily transform data from your IIoT devices without requiring custom code or complex configuration. A module packages together all of the Data Hub configuration, such as schemas, transformation scripts, the data policy, and the behavior policy. Once a module is created, Data Hub takes care of executing the tasks defined by the module's configuration. With HiveMQ Platform release 4.35, you can build your own custom modules that package your Data Hub configuration. Modules can be easily shared and developed collaboratively within your organization. Our HiveMQ 'Hello World' Data Hub Module Example makes it easy to get started.

Because a module packages all of its configuration in a single file, deployments are simple and do not require you to manually manage dependencies between schemas, scripts, and policies. Even module version updates roll out seamlessly: upload the newer version, and Data Hub fully automates the update. For further information, see our documentation about Custom Modules.

More Noteworthy Features and Improvements

HiveMQ Enterprise MQTT Broker

- Reduced memory usage per client during connection establishment.

- Fixed an issue that could cause the broker to crash during shutdown in rare cases.

- Improved feedback for client connection password states on the Client Details view of the Control Center.

- Improved the robustness and sensitivity of the CONNECT overload protection and client overload protection (throttling).

- Removed obsolete client overload protection metrics.

- Removed the trace-level log statement that shows the triggered number of credits for enabled client throttling to avoid information redundancy.

HiveMQ Data Hub

- Fixed an issue that could prevent the creation of JSON Schema files that start with Byte Order Mark (BOM) bytes, such as the UTF-16 BOM 0xFEFF.
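As a generic illustration of why a leading BOM matters (not HiveMQ's implementation), the following Python sketch detects a BOM, decodes the remaining bytes with the matching encoding, and only then parses the JSON. The helper name `load_json_schema` is hypothetical. Decoding a file that starts with a UTF-8 BOM as plain UTF-8 keeps U+FEFF at the start of the text, and Python's `json.loads` rejects that.

```python
import codecs
import json
from typing import Any

# Known BOMs and the codec for the bytes that follow them. The UTF-32 LE BOM
# (FF FE 00 00) starts with the UTF-16 LE BOM (FF FE), so it is checked first.
BOMS = (
    (codecs.BOM_UTF32_LE, "utf-32-le"),
    (codecs.BOM_UTF32_BE, "utf-32-be"),
    (codecs.BOM_UTF8, "utf-8"),
    (codecs.BOM_UTF16_LE, "utf-16-le"),
    (codecs.BOM_UTF16_BE, "utf-16-be"),
)


def load_json_schema(raw: bytes) -> Any:
    """Parse a JSON Schema file, stripping a leading Byte Order Mark if present."""
    for bom, encoding in BOMS:
        if raw.startswith(bom):
            return json.loads(raw[len(bom):].decode(encoding))
    # No BOM: assume UTF-8, the encoding RFC 8259 requires for JSON exchange.
    return json.loads(raw.decode("utf-8"))


schema_bytes = '{"type": "object"}'.encode("utf-16")  # "utf-16" prepends a BOM
print(load_json_schema(schema_bytes))  # {'type': 'object'}
```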

HiveMQ Control Center v2

- Added a client certificate modal to the Client Details view of the new Control Center v2 to provide mTLS connection information.

- Fixed the formatting of the JVM memory usage display on the Cluster Nodes overview.

- Added a Client ID input field on the Clients overview to facilitate navigation to the details page of a specific client.

- Added a new Was Present client connection password state to the Sessions overview.

IMPORTANT: Java 21 will soon be required to run the HiveMQ Platform.

Java 21 has been recommended for running the HiveMQ Platform since the HiveMQ 4.28 release in April 2024. For all HiveMQ versions released after April 2025, Java 21 will be required.

If you use the official HiveMQ container images, no action is required because these images have shipped with Java 21 since HiveMQ 4.28. If you do not run HiveMQ as a container, or you build your own container image, we recommend updating to Java 21 before the April 2025 deadline.
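To check whether a host already meets the Java 21 requirement, you could use a small script like the following. It is a hypothetical helper, not part of HiveMQ. It runs `java -version`, which prints to standard error, and extracts the major version from the quoted version string. It handles both the legacy scheme (for example `1.8.0_392`, major version 8) and the modern scheme (for example `21.0.2`). It checks the `java` executable on your PATH, which may differ from the JVM your HiveMQ installation is configured to use.

```python
import re
import subprocess
from typing import Optional

REQUIRED_JAVA_MAJOR = 21  # required for HiveMQ versions released after April 2025


def parse_java_major(version_output: str) -> Optional[int]:
    """Extract the Java major version from `java -version` output."""
    match = re.search(r'version "([^"]+)"', version_output)
    if match is None:
        return None
    numbers = re.findall(r"\d+", match.group(1))
    if not numbers:
        return None
    major = int(numbers[0])
    # Java 8 and earlier use the legacy "1.<major>" scheme, e.g. "1.8.0_392".
    if major == 1 and len(numbers) > 1:
        major = int(numbers[1])
    return major


def installed_java_major() -> Optional[int]:
    """Run `java -version` from PATH and return its major version, if any."""
    try:
        result = subprocess.run(
            ["java", "-version"], capture_output=True, text=True, check=False
        )
    except OSError:
        return None  # no runnable `java` executable on PATH
    # `java -version` prints to stderr; fall back to stdout just in case.
    return parse_java_major(result.stderr or result.stdout)


if __name__ == "__main__":
    major = installed_java_major()
    if major is None:
        print("Could not determine the version of `java` on PATH.")
    elif major < REQUIRED_JAVA_MAJOR:
        print(f"Found Java {major}; update to Java {REQUIRED_JAVA_MAJOR}.")
    else:
        print(f"Found Java {major}; meets the Java {REQUIRED_JAVA_MAJOR} requirement.")
```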

Get Started Today

To upgrade to HiveMQ 4.35 from a previous HiveMQ version, follow our HiveMQ Upgrade Guide. To learn more about all the features the HiveMQ Platform offers, explore the HiveMQ User Guide.

HiveMQ Team

The HiveMQ team loves writing about MQTT, Sparkplug, Industrial IoT, protocols, how to deploy our platform, and more. We focus on industries ranging from energy, to transportation and logistics, to automotive manufacturing. Our experts are here to help; contact us with any questions.