Connector Framework vs. Plug-in Architecture in MQTT-Based IoT Systems

As an enterprise or integration architect, you make architectural choices that directly influence your system's scalability, performance, and reliability. Two dominant paradigms for integrating MQTT brokers with other technologies are connector frameworks and plug-in architectures. In this blog post, we'll weigh the pros and cons of the two approaches and conclude by explaining why the plug-in framework is preferred for building HiveMQ Enterprise Extensions.

Understanding Architectural Paradigms: Plug-ins vs. Connectors

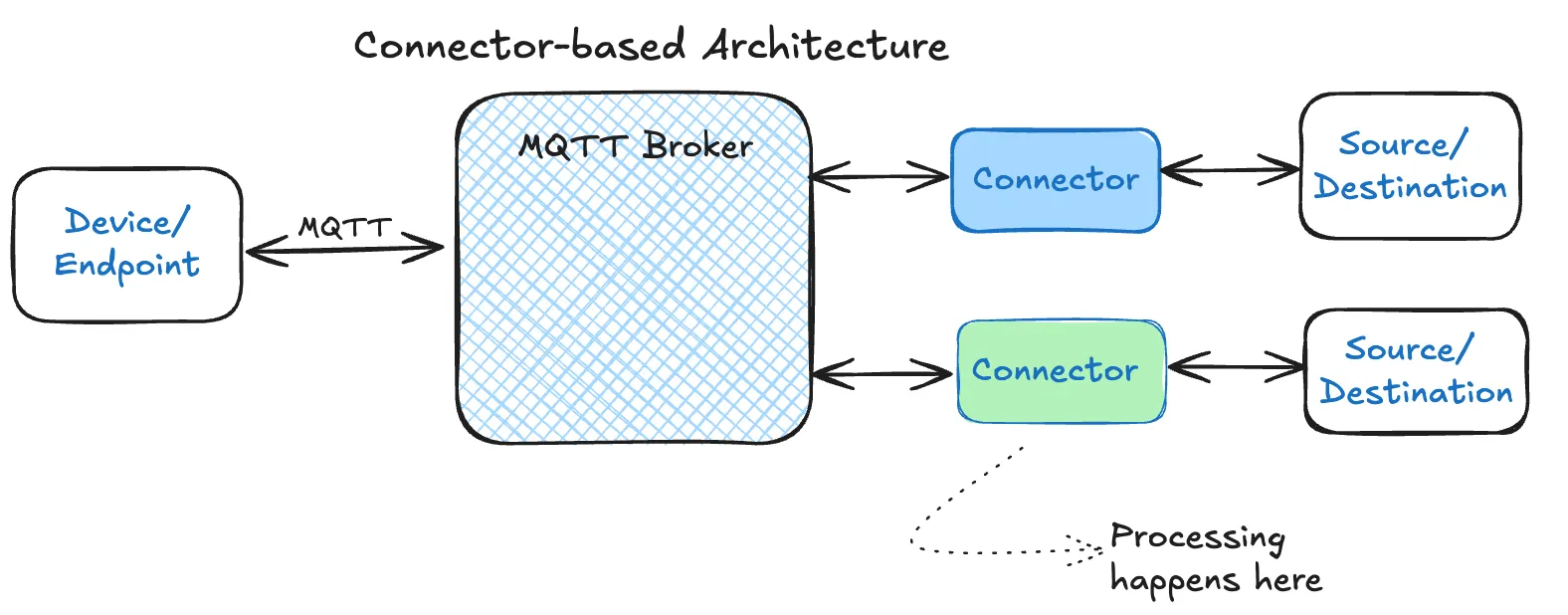

Connector frameworks are used by some MQTT brokers to enable integration with external systems like databases or cloud services. These connectors typically exist as intermediaries, handling the transfer of data from the broker to external systems. While this approach looks straightforward on the surface, it often comes at the cost of added complexity, increased latency, and potential reliability issues.

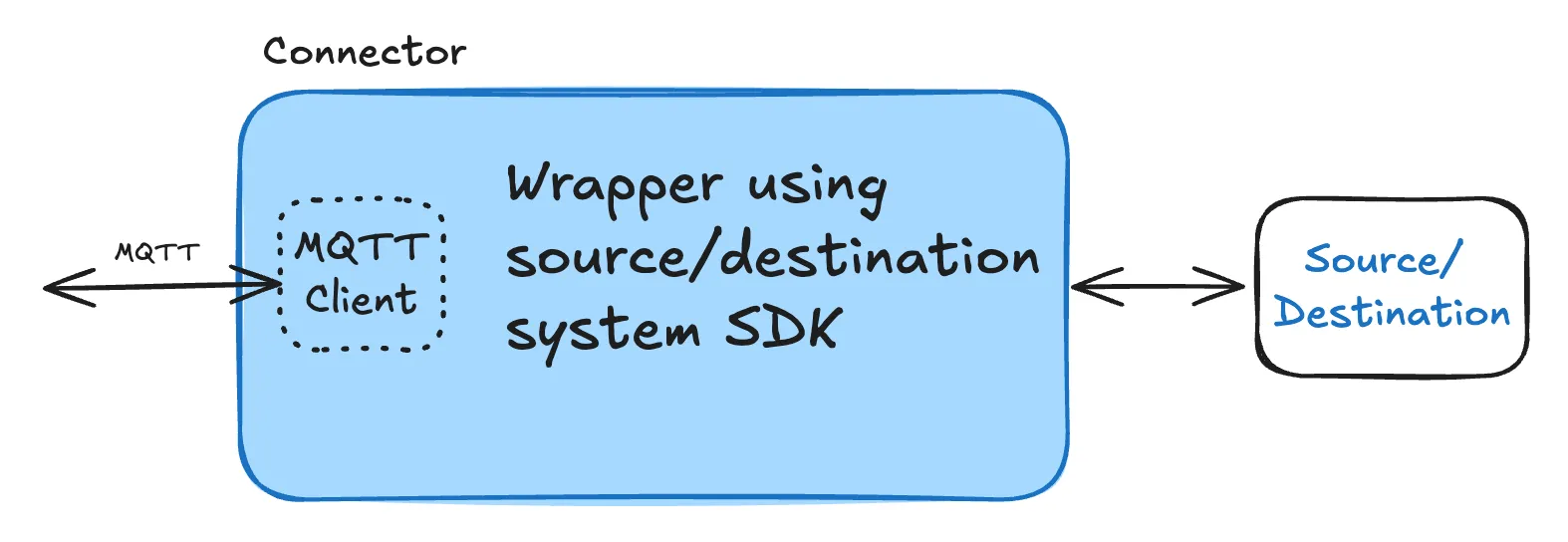

Let’s look at the elements of complexity, performance, and reliability more closely. Quite often, the ‘connector’ is basically an MQTT client with a wrapper that forwards data to the destination (or vice versa). In many implementations, this means the data flow is single-threaded and runs in a process outside the core MQTT broker process. Here is a closer look at the connector:

This design caps the performance of the whole data pipeline at the performance of the MQTT client embedded in the connector application. Yes, you can add more MQTT clients and balance the load across multiple connections, but then you must actively manage those connections and scale the connector’s infrastructure in close synchronization with the broker, which is complex and challenging.

Now picture having to add more client connections to the connector as your throughput needs change. A quick Google search turns up several vendors that put limits on what they call “delivery points” or “gateway processors” when describing how to integrate with other services. Such limits cause serious scalability issues: you might add thousands (or even millions) of endpoints in the field, while the downstream applications can’t scale to support them.

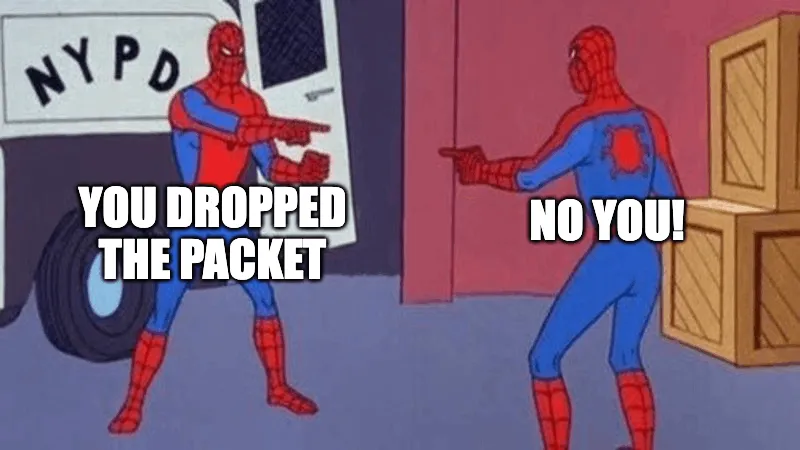

While additional client connections are coming online, the broker keeps sending messages to the subscriber client embedded in the connector. If messages keep arriving faster than the available clients can take them, the broker eventually starts dropping messages. Alternatively, the client accepts the messages but can’t process them fast enough.

Merely setting the subscriptions to QoS 1 (at-least-once delivery) and assuming the same guarantee holds throughout this scaling process is incorrect. The end-to-end guarantee also depends on how the connector handles delivery going downstream into, for example, Kafka.

Why? For QoS 1 subscriptions, the broker queues messages for a client that is too slow to keep up. Once the queue limit is reached, messages are dropped. Different brokers take different approaches to this queuing.
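To make the queue-limit behavior concrete, here is a toy Python model of one slow QoS 1 subscriber. It is a sketch under stated assumptions, not how any particular broker works: the function name `simulate_slow_subscriber`, the tick-based timing, and the policy of dropping new messages once the queue is full are all illustrative choices.

```python
from collections import deque


def simulate_slow_subscriber(publish_rate, consume_rate, queue_limit, ticks):
    """Toy model of a broker-side queue for one slow QoS 1 subscriber.

    Each tick the broker tries to enqueue `publish_rate` messages and the
    subscriber drains up to `consume_rate`. When the queue is full, new
    messages are dropped (one possible policy; real brokers differ).
    Returns (delivered, dropped, still_queued).
    """
    queue = deque()
    delivered = dropped = 0
    for _ in range(ticks):
        for _ in range(publish_rate):
            if len(queue) < queue_limit:
                queue.append(object())  # placeholder message
            else:
                dropped += 1            # queue limit reached: message lost
        for _ in range(min(consume_rate, len(queue))):
            queue.popleft()
            delivered += 1
    return delivered, dropped, len(queue)


if __name__ == "__main__":
    # Hypothetical numbers: 100 msg/tick published, 80 msg/tick consumed.
    print(simulate_slow_subscriber(100, 80, 500, 50))   # (4000, 580, 420)
    print(simulate_slow_subscriber(100, 100, 500, 50))  # (5000, 0, 0)
```

With a publish rate 25% above the consume rate, the queue fills after 21 ticks, and from then on 20 messages per tick are dropped while the subscriber keeps reporting a steady 80 messages per tick, which is exactly why such losses are easy to miss.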

We also need to recognize that the connector itself can keep a queue of messages to process, which creates additional complexity and monitoring requirements.
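How the connector acknowledges messages it has taken off the broker decides whether the QoS 1 guarantee survives a downstream failure. The following toy model compares a connector that sends its PUBACK as soon as it has a message with one that acknowledges only after the downstream write succeeds. `FlakySink` and `run_connector` are hypothetical names, no real MQTT or Kafka client is involved, and each "round" stands in for the broker redelivering unacknowledged messages (for example after a reconnect with a persistent session).

```python
class FlakySink:
    """Hypothetical downstream system (think: a Kafka producer) whose first
    write of each payload listed in `fail_on` fails."""

    def __init__(self, fail_on):
        self.fail_on = set(fail_on)
        self.stored = []

    def write(self, payload):
        if payload in self.fail_on:
            self.fail_on.discard(payload)  # only the first attempt fails
            raise OSError(f"downstream write failed for {payload!r}")
        self.stored.append(payload)


def run_connector(messages, sink, ack_after_write, max_rounds=3):
    """Forward messages to `sink`. Unacknowledged messages are redelivered in
    the next round; acknowledged messages are gone from the broker."""
    pending = list(messages)
    for _ in range(max_rounds):
        unacked = []
        for msg in pending:
            if ack_after_write:
                try:
                    sink.write(msg)
                except OSError:
                    unacked.append(msg)  # no PUBACK: the broker keeps it
                # on success, the PUBACK would be sent here
            else:
                # PUBACK first: the broker discards its copy immediately
                try:
                    sink.write(msg)
                except OSError:
                    pass  # no copy left anywhere: the message is lost
        pending = unacked
        if not pending:
            break
    return sink.stored


if __name__ == "__main__":
    msgs = ["m1", "m2", "m3"]
    print(run_connector(msgs, FlakySink({"m2"}), ack_after_write=False))
    # ['m1', 'm3']
    print(run_connector(msgs, FlakySink({"m2"}), ack_after_write=True))
    # ['m1', 'm3', 'm2']
```

In the first case `m2` is silently lost; in the second it is redelivered and stored, although in this simplified model it lands after `m3`, a reminder that retries can also disturb ordering.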

The target system (or the MQTT clients in the connector) will typically not even notice the dropped messages unless you add logic to detect them. This raises significant reliability concerns.
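One form of such additional logic is a gap check on sequence numbers. The sketch below assumes a hypothetical convention in which every publisher stamps its messages with an application-level counter that starts at 1 and increases by 1 (for example in an MQTT v5 user property); MQTT itself adds no such end-to-end counter to application messages. `find_gaps` is an illustrative name.

```python
from collections import defaultdict


def find_gaps(received):
    """Return {publisher_id: [missing sequence numbers]}.

    `received` is an iterable of (publisher_id, seq) pairs. Assumes each
    publisher numbers its messages 1, 2, 3, ... (hypothetical convention).
    """
    seen = defaultdict(set)
    for publisher, seq in received:
        seen[publisher].add(seq)
    gaps = {}
    for publisher, seqs in seen.items():
        missing = [n for n in range(1, max(seqs) + 1) if n not in seqs]
        if missing:
            gaps[publisher] = missing
    return gaps


if __name__ == "__main__":
    batch = [("sensor-1", 1), ("sensor-1", 2), ("sensor-1", 5),
             ("sensor-2", 1), ("sensor-2", 2)]
    print(find_gaps(batch))  # {'sensor-1': [3, 4]}
```

Note that a gap after the last received message stays invisible until a newer message from the same publisher arrives.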

This peculiar behavior is not rare, and it is very difficult to troubleshoot in a real-life deployment. However elastic a connector might appear on the surface, you have to understand the underlying process and how it handles the changing demands of the data pipeline.

The impact? Keeping the ‘connector’ in good health requires staff involvement and continuous monitoring. This is especially true when integrating with high-value cloud services for, say, real-time analytics, because the health and performance of the connector can directly affect the performance of a business process.

One more pain point before we talk about the good stuff: Ordering.

As discussed above, a connector needs to pull traffic from the MQTT broker and spread it over multiple connections to increase overall throughput. One way of achieving this is with shared subscriptions. The downside is that the ordering of the message stream coming from the MQTT broker is lost, because messages are distributed across several subscribers that process them independently. Ordering isn’t critical in all use cases, but it is something to keep in mind from an architecture perspective: the downstream application must then cope with out-of-order messages, for example by being idempotent or by re-sequencing them. This can hurt overall latency and increase the processing power and logic needed downstream.
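The ordering problem can be reproduced with a small toy model. The sketch assumes, purely for illustration, that the broker hands messages to the members of a shared subscription in strict round-robin order (real brokers may use other distribution strategies) and that each connector client forwards messages downstream after a fixed, hypothetical processing latency. `shared_subscription_order` is an illustrative name.

```python
def shared_subscription_order(messages, member_latencies):
    """Return the order in which a downstream system receives `messages`.

    Toy assumptions: message i reaches the broker at time i, the broker hands
    messages to shared-subscription members in strict round-robin order, each
    member processes one message at a time, and member k needs
    member_latencies[k] time units per message.
    """
    busy_until = [0] * len(member_latencies)
    completions = []
    for arrival, msg in enumerate(messages):
        member = arrival % len(member_latencies)   # round-robin dispatch
        start = max(arrival, busy_until[member])  # wait until member is free
        done = start + member_latencies[member]
        busy_until[member] = done
        completions.append((done, arrival, msg))
    # Downstream sees messages in completion order (ties broken by arrival).
    return [msg for _, _, msg in sorted(completions)]


if __name__ == "__main__":
    msgs = ["t1", "t2", "t3", "t4"]
    print(shared_subscription_order(msgs, [3, 1]))  # ['t2', 't1', 't4', 't3']
    print(shared_subscription_order(msgs, [1]))     # ['t1', 't2', 't3', 't4']
```

With one slow and one fast member, the downstream system sees `t2` before `t1` and `t4` before `t3`; with a single member, the original order survives, but so does the single-client throughput ceiling.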

Shared subscriptions are an optional feature of the MQTT v5.0 specification, and HiveMQ supports them. If you have to take the connector path (we cover later when that makes sense), a better way to support multiple connections into the connector is to declare the shared subscription, with its name and attributes, on the broker. This instructs the broker to hold on to the messages while new connections come online.
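Per the MQTT v5.0 specification, a client joins a shared subscription by subscribing to a topic filter of the form `$share/{ShareName}/{filter}`, where the share name must not contain `/`, `+`, or `#`. The helpers below (hypothetical names `shared_filter` and `parse_shared_filter`) build and parse such filters; `kafka-connector` and `factory/+/telemetry` are made-up examples. This only covers the subscription string that each connector client would use; how a shared subscription is declared on the broker side is product-specific and not shown here.

```python
SHARE_PREFIX = "$share/"
FORBIDDEN_IN_SHARE_NAME = "/+#"


def shared_filter(share_name, topic_filter):
    """Build an MQTT v5 shared-subscription filter: $share/{ShareName}/{filter}."""
    if not share_name or any(c in share_name for c in FORBIDDEN_IN_SHARE_NAME):
        raise ValueError(f"invalid share name: {share_name!r}")
    if not topic_filter:
        raise ValueError("topic filter must not be empty")
    return f"{SHARE_PREFIX}{share_name}/{topic_filter}"


def parse_shared_filter(subscription):
    """Split a shared-subscription filter into (share_name, topic_filter).

    Returns None for an ordinary (non-shared) topic filter.
    """
    if not subscription.startswith(SHARE_PREFIX):
        return None
    rest = subscription[len(SHARE_PREFIX):]
    share_name, sep, topic_filter = rest.partition("/")
    if not sep or not share_name or not topic_filter or any(c in share_name for c in "+#"):
        raise ValueError(f"malformed shared subscription: {subscription!r}")
    return share_name, topic_filter


if __name__ == "__main__":
    # Every connector client subscribes with the same hypothetical filter,
    # so the broker spreads matching messages across them.
    f = shared_filter("kafka-connector", "factory/+/telemetry")
    print(f)                       # $share/kafka-connector/factory/+/telemetry
    print(parse_shared_filter(f))  # ('kafka-connector', 'factory/+/telemetry')
```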

Now, the case for plug-in architectures.

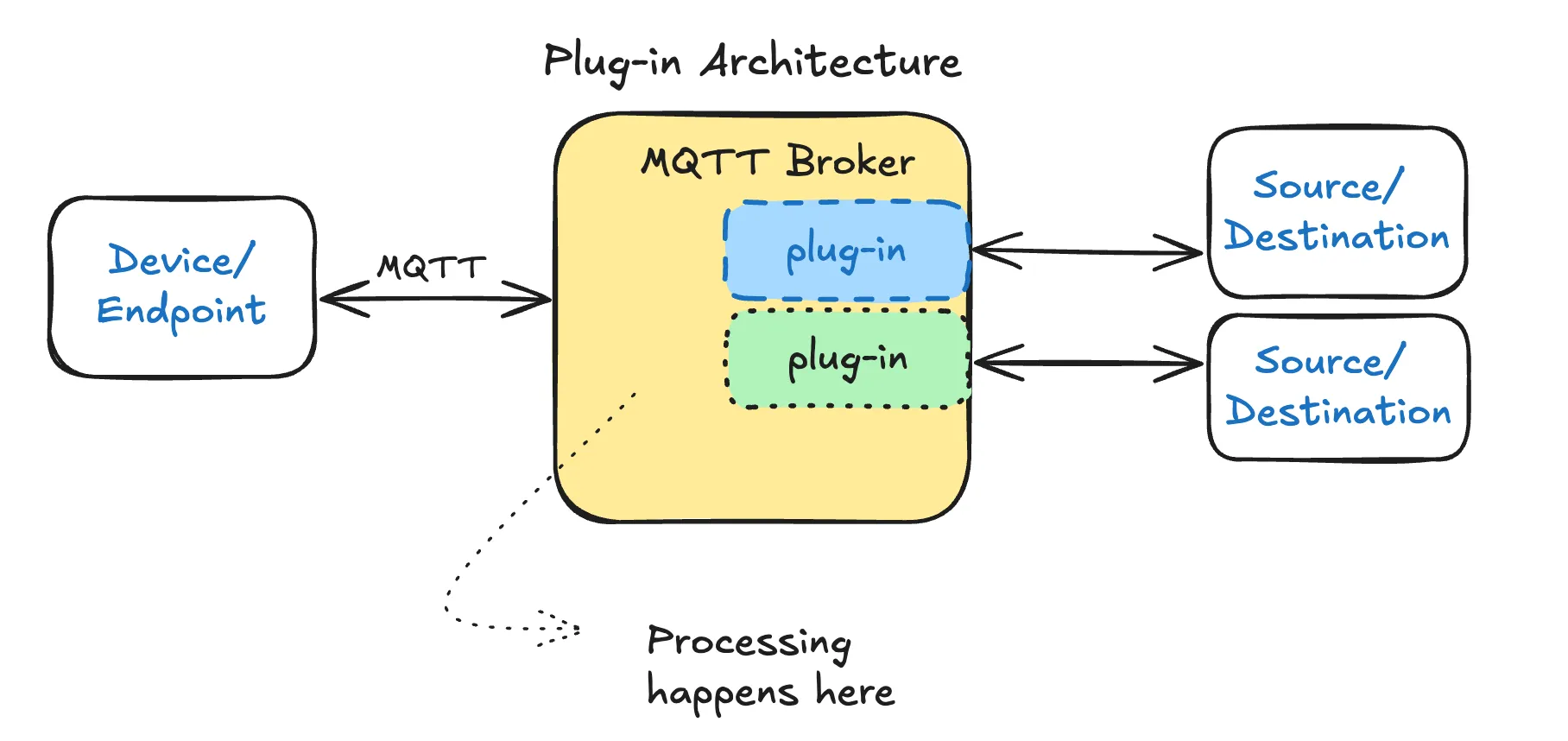

Plug-in architectures allow for deeper integration directly within the broker, where data is processed and managed inside the broker’s environment. This enables lower latency, tighter integration, and better control over data flows.

As shown in the visual above, a plug-in is part of the MQTT broker process. If activated, it starts together with the broker, so there is no gap between when this integration comes online and when the MQTT broker starts exchanging data with it.

If an MQTT cluster has, say, six nodes, the plug-in runs on all six and shares the traffic to and from the target system. When you scale the nodes, you automatically scale the plug-in with them. Contrast this with connector frameworks, where the two sides (broker and connector) must be constantly and carefully balanced when scaling.
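A back-of-the-envelope model makes the scaling contrast explicit. It assumes, hypothetically, that end-to-end throughput is capped by the slowest stage, that each broker node, connector client, and per-node plug-in has a fixed capacity, and that the target system can absorb whatever arrives. The function names and all rates (in messages per second) are made up for illustration.

```python
def connector_pipeline_rate(broker_nodes, node_rate, connector_clients, client_rate):
    """External connector: capped by the smaller of broker and connector capacity."""
    broker_capacity = broker_nodes * node_rate
    connector_capacity = connector_clients * client_rate
    return min(broker_capacity, connector_capacity)


def plugin_pipeline_rate(broker_nodes, node_rate, plugin_rate_per_node):
    """In-broker plug-in: every node forwards its own share of the traffic."""
    return broker_nodes * min(node_rate, plugin_rate_per_node)


if __name__ == "__main__":
    for nodes in (6, 12):
        print(nodes,
              connector_pipeline_rate(nodes, 20_000, 4, 5_000),
              plugin_pipeline_rate(nodes, 20_000, 15_000))
    # 6 20000 90000
    # 12 20000 180000
```

Doubling the cluster from six to twelve nodes doubles the plug-in pipeline’s throughput in this model, while the connector pipeline stays pinned at what its four clients can handle until someone adds and rebalances more of them.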

This also improves the reliability of the data pipeline, because the broker can queue messages in-process when the target system isn’t available (given the relevant session and QoS settings). This guarantees at-least-once delivery (QoS 1) and also preserves message ordering (with some caveats). No more lost messages, and no additional processing on the target systems to re-sequence them.
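The in-process queuing described above can be sketched as follows. This is a simplified illustration, not HiveMQ’s implementation: `InProcessForwarder` and `Target` are hypothetical names, each topic gets its own FIFO queue, and a message leaves its queue only once the target confirms it.

```python
from collections import defaultdict, deque


class Target:
    """Hypothetical target system that is unavailable for its first `outages` calls."""

    def __init__(self, outages):
        self.outages = outages
        self.received = []

    def send(self, topic, payload):
        if self.outages > 0:
            self.outages -= 1
            return False  # unavailable: the caller must retry later
        self.received.append((topic, payload))
        return True


class InProcessForwarder:
    """Integration running inside the broker: one FIFO per topic, and a
    message is removed only after the target confirms it."""

    def __init__(self, target):
        self.target = target
        self.queues = defaultdict(deque)

    def on_publish(self, topic, payload):
        self.queues[topic].append(payload)

    def flush(self):
        for topic, queue in self.queues.items():
            while queue:
                if not self.target.send(topic, queue[0]):
                    break  # keep the head in place so per-topic order survives
                queue.popleft()

    def pending(self):
        return sum(len(q) for q in self.queues.values())


if __name__ == "__main__":
    target = Target(outages=2)
    fwd = InProcessForwarder(target)
    for i in range(3):
        fwd.on_publish("plant/line1", f"reading-{i}")
    fwd.flush()  # target down: nothing delivered, nothing dropped
    fwd.flush()  # still down
    fwd.flush()  # target back: the queue drains in order
    print(target.received)
```

Because the head of each queue stays in place until it is confirmed, per-topic order is preserved across the outage. A real at-least-once pipeline must also handle the case where the target stored a message but the confirmation was lost, which triggers a resend and therefore a possible duplicate; this toy model does not simulate that.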

When does a connector-based approach make sense?

A connector-based approach is useful for quick prototyping and validation, and some enterprises might try it before looking for a scalable and reliable solution to build their data pipeline.

It also enables some boutique/specialized software companies to build a solution that includes an MQTT broker. They might then offer this as a custom or managed solution to customers. It’s mostly relevant for low-stakes and non-business-critical applications.

HiveMQ Enterprise Extensions: Plug-in Framework in Action

HiveMQ uses a plug-in architecture, and the product feature for extending its capabilities is called HiveMQ Enterprise Extensions. These extensions allow seamless integration with other services while maintaining the broker's high performance and reliability standards.

Advantages of HiveMQ Enterprise Extensions:

| Advantage | Description |
|---|---|
| Tight Integration | Extensions integrate directly into the broker’s core, allowing optimized data handling and processing. |
| Lower Latency | Because data is processed within the broker, latency is minimized, making extensions ideal for real-time applications. |
| Customizability | You have built-in control over how each extension behaves, plus the option to use the Extensions SDK to tailor an extension to your specific needs, ensuring flexibility and precision. |
| Scalability | Extensions scale with the broker and deliver higher throughput to target systems without reconfiguring the integration. |
| MQTT-spec Guarantees | Extensions use the broker’s own mechanisms to adhere to MQTT guarantees such as topic-level ordering and QoS levels. |

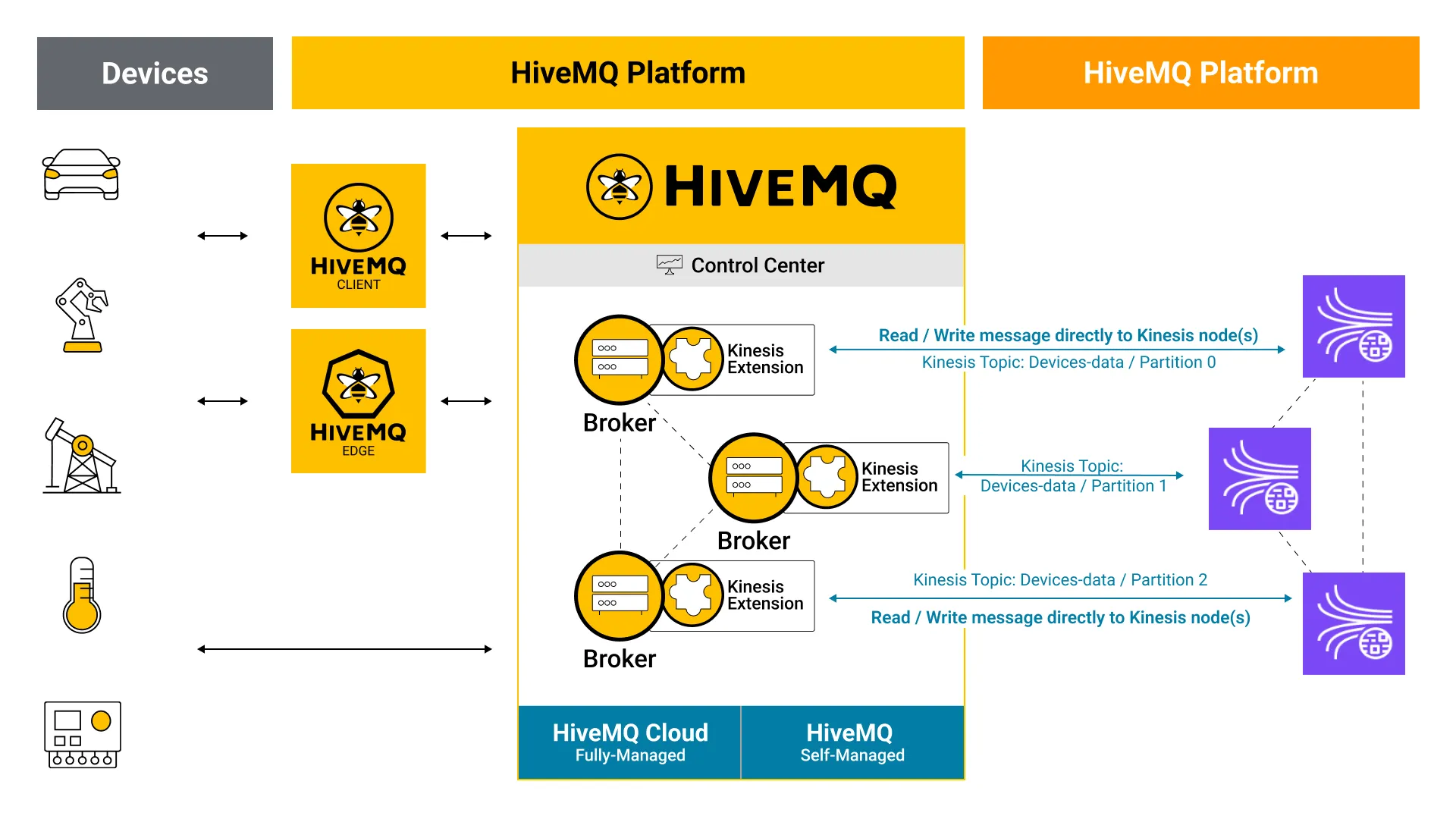

Example: Integrating with AWS Kinesis Using HiveMQ Enterprise Extensions

HiveMQ Enterprise Extensions provide a straightforward way to integrate with external systems like AWS Kinesis. Below is a sample architecture that shows the bi-directional mapping.

This setup showcases how HiveMQ Enterprise Extensions manage integration directly within the broker, providing high-performance scalable data streaming with minimal external dependencies.
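As an illustration of the MQTT-to-Kinesis direction only, the sketch below maps MQTT publishes to the arguments of a Kinesis `PutRecord` call (`StreamName`, `Data`, `PartitionKey`, as accepted by boto3’s `put_record`). The routing table, stream names, and the functions `topic_matches` and `to_kinesis_record` are hypothetical and do not reflect the extension’s actual configuration format. Using the MQTT topic as the partition key sends all records of one topic to the same shard, and Kinesis preserves order within a shard.

```python
def topic_matches(topic_filter, topic):
    """Minimal MQTT topic-filter match supporting '+' and '#'.

    Simplification: ignores the rule that wildcards at the first level do
    not match topics starting with '$'.
    """
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            return True  # matches the parent level and everything below it
        if i >= len(topic_levels):
            return False
        if level != "+" and level != topic_levels[i]:
            return False
    return len(filter_levels) == len(topic_levels)


# Hypothetical routing table: MQTT topic filter -> Kinesis stream name.
ROUTES = [
    ("factory/+/telemetry", "telemetry-stream"),
    ("factory/+/alarms/#", "alarm-stream"),
]


def to_kinesis_record(topic, payload, routes=ROUTES):
    """Return keyword arguments for a Kinesis PutRecord call, or None if no
    route matches. The topic is the partition key, so all records of one
    topic land on the same shard."""
    for topic_filter, stream in routes:
        if topic_matches(topic_filter, topic):
            return {"StreamName": stream, "Data": payload, "PartitionKey": topic}
    return None


if __name__ == "__main__":
    record = to_kinesis_record("factory/berlin/telemetry", b'{"temp": 21.5}')
    print(record["StreamName"])  # telemetry-stream
    # With boto3 and AWS credentials, one could then call:
    # boto3.client("kinesis").put_record(**record)
```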

For details on settings specific to this extension and related technical specifications, visit this section in HiveMQ documentation: https://docs.hivemq.com/hivemq-amazon-kinesis-extension/latest/index.html

HiveMQ offers a variety of extensions that cover the full gamut of capabilities required by a modern enterprise:

| Purpose | HiveMQ Extension |
|---|---|
| Data Streaming | Amazon Kinesis, Google Cloud Pub/Sub, Apache Kafka |
| Databases and Data Analytics | Data Lake, Snowflake, MongoDB, MySQL, PostgreSQL, CockroachDB, MariaDB, TimescaleDB |
| Platform Operation | Distributed Tracing (OpenTelemetry), Enterprise Security, Bridge Extension |

There is also a variety of community-created and community-contributed extensions, such as InfluxDB, Splunk, and AWS CloudWatch. The entire list is available on our Extensions page.

Tailoring HiveMQ to your needs: A standout feature of HiveMQ is the ability to develop custom extensions with the HiveMQ Community Extension SDK. This flexibility allows enterprises to create extensions that integrate with bespoke systems or apply custom data processing logic that is unique to their business needs.

In Conclusion

The Risk of "Kicking the Can Down the Road"

While connector frameworks offer flexibility on the surface, they often offload critical integration tasks (delivery guarantees, sequencing, scaling, etc.) to external systems, which can introduce latency, complexity, and reliability issues. In contrast, a plug-in architecture lets integrations use MQTT’s scaling and reliability guarantees from right within the broker, which makes the entire data flow, from ingestion through processing to external integration, easier to optimize and manage.

HiveMQ’s plug-in architecture, embodied in its Enterprise Extensions, provides a robust, high-performance solution that integrates deeply with the broker, ensuring that your enterprise needs for scalability, security, and reliability are fully met.

When designing your next system, consider how these approaches align with your goals. HiveMQ’s plug-in framework, coupled with the flexibility of custom extension development, might just be the key to unlocking a seamless, high-performance integration strategy.

Gaurav Suman

Gaurav Suman, Director of Product Marketing at HiveMQ, is an electronics and communications engineer with a background in Solutions Architecture and Product Management. He has helped customers adopt enterprise middleware, storage, blockchain, and business collaboration solutions. Passionate about technology’s impact on customers, he has been at HiveMQ since 2021 and is based in Ottawa, Canada.