Improve IT-OT Collaboration with HiveMQ’s Custom Modules for Data Hub: Part 1

In modern factory environments, operational efficiency is hindered by excessive data volumes, protocol mismatches, and complex data quality enforcement. These challenges increase storage and processing costs, slow decision-making, and create integration hurdles between IT and OT systems. Without standardized data transformation and schema mapping, IT struggles with inconsistent data formats, while OT teams face manual intervention and operational disruptions. This lack of alignment results in siloed solutions, higher maintenance efforts, and poor data governance. To stay competitive, manufacturers need a seamless way to optimize data handling, ensure high data quality, and bridge IT-OT gaps while reducing operational overhead.

HiveMQ’s Modules for Data Hub address these pain points by providing a plug-and-play approach to data transformation, behavioral transformation, and data schema conversion. They fit industrial use cases that require mapping data to different schemas, transforming data, and managing device interactions with the MQTT broker.

With Custom Modules, IT developers can package any data schema, data policies, and transformation scripts as a single plug-and-play package that can be shared across your organization to process machine and systems data coming from your factory floor “in flight”.

In this blog post, we discuss a use case involving data filtering and transformation, and how enterprises can benefit from HiveMQ’s Custom Modules for Data Hub.

Recap on Terminology

Before we jump into the use case, let’s revisit some of the key definitions related to it.

Data Hub: HiveMQ Data Hub, a part of the HiveMQ Platform, is an integrated policy and data transformation engine designed to ensure data integrity and quality across your MQTT deployment. It enables businesses to enforce data policies, transform schemas, and validate incoming messages, ensuring that IoT data is accurate, standardized, and ready for decision-making.

Data Schemas: A data schema is a blueprint that defines the structure data must follow. HiveMQ supports JSON and Protobuf schema types out of the box.

Data Policy: A set of instructions that tell Data Hub how to process incoming messages, using schemas as the blueprint. A data policy is itself written in JSON.

Data Transformation Scripts: A JavaScript add-on to the data policy that allows you to modify or convert data before it is sent further from the MQTT Broker.

Use Case: Reducing Data Overload in a Smart Factory by Filtering Specific Sparkplug Metrics

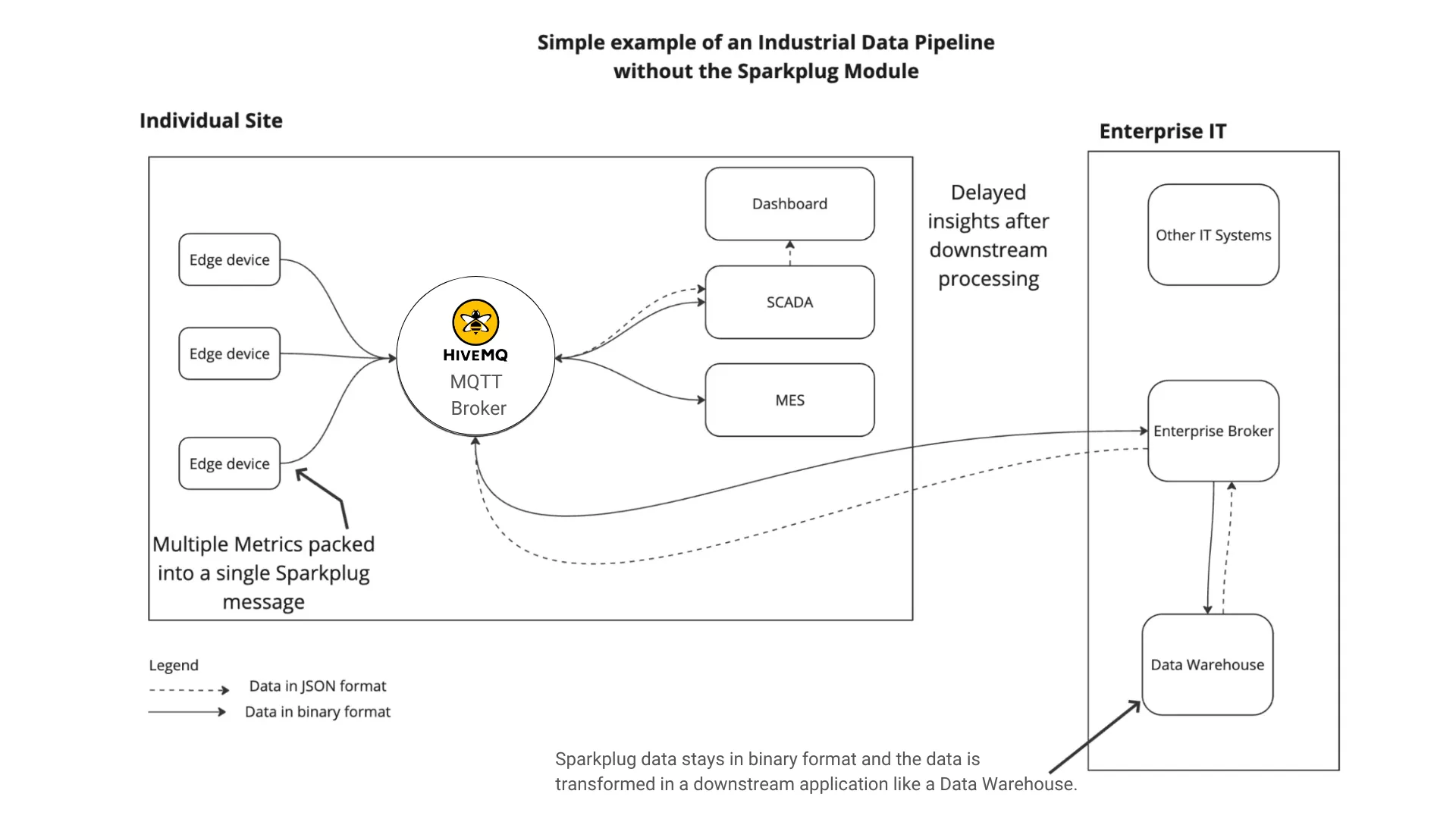

In a previous article, I discussed how Sparkplug is used and the need to transform the data. To summarize, Sparkplug is designed to meet the unique needs of industrial environments, providing a reliable, low-latency method for sharing data across systems. Additionally, Sparkplug supports stateful data management, which allows devices and applications to detect when data sources go offline, which is critical for continuous factory floor operations.

However, the Sparkplug protocol comes with challenges, such as its rigid topic structure and its Protobuf payload format. This binary format isn’t human-readable, which makes it unsuitable for many downstream applications and hinders collaboration and decision-making.

In that article, we used a ready-to-use Module that fans out every metric to a separate topic. However, fanning out every metric is not always the optimal solution to address the problem of excessive data, especially in cases where you may have hundreds or even thousands of metrics packed in binary-encoded Sparkplug messages. Large enterprises operate at a different level of scale and complexity. Some of their challenges are:

Incoming data complexity: For an enterprise with multiple factory sites across the world and thousands of metrics, how do they monitor a subset? In that scenario, fanning out every single metric would not be a good choice. Ideally, you would like to get a subset of metrics that are relevant to your operations.

Scale of operation, maintenance, and deployment: Scaling such a global operation to more than one factory is a daunting task. Ensuring uniformity in operation requires an easy way to share the data schemas, data policies, and the transformation script reliably, ideally via already established best practices such as DevOps.

Skillset diversity across departments: In such a large enterprise, an IoT developer might find it easy to work with JavaScript, data policies, and data schemas, whereas a factory floor operations manager might find it distracting and would prefer something more “straightforward” to quickly get to relevant metrics providing real-time insight to do their job effectively.

Custom Modules for Data Hub offer enterprises both flexibility and ease-of-use to overcome these challenges.

Using Custom Modules to Solve the Challenge of Data Overhead

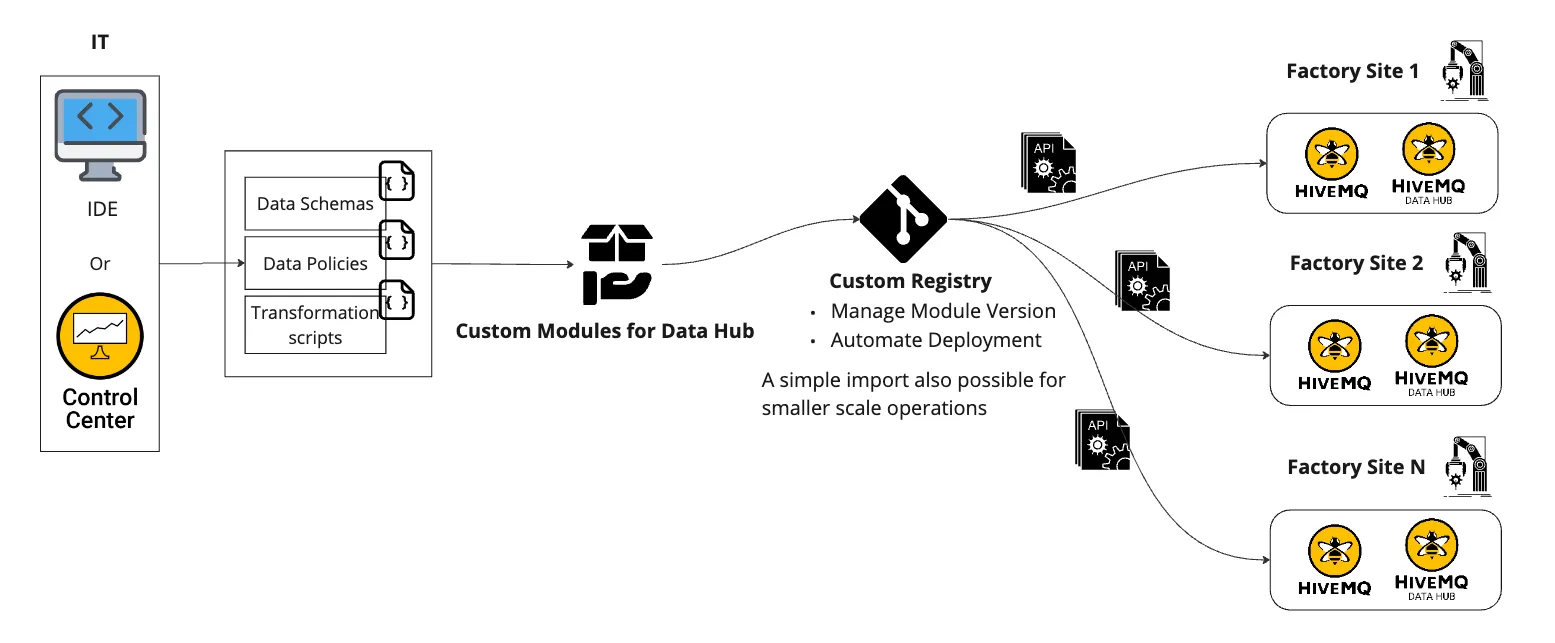

With Custom Modules, you can bundle data schemas, data policies, and transformation scripts into a single easy-to-use package. In a large enterprise, this can be done by experts such as IT developers. Once a Custom Module is created, you can manage its lifecycle centrally with the help of custom registry support.

Enterprises can build and host a module registry for Data Hub using an HTTP server within the local network. Once configured, a Custom Module can be instantiated the same way as one of the pre-configured HiveMQ modules. All available modules from your configured registry appear in the Control Center. One custom registry can serve multiple HiveMQ deployments. Once a new module version is compiled into a single module file within a CI pipeline, you can use the REST API to roll out a module automatically. This allows you to fully support automated CI pipelines as well as version-control your Modules.

Let’s discuss how to create a Custom Module for this use case via HiveMQ’s Control Center. We will address how to use custom registries and REST APIs to achieve the same in a follow-up blog.

Step 1: Create a Module for filtering specific Sparkplug messages

In our previous blog series (Sending Filtered MQTT Sparkplug Messages to MongoDB for IIoT Data Transformation: Part 1 and Part 2), we showed a step-by-step method to create data schemas, policies, and scripts for this exact scenario.

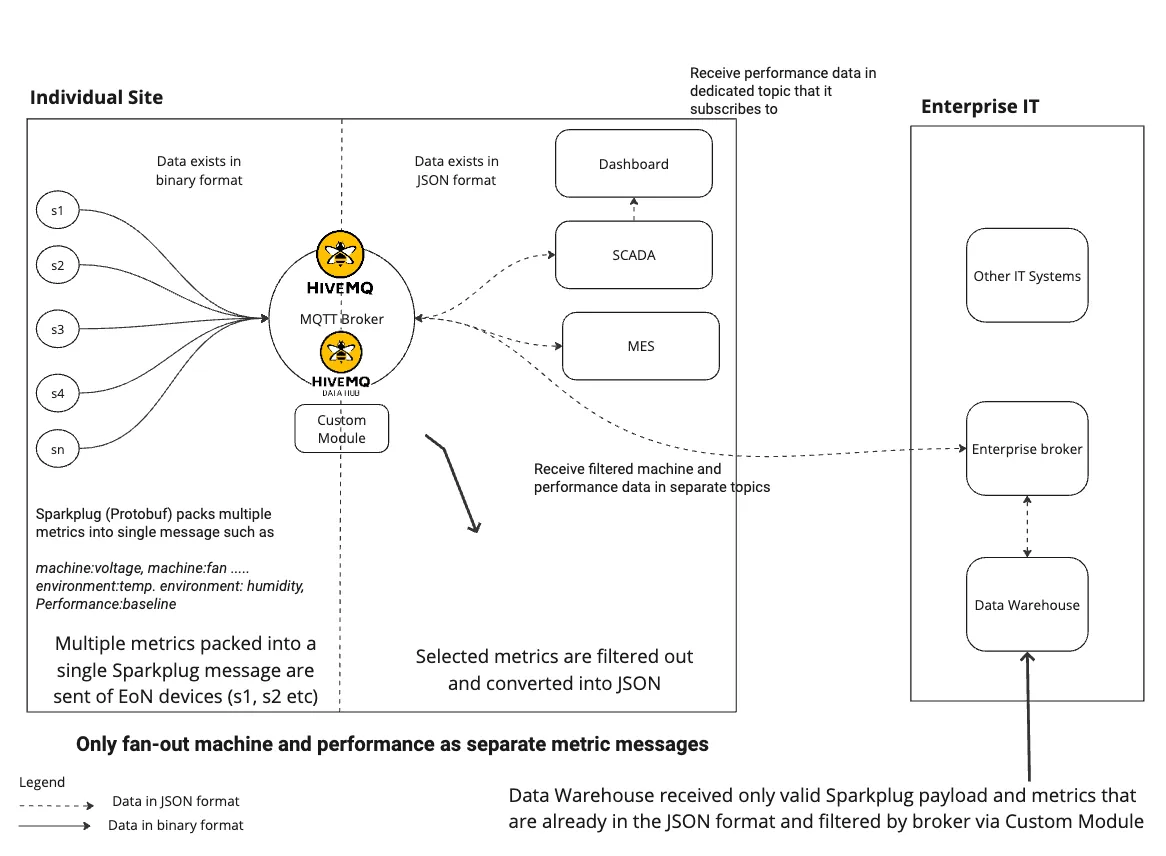

To summarize, when an MQTT Sparkplug message arrives from the device at the broker, the following steps occur:

Deserialization: The Protobuf-encoded message is deserialized into a readable format using the defined Protobuf schema.

Transformation: The transformation script processes the message, filtering metrics based on the allowList. Allowed metrics are fanned out to new MQTT topics.

Serialization: The filtered metrics are serialized into JSON format.

Forwarding: The JSON-formatted metrics are published to their respective MQTT topics.
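
The filtering and fan-out logic in the steps above can be sketched in plain JavaScript. This is a minimal illustration, not the script from the earlier series: the function name fanOutAllowedMetrics, the simplified metric shape (name, value, timestamp), and the convention of appending the metric name to the incoming topic are all assumptions made for the example. Wiring the function into Data Hub's script entry point is intentionally left out.

```javascript
// Hypothetical helper: the name, the payload shape, and the topic layout
// are assumptions for illustration, not part of the HiveMQ API.
function fanOutAllowedMetrics(topic, payload, allowList) {
  const allowed = new Set(allowList);
  // A decoded Sparkplug payload is assumed to carry a "metrics" array.
  const metrics = Array.isArray(payload.metrics) ? payload.metrics : [];

  return metrics
    .filter((metric) => allowed.has(metric.name)) // keep only allow-listed metrics
    .map((metric) => ({
      // One outgoing message per metric, published below the original topic.
      topic: `${topic}/${metric.name}`,
      // Serialize each metric as its own JSON document.
      payload: JSON.stringify({
        name: metric.name,
        value: metric.value,
        timestamp: metric.timestamp,
      }),
    }));
}

// Example input: what a deserialized message might look like (simplified).
const decoded = {
  timestamp: 1700000000000,
  metrics: [
    { name: "temperature", value: 71.5, timestamp: 1700000000000 },
    { name: "vibration", value: 0.02, timestamp: 1700000000000 },
    { name: "pressure", value: 3.1, timestamp: 1700000000000 },
  ],
};

const outgoing = fanOutAllowedMetrics(
  "spBv1.0/plant1/DDATA/edge1/press7",
  decoded,
  ["temperature", "pressure"]
);

for (const message of outgoing) {
  console.log(message.topic, message.payload);
}
```

Keeping the allowList as an argument rather than hard-coding it means the same logic can serve different sites that care about different metrics.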

Step 2: Validate that you have all the necessary Data Hub artifacts needed for the Custom Module

Once you do everything described in Step 1, you will have successfully created the following artifacts:

Schemas:

a. Schema with ID Sparkplug-format and Schema Type Protobuf

b. Schema with ID simple.json and Schema Type JSON

Data transformation script with ID fanout and description: filter metrics as arguments

Data Policy with ID protobuf-to-json-and-fanout and matching topic filter spBv1.0/+/DDATA/+/+. This policy executes the steps outlined in Step 1.
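
To see which messages the policy's topic filter selects, here is a small sketch of standard MQTT wildcard matching, where + matches exactly one topic level and # matches all remaining levels. The function name matchesTopicFilter and the sample topics (plant1, edge1, press7) are hypothetical; the broker performs this matching itself, so the code is only meant to illustrate the rule. It ignores special cases such as topics beginning with $.

```javascript
// Illustrative MQTT topic filter matcher (hypothetical helper, not a HiveMQ API).
function matchesTopicFilter(filter, topic) {
  const filterLevels = filter.split("/");
  const topicLevels = topic.split("/");

  for (let i = 0; i < filterLevels.length; i++) {
    const level = filterLevels[i];
    if (level === "#") return true; // multi-level wildcard matches the rest
    if (i >= topicLevels.length) return false; // topic has too few levels
    if (level !== "+" && level !== topicLevels[i]) return false; // literal mismatch
  }
  // Without a "#", filter and topic must have the same number of levels.
  return filterLevels.length === topicLevels.length;
}

const policyFilter = "spBv1.0/+/DDATA/+/+";

// Device data message: group / DDATA / edge node / device.
const deviceData = matchesTopicFilter(policyFilter, "spBv1.0/plant1/DDATA/edge1/press7");
// Device birth message: different message type, so the policy does not apply.
const deviceBirth = matchesTopicFilter(policyFilter, "spBv1.0/plant1/DBIRTH/edge1/press7");
// Missing device level: the filter requires exactly five levels.
const missingDevice = matchesTopicFilter(policyFilter, "spBv1.0/plant1/DDATA/edge1");

console.log({ deviceData, deviceBirth, missingDevice });
```

In other words, the filter targets device-level DDATA messages from any group, edge node, and device, and leaves other Sparkplug message types untouched.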

Step 3: Create a Custom Module

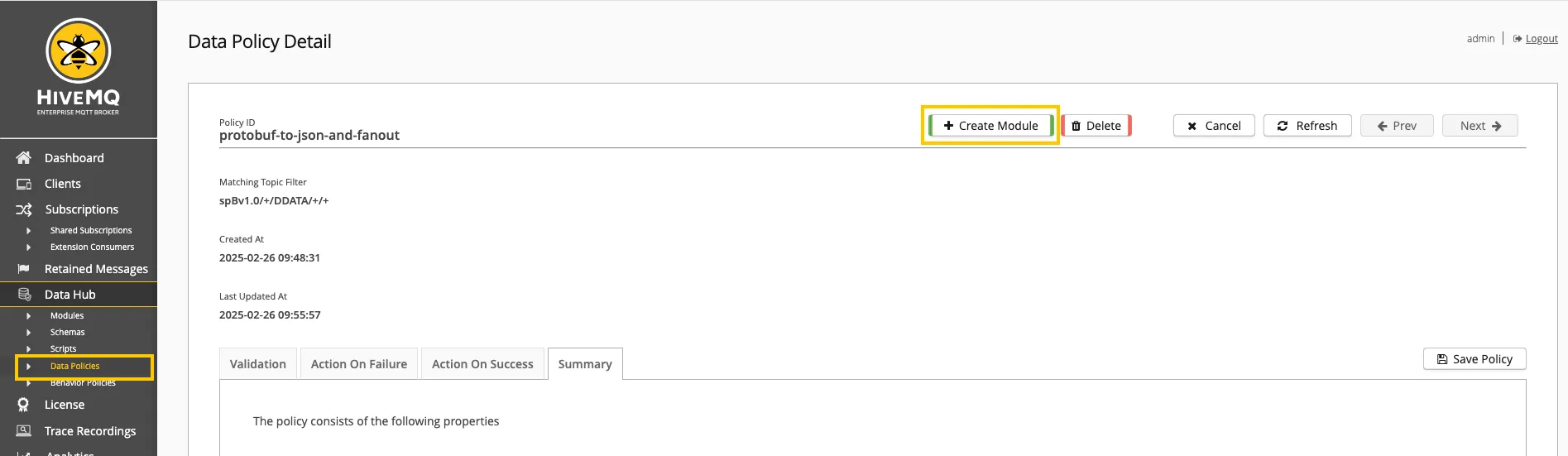

To create a Custom Module, navigate to Data Hub > Data Policies inside HiveMQ Control Center and click on the policy with ID protobuf-to-json-and-fanout.

Once there, click on the Create Module button at the top of the page.

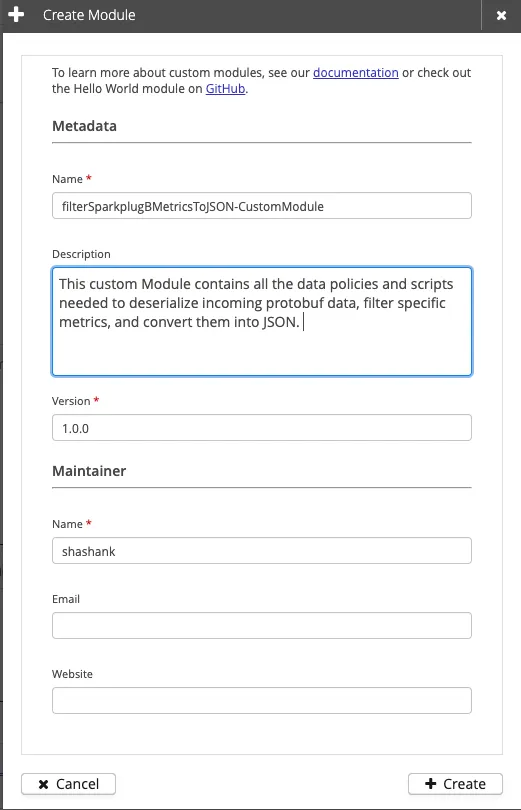

After you click it, the following pop-up will appear. Fill in the details as per your requirements.

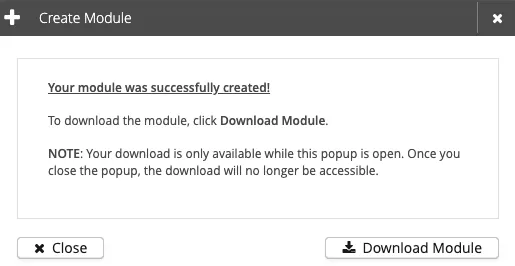

When done, click on the Create button. HiveMQ will create a Custom Module for you which is ready for download. Click on the Download Module button to save it.

Step 4: Upload your Module to the HiveMQ Control Center

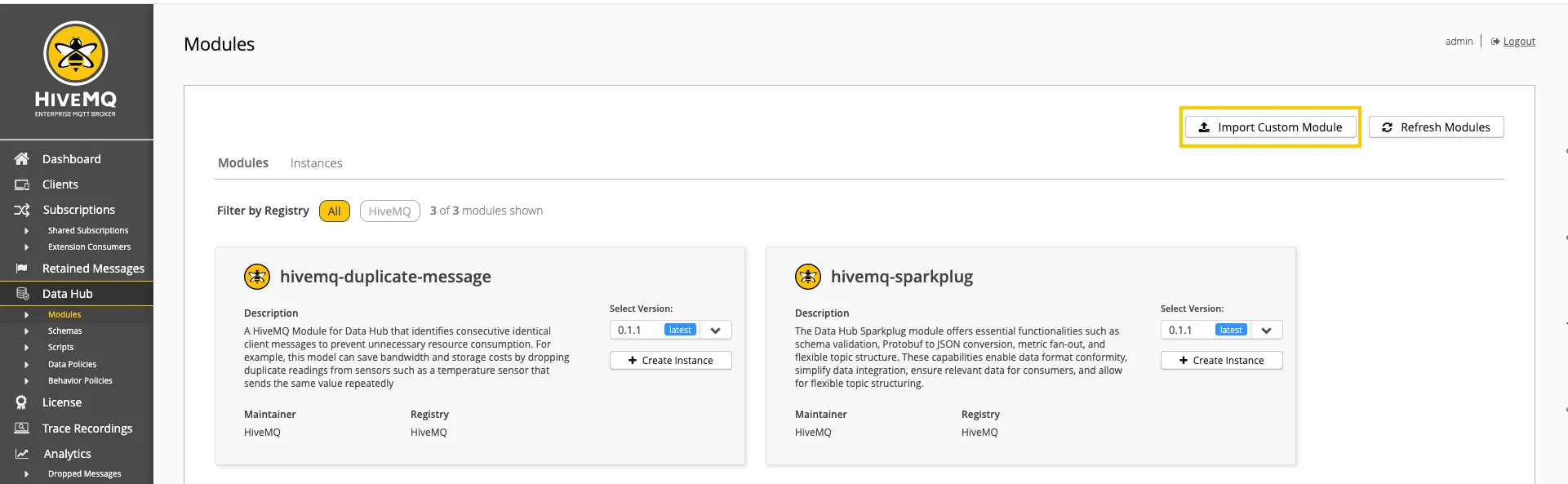

There are two ways to upload your Module. You can upload it manually to HiveMQ Control Center. This is handy in case you want to implement consistent data policies across a couple of broker instances. This can be done by navigating to Data Hub > Modules in the Control Center and clicking on Import Custom Module on the top right.

Alternatively, you can use REST APIs to create and deploy Modules across a large number of instances. This is especially useful for large enterprises with facilities spread across multiple geographies, all needing consistent data policies on their incoming MQTT data.

Let’s explore the manual approach first.

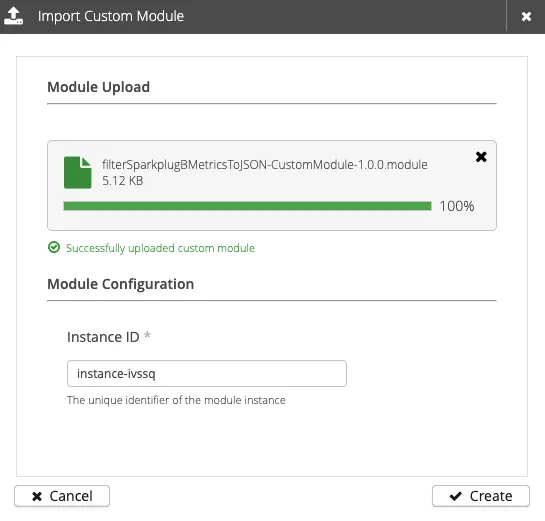

After clicking on Import Custom Module, select the Module file and click on Create.

This will upload and instantiate the Custom Module.

Now you can run this Custom Module on your broker instance. In a follow-up post, we will cover how to achieve the same results without the GUI. A simple ‘hello world’ example is already available in our GitHub repository.

Summary

In this article, we discussed the importance of improving data quality to enhance overall plant efficiency. Large enterprises struggle with managing excessive, multi-format data and extracting real-time insights. HiveMQ Data Hub addresses this challenge by enabling in-flight data transformation.

Beyond finding a solution, enterprises must deploy it consistently across sites. This is where Custom Modules for Data Hub excel, allowing organizations to package data schemas, transformation scripts, and policies into a single deployable unit, applied manually or via DevOps.

Custom Modules standardize data transformation and schema mapping, eliminating protocol mismatches that hinder integration. By enabling IT teams to package and share transformation logic as reusable modules, these Modules reduce reliance on custom, siloed solutions, ensuring consistency across the organization. This plug-and-play approach minimizes manual intervention, allowing OT teams to focus on operations while IT teams maintain governance over data flows. Additionally, real-time "in-flight" data processing ensures only relevant, high-quality data reaches IT systems, reducing noise and improving decision-making. By bridging IT-OT gaps with a unified, scalable approach, Custom Modules accelerate digital transformation.

This article covered one part of the solution: how to create a Custom Module that decodes incoming binary Sparkplug messages into JSON format, filters specific Sparkplug metrics from the whole set, and publishes them to new topics. We walked through the steps in HiveMQ Control Center, and we’ll cover an alternative approach that does not use the GUI in a follow-up article. Stay tuned.

Shashank Sharma

Shashank Sharma is a product marketing manager at HiveMQ. He is passionate about technology, supporting customers, and enabling developer-centric workflows. He focuses on the HiveMQ Cloud offerings and has previous experience in application software tooling, autonomous driving, and numerical computing.