HiveMQ Configuration with AI: A Practical Approach

HiveMQ welcomes this guest post from Moheeb Zara, an IoT expert based out of Arizona.

Configuring Dockerfiles and XML for HiveMQ MQTT Broker deployments can require a lot of know-how and patience. Using AI, we can streamline this process and iterate on solutions faster. While you shouldn’t rely solely on AI, it can be a powerful assistive tool for creating the backbone of your application.

In this blog post, we’ll look at how you can use OpenAI’s ChatGPT to customize a GPT that generates Dockerfiles and XML to deploy a secure HiveMQ Broker or a HiveMQ cluster. We’ll also demonstrate how it can be used to generate and validate JSON schemas and policies for HiveMQ Data Hub.

Customizing ChatGPT

While you can achieve a great deal of success using the uncustomized free tier of ChatGPT, subscribing to OpenAI’s paid plan gives you access to more refined and up-to-date models. It also allows you to tailor custom GPTs. For this guide, we’ve done exactly that. We created a custom GPT that has been fed several links to HiveMQ’s documentation, with an emphasis on the Docker, Validation, and Policy guides. It has also been told to prioritize up-to-date information from these sources.

ChatGPT yields more reliable results when you are specific about the context of the information you want it to draw from. You can do this by providing the relevant documentation links in the guided GPT builder and telling it when to use them, so that it returns accurate information when a user asks for Docker and XML configurations for HiveMQ.

If you are on a paid plan for OpenAI, you can find the customized GPT here: https://chat.openai.com/g/g-sFWcIIZ8h-hivemq-copilot

OpenAI has instructions on creating your own GPT. The following is an example of what we told it in order for it to provide us with the most relevant responses. We continued this process of telling it where in the documentation to draw on for different types of questions or solutions until we saw results we were happy with.

Crafting Prompts

While AI is very good at providing detailed answers from simple prompts, there is an art to nudging it in the right direction. ChatGPT will make many assumptions based on your initial prompt that may not be what you are looking for. That is why it is important to provide as much relevant information as possible and be very clear about the kind of results you want.

Here are some key tips to help you use something like the HiveMQ CoPilot.

Clearly describe your working environment: Are you running this on your laptop using Docker? Are you deploying on Kubernetes?

Define your expected output: “In your response include a Dockerfile and separate XML config files for each node in the cluster.”

Refine outputs: If the initial output isn't perfectly aligned with your needs, refine your prompt with additional details or clarify your requirements.

Define Requirements: Determine your security needs, such as TLS/SSL, authentication, and ports. "Generate a Dockerfile and XML configuration for a secure HiveMQ Broker with [your requirements]."

Identify Issues: Describe the problem you're facing in detail. Include error messages or behaviors. "Suggest fixes for [specific issue] in HiveMQ Docker deployment."
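The tips above can be sketched as a small helper that assembles a prompt from its parts. This is only an illustration: `PromptSpec` and `build_prompt` are hypothetical names, and the wording of the generated prompt is an assumption rather than a format the HiveMQ CoPilot requires.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the details the tips ask you to state."""
    environment: str                     # where the broker will run
    expected_output: str                 # what the response should contain
    requirements: list[str] = field(default_factory=list)  # security needs, ports, ...
    known_issue: str = ""                # error message or behavior, if debugging


def build_prompt(spec: PromptSpec) -> str:
    """Join environment, requirements, issue, and expected output into one prompt."""
    parts = [f"My environment: {spec.environment}."]
    if spec.requirements:
        parts.append("Requirements: " + "; ".join(spec.requirements) + ".")
    if spec.known_issue:
        parts.append(f"Current problem: {spec.known_issue}.")
    parts.append(f"In your response include {spec.expected_output}.")
    return " ".join(parts)


spec = PromptSpec(
    environment="a laptop running Docker",
    expected_output="a Dockerfile and separate XML config files for each node in the cluster",
    requirements=["TLS enabled", "username/password authentication"],
)
print(build_prompt(spec))
```

Keeping the parts separate makes it easy to change one detail, such as the environment, and re-run the prompt when the first answer misses the mark.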

Example Prompts

Let's tackle some real-world use cases that can be run against the newly created HiveMQ CoPilot. We start by crafting prompts that declare our working environment, define what we want it to create, and specify the output we expect.

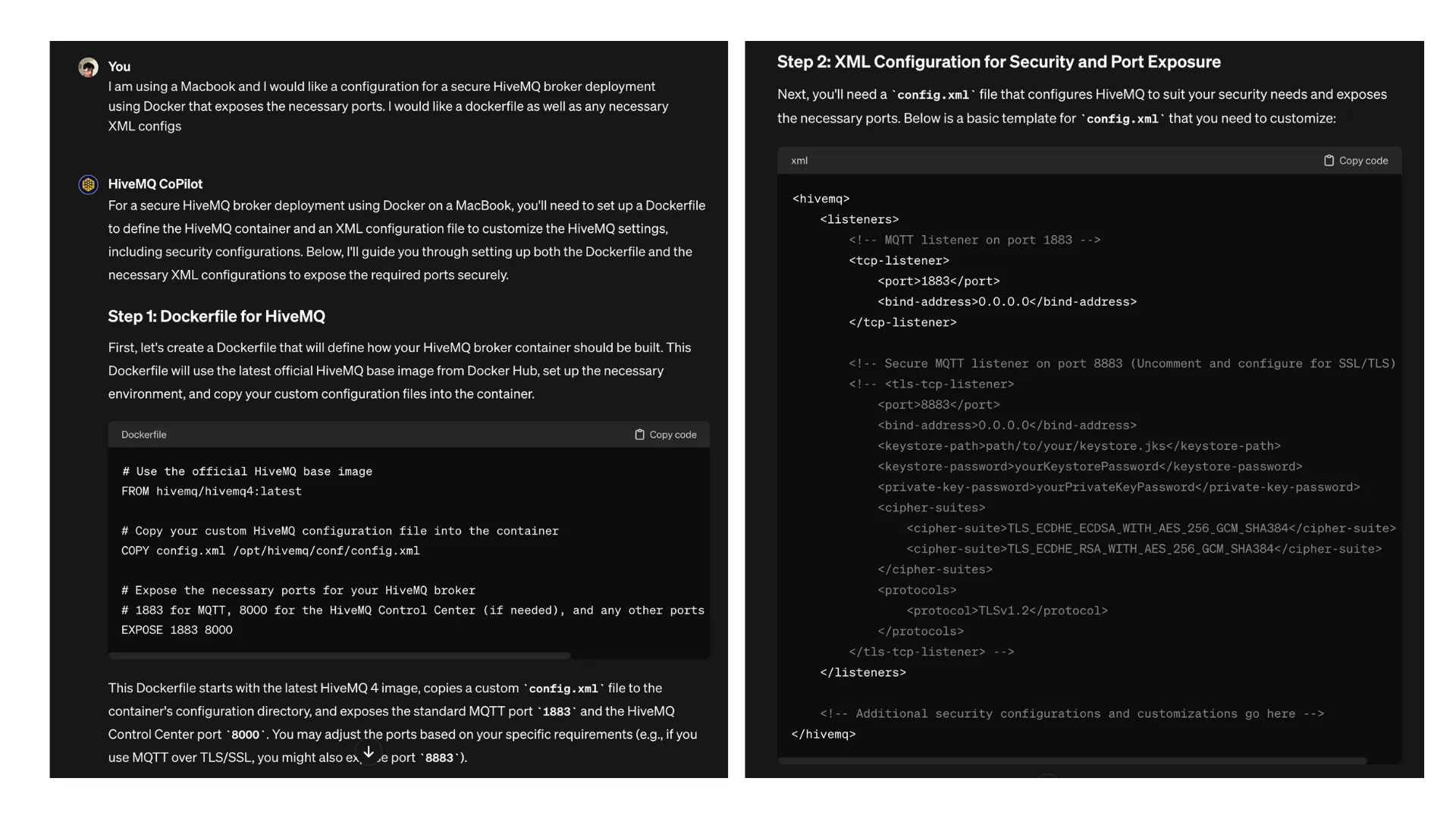

Generate Docker and XML Configuration for a Secure HiveMQ Broker

Prompt: “I am using a Macbook and I would like a configuration for a secure HiveMQ Broker deployment using Docker that exposes the necessary ports. I would like a dockerfile as well as any necessary XML configs.”

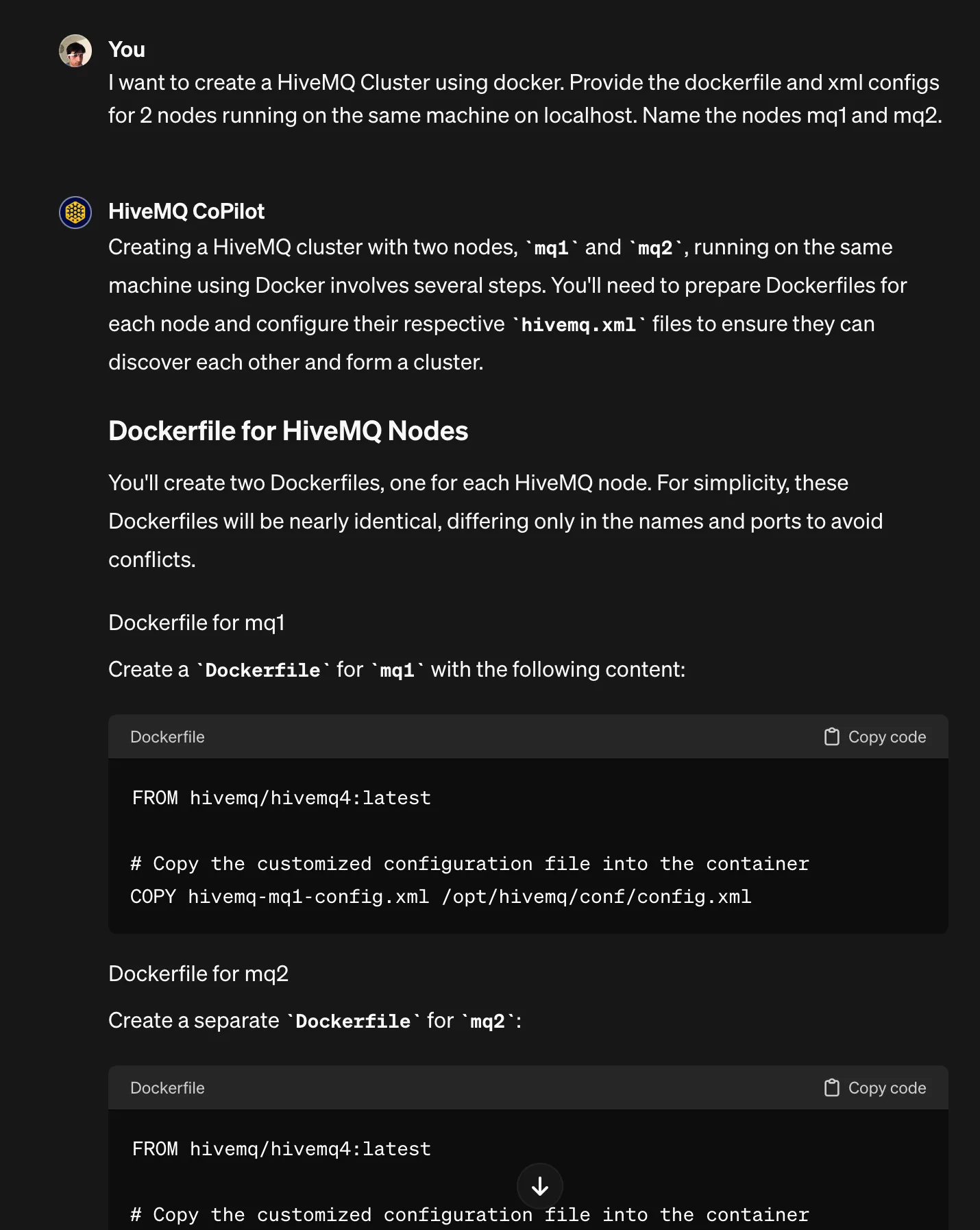

Generate a Local HiveMQ Cluster Deployment

Prompt: “I want to create a HiveMQ Cluster using Docker. Provide the dockerfile and xml configs for 2 nodes running on the same machine on localhost. Name the nodes mq1 and mq2.”

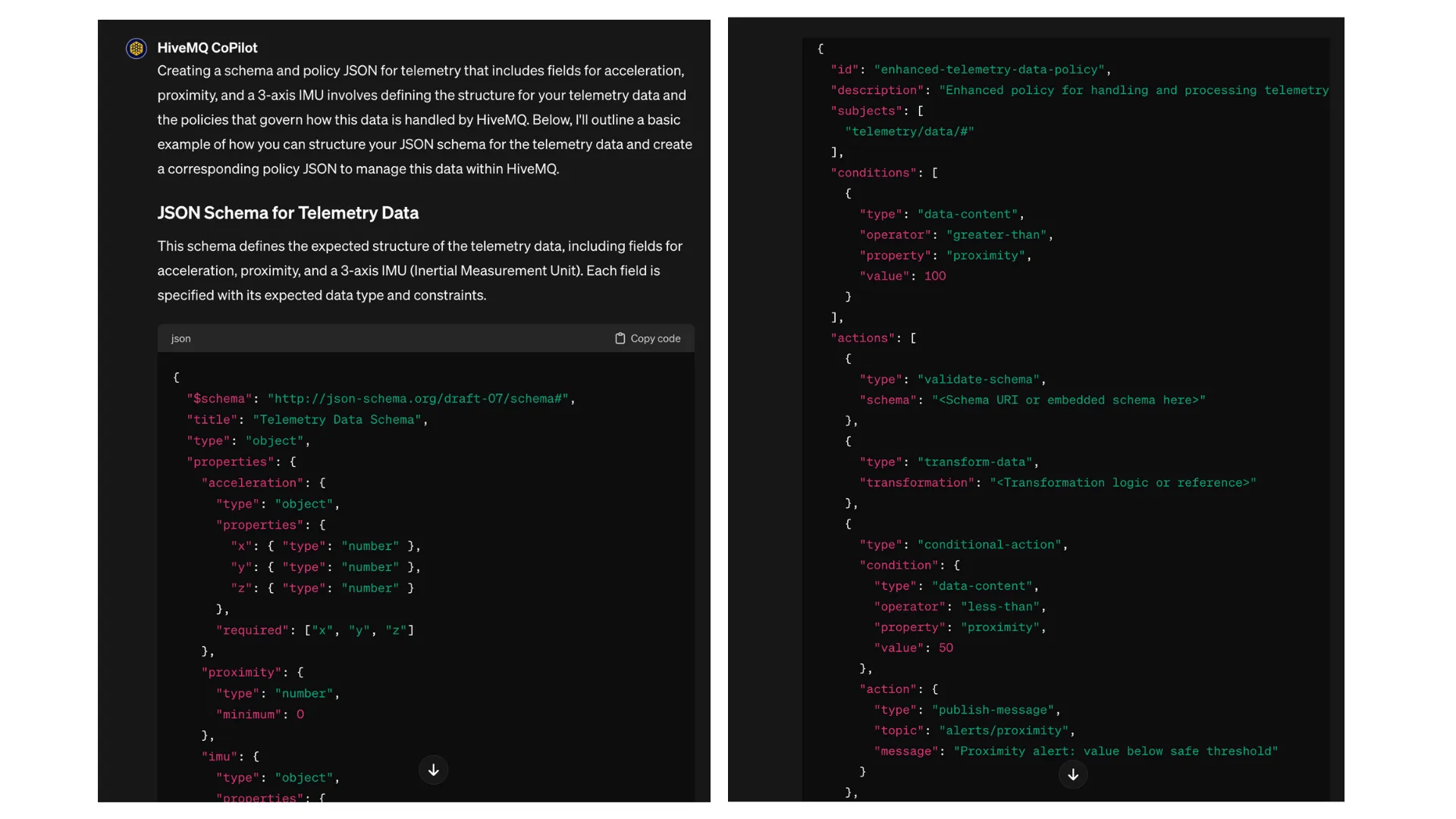
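For comparison with whatever the GPT returns, here is a minimal sketch of the kind of per-node XML such a prompt is aiming for, built with Python's standard library. The IP addresses and the cluster port are assumptions made for illustration, and the element names (`cluster`, `transport`, `discovery`, `static`) reflect our reading of the HiveMQ cluster documentation, so verify them against the current docs before use.

```python
import xml.etree.ElementTree as ET

# Hypothetical static IPs for the two containers on a user-defined Docker network.
NODES = {"mq1": "172.28.0.2", "mq2": "172.28.0.3"}
CLUSTER_PORT = 7800  # assumed cluster transport port
MQTT_PORT = 1883     # standard unencrypted MQTT port inside each container


def add_text(parent: ET.Element, tag: str, text: str) -> ET.Element:
    """Append <tag>text</tag> to parent and return the new element."""
    child = ET.SubElement(parent, tag)
    child.text = text
    return child


def cluster_config(node: str) -> str:
    """Build a config.xml string for one node using static discovery."""
    root = ET.Element("hivemq")

    listener = ET.SubElement(ET.SubElement(root, "listeners"), "tcp-listener")
    add_text(listener, "port", str(MQTT_PORT))
    add_text(listener, "bind-address", "0.0.0.0")

    cluster = ET.SubElement(root, "cluster")
    add_text(cluster, "enabled", "true")
    tcp = ET.SubElement(ET.SubElement(cluster, "transport"), "tcp")
    add_text(tcp, "bind-address", NODES[node])
    add_text(tcp, "bind-port", str(CLUSTER_PORT))

    # Every node lists all cluster members, including itself.
    static = ET.SubElement(ET.SubElement(cluster, "discovery"), "static")
    for address in NODES.values():
        member = ET.SubElement(static, "node")
        add_text(member, "host", address)
        add_text(member, "port", str(CLUSTER_PORT))

    ET.indent(root)  # pretty-print; requires Python 3.9+
    return ET.tostring(root, encoding="unicode")


for name in NODES:
    print(f"--- {name}/config.xml ---")
    print(cluster_config(name))
```

The two files differ only in the transport bind address, which is a useful thing to look for when reviewing the configs the GPT produces for mq1 and mq2.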

Generate and Validate JSON for HiveMQ Data Hub

Prompt: “Provide me with schema and policy JSON for telemetry that includes fields for acceleration, proximity, and a 3 axis IMU”
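To give a sense of what a usable answer to this prompt might contain, the sketch below builds a telemetry schema and a matching data policy as Python dictionaries. The field names, types, IDs (`telemetry-schema`, `telemetry-policy`), and topic filter are all assumptions, and the policy layout follows our reading of the Data Hub documentation, so check it against the current docs. The `missing_fields` helper is a hypothetical stand-in for a quick sanity check, not a full JSON Schema validator.

```python
import base64
import json

# JSON Schema for the telemetry payload; field names and types are assumptions.
axis = {"type": "number"}
telemetry_schema = {
    "type": "object",
    "properties": {
        "acceleration": {"type": "number"},
        "proximity": {"type": "number", "minimum": 0},
        "imu": {
            "type": "object",
            "properties": {"x": axis, "y": axis, "z": axis},
            "required": ["x", "y", "z"],
        },
    },
    "required": ["acceleration", "proximity", "imu"],
}

# Assumed request body for registering the schema: the definition is Base64-encoded.
schema_request = {
    "id": "telemetry-schema",
    "type": "JSON",
    "schemaDefinition": base64.b64encode(json.dumps(telemetry_schema).encode()).decode(),
}

# Assumed data policy: validate every message under telemetry/# against the schema.
policy = {
    "id": "telemetry-policy",
    "matching": {"topicFilter": "telemetry/#"},
    "validation": {
        "validators": [{
            "type": "schema",
            "arguments": {
                "strategy": "ALL_OF",
                "schemas": [{"schemaId": "telemetry-schema", "version": "latest"}],
            },
        }]
    },
    "onFailure": {
        "pipeline": [{
            "id": "logFailure",
            "functionId": "System.log",
            "arguments": {"level": "WARN", "message": "Invalid telemetry payload"},
        }]
    },
}


def missing_fields(payload: dict, schema: dict, prefix: str = "") -> list[str]:
    """List required fields absent from payload, descending into nested objects."""
    missing = []
    for name in schema.get("required", []):
        sub_schema = schema.get("properties", {}).get(name, {})
        if name not in payload:
            missing.append(prefix + name)
        elif sub_schema.get("type") == "object" and isinstance(payload[name], dict):
            missing += missing_fields(payload[name], sub_schema, prefix + name + ".")
    return missing


sample = {"acceleration": 9.8, "proximity": 1.2, "imu": {"x": 0.1, "y": 0.2}}
print(json.dumps(policy, indent=2))
print("missing:", missing_fields(sample, telemetry_schema))
```

Having a reference like this on hand makes it easier to tell actual Policy JSON from a conceptual description of one.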

Shortfalls

Remember that AI isn’t perfect. While it does a great job of approximating what you’re asking for, and on some occasions surpasses expectations, it can be limited. We tested the HiveMQ CoPilot custom GPT with various prompts, and it took quite a bit of work to correct its mistakes and train it to draw from specific documents when certain keywords were used. For example, when prompted to create Policy JSON, it initially kept returning a bulleted list of conceptual policies about the schema JSON rather than actual Policy JSON.

On occasion, ChatGPT will spit out what we call “hallucinations” and speak about them as if they are facts. For example, the screenshot below is from an initial prompt asking for a secure HiveMQ deployment. The ACL in this XML file is pure hallucination. If we were to search for that string in the documentation, we wouldn’t find it. If you encounter something like this or receive errors related to code that you suspect was a hallucination, you can amend it by training the custom GPT with more specific context and doc URLs or by adjusting the follow-up prompt. In this case, we would say:

“The acl.xml you provided is fiction; please refer to the docs for the Enterprise Security Extension found here https://docs.hivemq.com/hivemq-enterprise-security-extension/latest/index.html and adjust your response”

Through this process, we learned that ChatGPT can also be a powerful tool for debugging. When we would provide error logs, it would recognize its mistake and correct its responses. So while HiveMQ power users aren’t likely to use the GPT to generate config files, they could benefit from using it to debug or modify their existing setup.

Another pitfall is expecting the GPT to be aware of, or to solve, issues in the user’s own code and infrastructure that lie outside its control or domain. The HiveMQ CoPilot also has no knowledge of data published after its last training date, which is something to keep in mind, especially if your current task involves security. A good practice is to always check the AI’s work before putting anything into production.
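One lightweight way to check generated XML before it goes anywhere near production is sketched below. It confirms the file is well-formed and flags any element that is not on an allow-list you have built from the documentation. The `KNOWN_ELEMENTS` set here is a small hypothetical example, not a complete list of valid HiveMQ elements, and the `<acl>` block in the sample is a made-up stand-in for a hallucinated element.

```python
import xml.etree.ElementTree as ET

# Hypothetical allow-list: fill this in from element names you have confirmed in the docs.
KNOWN_ELEMENTS = {
    "hivemq", "listeners", "tcp-listener", "tls-tcp-listener", "port",
    "bind-address", "tls", "keystore", "path", "password", "private-key-password",
}


def review_config(xml_text: str) -> list[str]:
    """Return a list of findings; an empty list means nothing was flagged."""
    try:
        root = ET.fromstring(xml_text)
    except ET.ParseError as err:
        return [f"not well-formed XML: {err}"]

    findings = []
    if root.tag != "hivemq":
        findings.append(f"unexpected root element <{root.tag}>")
    for element in root.iter():
        if element.tag not in KNOWN_ELEMENTS:
            findings.append(f"<{element.tag}> is not on the allow-list, check the docs")
    return findings


# Made-up example of generated output containing an unverified <acl> section.
generated = """
<hivemq>
  <listeners>
    <tcp-listener><port>1883</port><bind-address>0.0.0.0</bind-address></tcp-listener>
  </listeners>
  <acl><file>acl.xml</file></acl>
</hivemq>
"""
for finding in review_config(generated):
    print(finding)
```

A check like this will not prove a configuration is correct, but it does surface the elements worth searching for in the documentation before you trust them.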

Conclusion

Through this guide, you've learned how to effectively leverage a customized ChatGPT for generating the backbone of your HiveMQ deployment, from Docker configurations to JSON schemas. The key to success lies in crafting detailed prompts and iteratively refining the AI's output to meet your exact requirements.

As you integrate these AI-generated configurations into your deployment process, remember to validate and adjust them based on your specific environment and needs. This approach not only streamlines the deployment process but also helps you build solutions more effectively. As a learning tool it can become an invaluable resource. While it may not always return ideal results, continuing to engage with it can eventually get you the response you want, or at least help you get closer to the answer yourself.

We hope you take the lessons learned here from our experimentation and apply them to create more robust, secure, and reliable deployments of HiveMQ to power your IoT projects and solutions.

We value your feedback and would love to know about the kind of projects you create using this approach. Join the HiveMQ Community, where you can learn and engage with other developers working with our products!